Ayush Shrivastava

@ayshrv

PhD at UMich, Georgia Tech & IIT (BHU) Varanasi Alum.

ID: 2926810536

http://ayshrv.com/ 11-12-2014 13:43:00

78 Tweets

396 Followers

1.1K Following

Seeing the World through Your Eyes paper page: huggingface.co/papers/2306.09… The reflective nature of the human eye is an underappreciated source of information about what the world around us looks like. By imaging the eyes of a moving person, we can collect multiple views of a scene

🚀 We've hit some big milestones with embedchain: • 300K apps • 53K downloads • 5.7K GitHub stars Every step taught us something new. Today, we're taking those lessons & introducing a platform to manage data for LLM apps. No waitlist, link in next tweet 👇🏻👇🏻👇🏻

In case you were wondering what’s going on with the back of the #CVPR2024 T-shirt: it’s a hybrid image made by Aaron Inbum Park and Daniel Geng! When you look at it up close, you’ll just see the Seattle skyline, but when you view it from a distance, the text “CVPR” should appear.

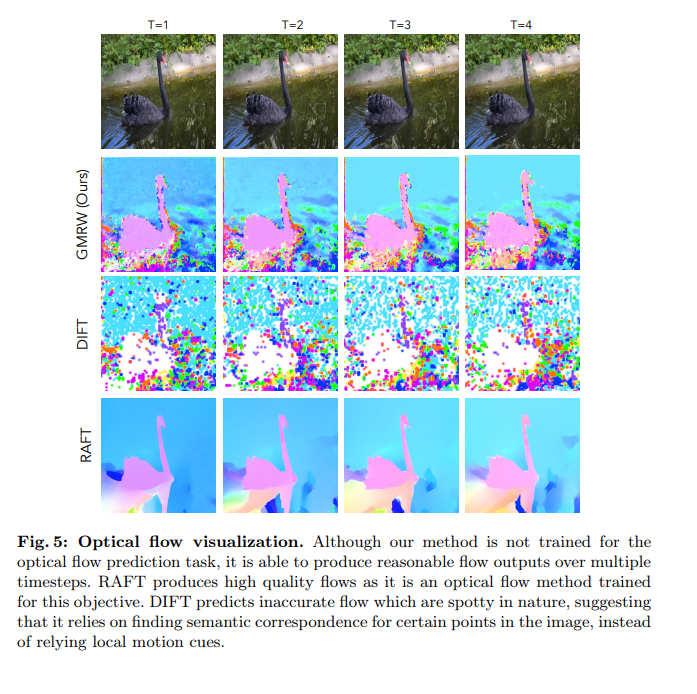
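The hybrid-image effect described above can be sketched with the classic frequency-split recipe. Keep only the low spatial frequencies of the image meant to be seen from afar (here, the "CVPR" text) and only the high frequencies of the image meant to be seen up close (the skyline), then add them. This is a minimal NumPy sketch for 2-D grayscale arrays in [0, 1]. The function names and the blur widths `sigma_far` and `sigma_near` are illustrative assumptions, and it is not necessarily how the T-shirt design itself was produced.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D Gaussian kernel truncated at about 3 sigma, normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian low-pass filter for a 2-D image (edge-padded, same size out)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Convolve every row, then every column, with the same 1-D kernel.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def hybrid_image(far_img: np.ndarray, near_img: np.ndarray,
                 sigma_far: float = 6.0, sigma_near: float = 2.0) -> np.ndarray:
    """Low frequencies of `far_img` plus high frequencies of `near_img`."""
    low = gaussian_blur(far_img, sigma_far)                  # coarse shapes, seen from a distance
    high = near_img - gaussian_blur(near_img, sigma_near)    # fine detail, seen up close
    return np.clip(low + high, 0.0, 1.0)
```

Swapping the two arguments reverses which picture dominates at which viewing distance; a larger `sigma_far` hides the far image more thoroughly at close range.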

Self-Supervised Any-Point Tracking by Contrastive Random Walks
Ayush Shrivastava, Andrew Owens
tl;dr: global matching transformer → self-attention → transition matrix → contrastive random walk → cycle-consistent track
arxiv.org/pdf/2409.16288
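The tl;dr pipeline above ends in a contrastive random walk. A minimal NumPy sketch of that final stage, in its generic form, assumes per-point feature vectors for each frame are already given (for example, produced by a matching network). Affinities become a row-stochastic transition matrix through a temperature-scaled softmax, the walk goes forward through the frames and back again, and the loss rewards each point for returning to where it started. The names `transition_matrix` and `cycle_walk_loss` and the temperature `tau` are hypothetical; this is not the paper's implementation.

```python
import numpy as np


def softmax_rows(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def transition_matrix(src: np.ndarray, dst: np.ndarray, tau: float = 0.07) -> np.ndarray:
    """Row-stochastic matrix: entry (i, j) is the probability of stepping from
    point i in `src` to point j in `dst`, from scaled dot-product affinities."""
    return softmax_rows(src @ dst.T / tau)


def cycle_walk_loss(frames: list[np.ndarray], tau: float = 0.07) -> float:
    """Walk forward through the frames and back (a palindrome such as
    f0 -> f1 -> f2 -> f1 -> f0), then penalize points that fail to return home."""
    path = frames + frames[-2::-1]
    walk = np.eye(frames[0].shape[0])
    for a, b in zip(path[:-1], path[1:]):
        walk = walk @ transition_matrix(a, b, tau)
    # Cross-entropy against the identity: the target for point i is point i.
    return float(-np.mean(np.log(np.clip(np.diag(walk), 1e-12, None))))
```

Because every step is a softmax, the whole round trip is differentiable, so in a real training setup the same loss could be minimized with respect to the network that produces the features.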

At #ECCV2024: a very simple, self-supervised tracking method! We train a transformer to perform all-pairs matching using the contrastive random walk. If you want to learn more, please come to our poster at 10:30am on Thursday (#214). w/ Ayush Shrivastava x.com/ayshrv/status/…

Video prediction foundation models implicitly learn how objects move in videos. Can we learn how to extract these representations to accurately track objects in videos _without_ any supervision? Yes! 🧵 Work done with: Rahul Venkatesh, Seungwoo (Simon) Kim, Jiajun Wu and Daniel Yamins