AvivTamarLab

@avivtamarlab

Aviv Tamar's Robot Learning Lab at Technion ECE

ID: 1756314953613537280

10-02-2024 13:52:30

14 Tweets

15 Followers

1 Following

Enough games. The RL field needs to mature. New blog post with shiemannor avivtamar.substack.com/p/deployablerl…

Meta-RL is all about inferring the task from a history of observations. But how best to learn a history embedding? In ContraBAR (#ICML2023 w/ Era Choshen) we investigate a contrastive learning approach. Paper: arxiv.org/pdf/2306.02418… Code: github.com/ec2604/ContraB… 👇

Check out these beautiful videos 🤩 Deep *dynamic* latent particles is a new object-based video prediction method, led by Tal Daniel. Key idea: latent variables = particles, which makes it easier to learn latent dynamics 👇

We recently had a bit of a breakthrough in generalization in RL, led by Ev Zisselman. TL;DR: learning MaxEnt exploration generalizes better than maximizing reward. We use this to set a new SOTA on ProcGen and significantly improve on hard games like Heist! #NeurIPS2023 Details👇

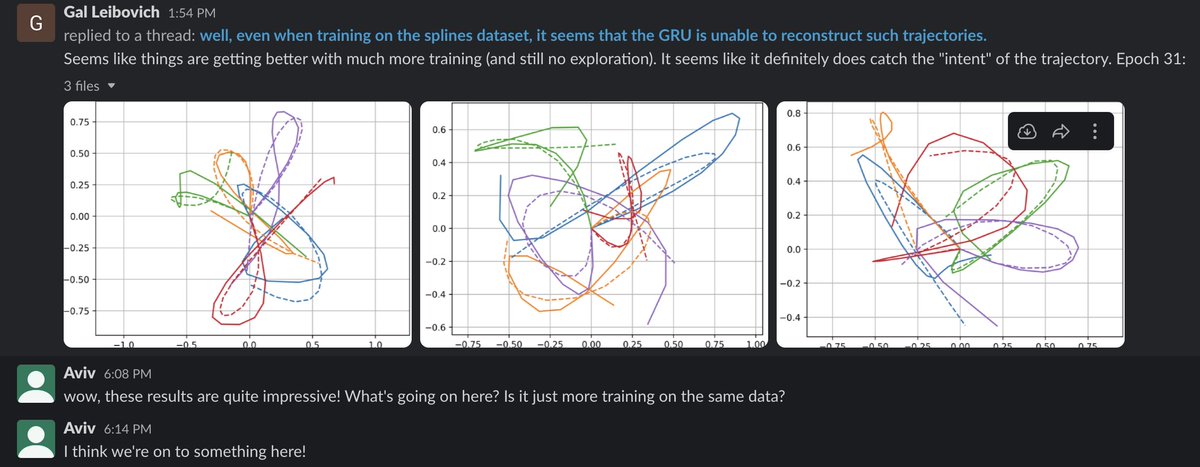

Our work "MAMBA: An Effective World Model Approach for Meta Reinforcement Learning" got accepted to ICLR 2025! It was super fun working on this one with tom jurgenson, Orr Krupnik, Gilad Adler, and Aviv Tamar. Paper: arxiv.org/abs/2403.09859 Code: github.com/zoharri/mamba 🧵 [1/9]

Generalization in RL is hard. Compositional generalization is even harder… We made some progress in our #ICLR2024 spotlight w/ Dan Haramati and Tal Daniel: RL trains a robotic manipulation policy that generalizes to different numbers of objects. Code+paper: sites.google.com/view/entity-ce…

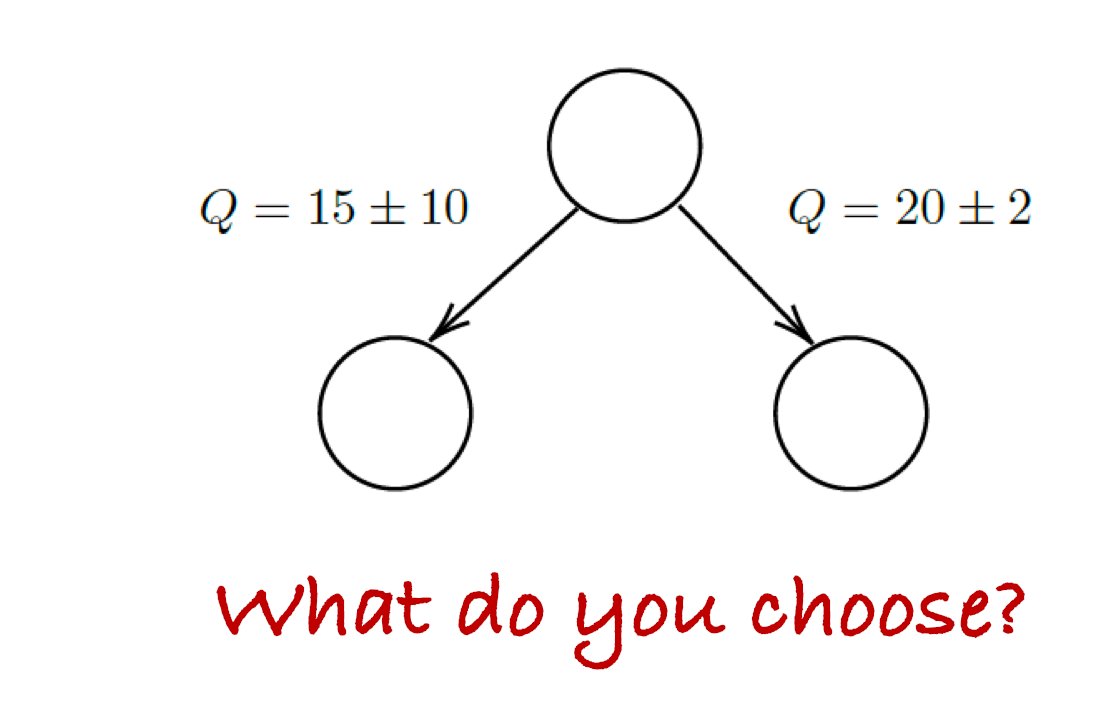

When does meta-training truly benefit RL efficiency? In our #ICML2024 paper, Aviv Tamar and I analyse the conditions under which fast regret rates can be achieved at test time. arxiv.org/abs/2406.02282 1/5

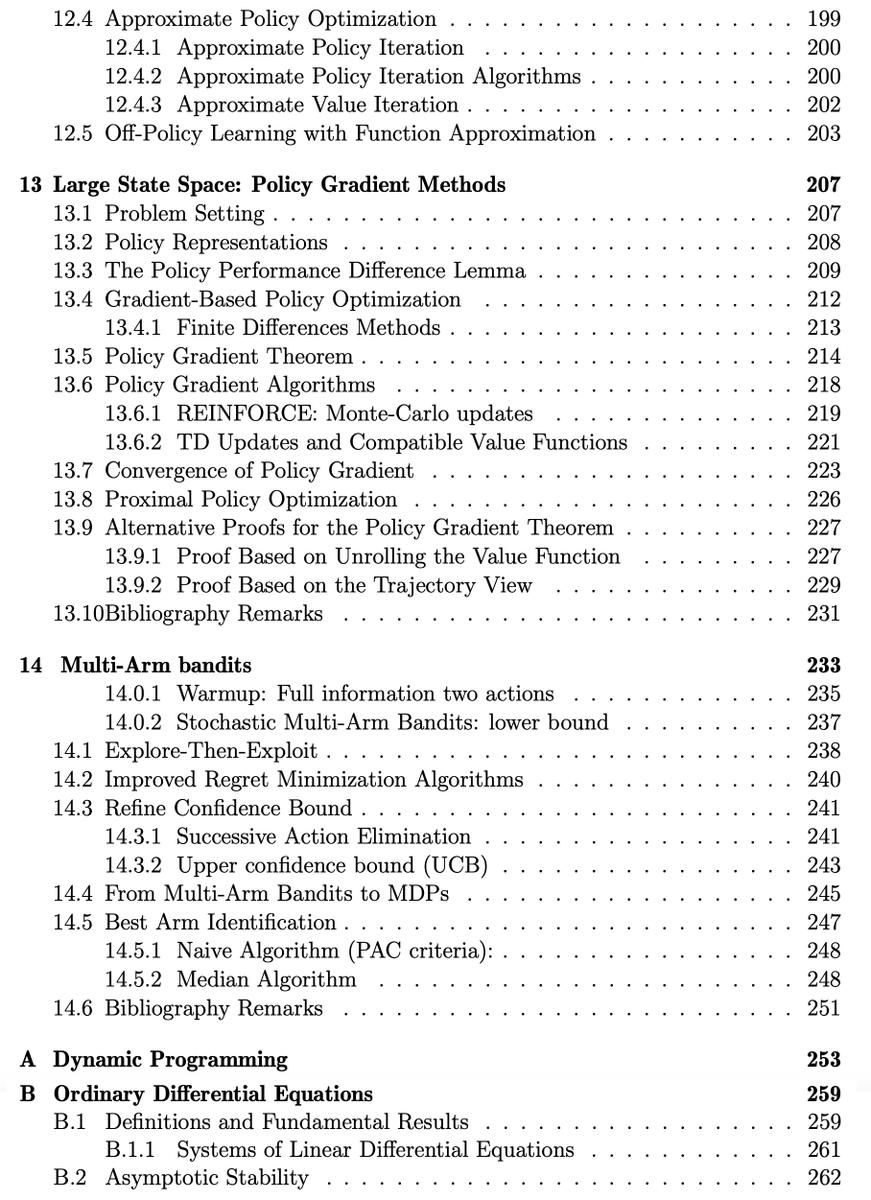

Want to learn / teach RL? Check out our new book draft: Reinforcement Learning - Foundations sites.google.com/view/rlfoundat… w/ shiemannor and Yishay Mansour. This is a rigorous first course in RL, based on our teaching at TAU CS and Technion ECE.