Arthur Zucker

@art_zucker

Head of transformers @huggingface 🤗

ID: 1444622906756063235

03-10-2021 11:18:53

893 Tweets

4.4K Followers

465 Following

Stop writing CUDA kernels yourself: we have launched Kernel Hub, easy optimized kernels for all models on Hugging Face Hub 🔥 Use them right away! It's where the community populates optimized kernels 🤝 Keep reading 😏

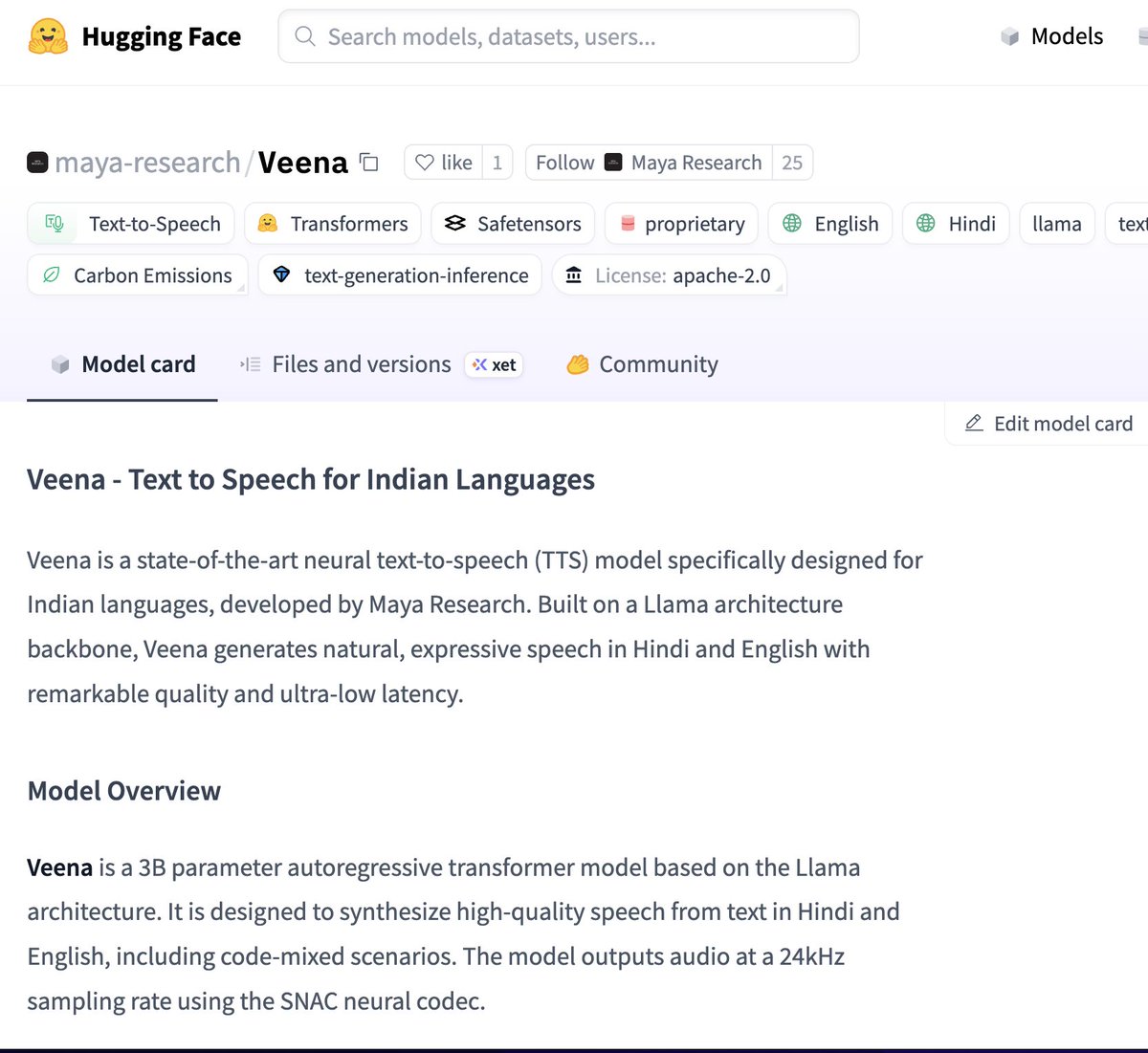

Finally, a TTS model that sounds Indian 🇮🇳. Also commendable that they open-sourced it and made it available on Hugging Face. Congrats Maya Research

I’m so excited to announce Gemma 3n is here! 🎉

🔊 Multimodal (text/audio/image/video) understanding

🤯 Runs with as little as 2GB of RAM

🏆 First model under 10B with lmarena.ai score of 1300+

Available now on Hugging Face, Kaggle, llama.cpp, ai.dev, and more