Ari Morcos

@arimorcos

CEO and Co-founder @datologyai working to make it easy for anyone to make the most of their data. Former: RS @AIatMeta (FAIR), RS @DeepMind, PhD @PiN_Harvard.

ID: 29907525

http://www.datologyai.com

09-04-2009 03:18:43

1.1K Tweets

6.6K Followers

1.1K Following

Join DatologyAI if you have conviction on #4

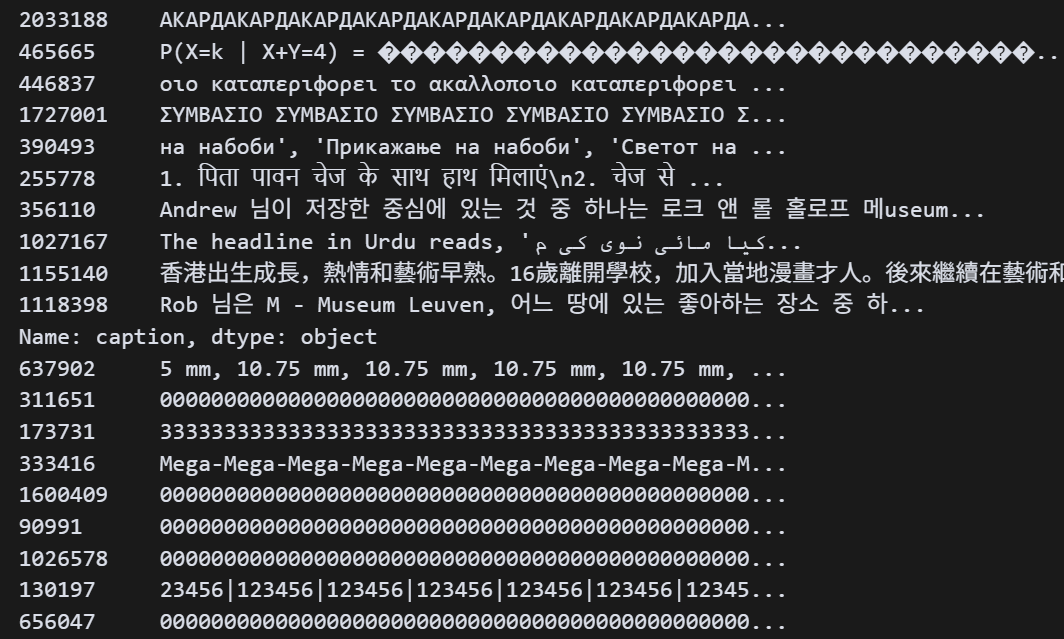

We teamed up with DatologyAI to build what we believe is the strongest pretraining corpus in the world—and I truly think we nailed it. Their team was absolutely key to the model’s success. We started with ~23T tokens of high-quality data and distilled it down to 6.58T through

This trend will only continue. Training your own model doesn't need to cost 10s of millions, especially in specialized domains. Better data is a compute multiplier, and DatologyAI's mission is to make this easy, massively reducing the cost and difficulty of training.

Data powered by DatologyAI 😎

Training costs have definitely been going down, but I think there's still a massive barrier when it comes to data, especially if you want to train on proprietary datasets. At DatologyAI, we are razor-focused on changing that.

🌞 We're excited to share our "Summer of Data Seminar" series at DatologyAI! We're hosting weekly sessions with brilliant researchers diving deep into pretraining, data curation, and everything that makes datasets tick. Are you data-obsessed yet? 🤓 Thread 👇