Simon Yu

@simon_ycl

1st Year PhD Student, supervised by @shi_weiyan | Incoming intern in @OrbyAI | MRes and BSc Student @EdinburghNLP | Member of @CohereForAI

ID: 3582852312

https://simonucl.github.io/ 16-09-2015 13:07:23

165 Tweets

290 Followers

623 Following

🌍 Language shapes how we think and connect—but most AI models still struggle beyond English. Microsoft's July seminar discussed how we can bridge the gap and build #AIforEveryone with Marzieh Fadaee of Cohere Labs. 📽️microsoft.com/en-us/research…

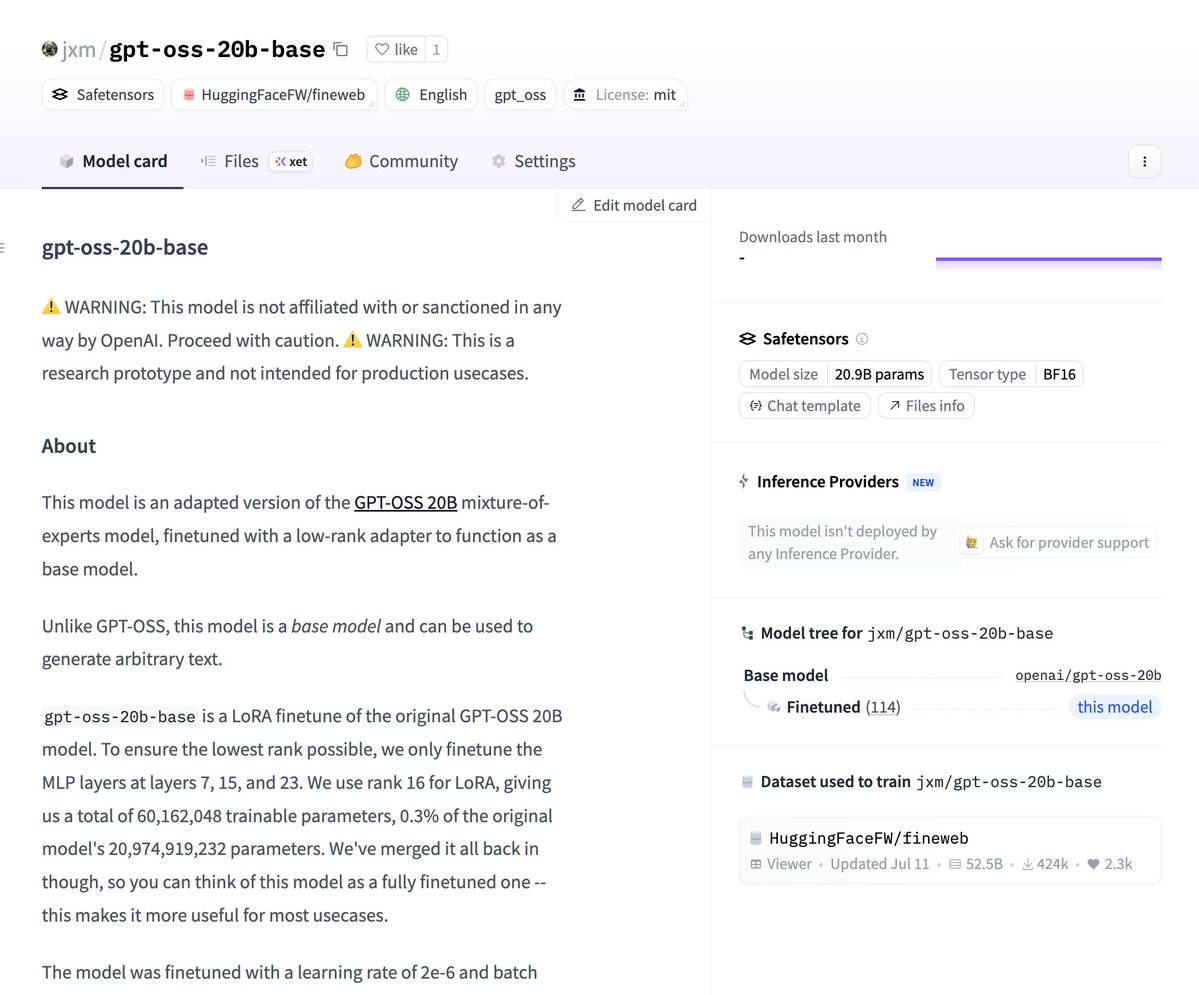

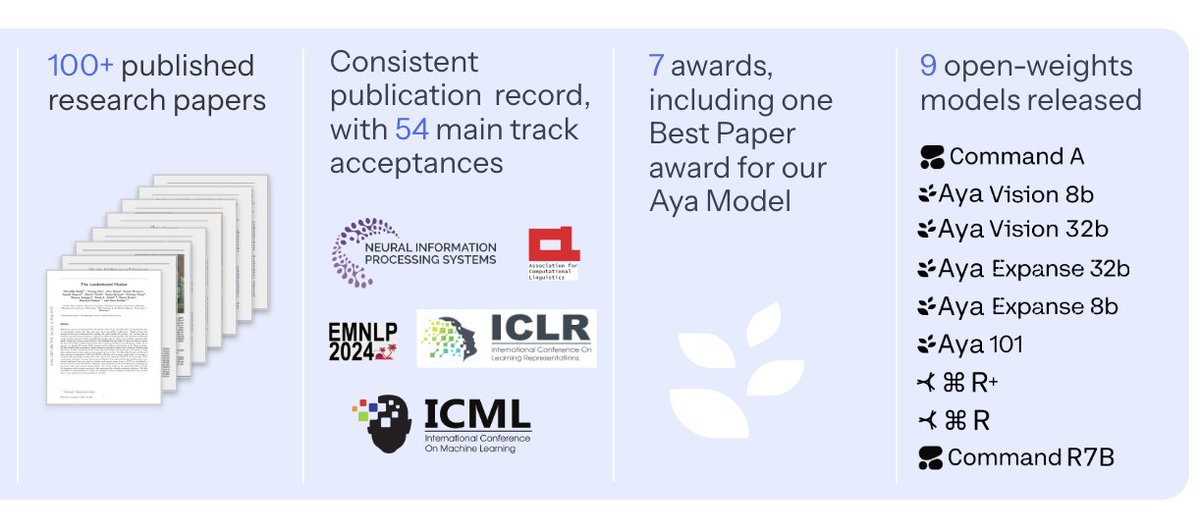

A little thank you from the Cohere Labs team. ✨ Thank you to everyone who has supported our work -- we just hit a special milestone. We have released 100 papers involving more than 150 institutions. 🔥

Teortaxes▶️ (DeepSeek 推特🐋铁粉 2023 – ∞) kalomaze Teknium (e/λ) it’s a nice idea, totally seems plausible that you can approximate some aspects of offline RL with a tweaked SFT objective, though for these experiments the most likely story is it’s triggering the same mode-collapse behavior that boosts scores in many malformed Qwen GRPO setups

🚀 Still have a chance to submit to NeurIPS Conference for our Multi-Turn Workshop! 🏆 Best Paper Awards 🎓 10-15 Registration Waivers for student authors 🎤 New panelist: will brown from @primeintellect! ⏳ Deadline is August 22—only 10 days left! 🎉 Thanks to our sponsor

📢 4 days left to submit to the Workshop on Multi-Turn Interaction for LLMs at #NeurIPS2025! Exciting updates: 🥂 We're partnering with Prime Intellect to co-host a post-event reception! A great chance to connect with researchers from industry & academia. 🤖 Thrilled to have

thanks to will brown and Prime Intellect for their support at our workshop! they also just released Environment Hub, a diverse collection of envs for RL training and evals

Submit to our workshop and join our post-event reception together with Prime Intellect 🥳🥳🥳