Shenghao Yang

@shenghao_yang

PhD student @UWCheritonCS. Machine learning and optimization over graphs. opallab.ca

ID: 1361877766233337858

https://cs.uwaterloo.ca/~s286yang 17-02-2021 03:19:07

47 Tweets

170 Followers

71 Following

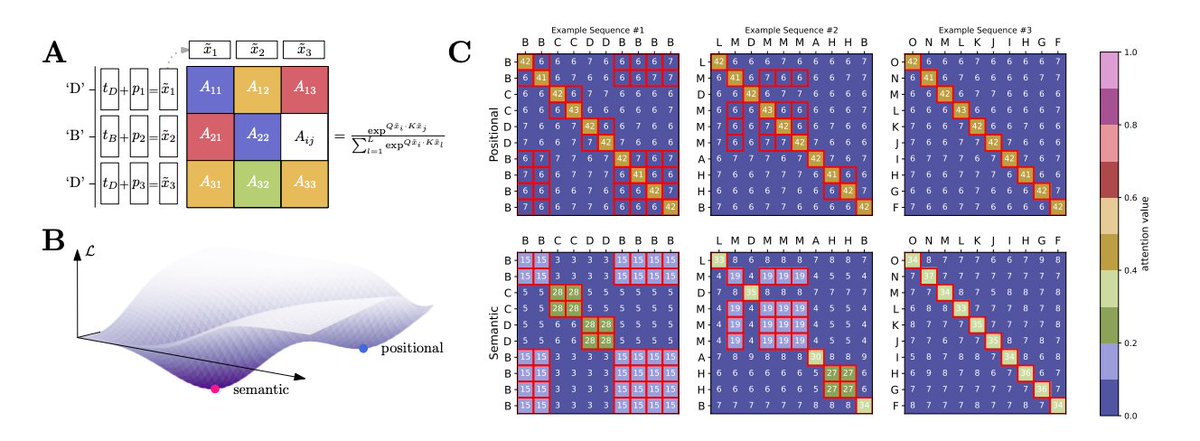

New video with Prof. Kimon Fountoulakis explaining his paper "Graph Attention Retrospective" is now available! youtu.be/duWVNO8_sDM Check it out to learn what GATs can and cannot learn for node classification in a stochastic block model setting!

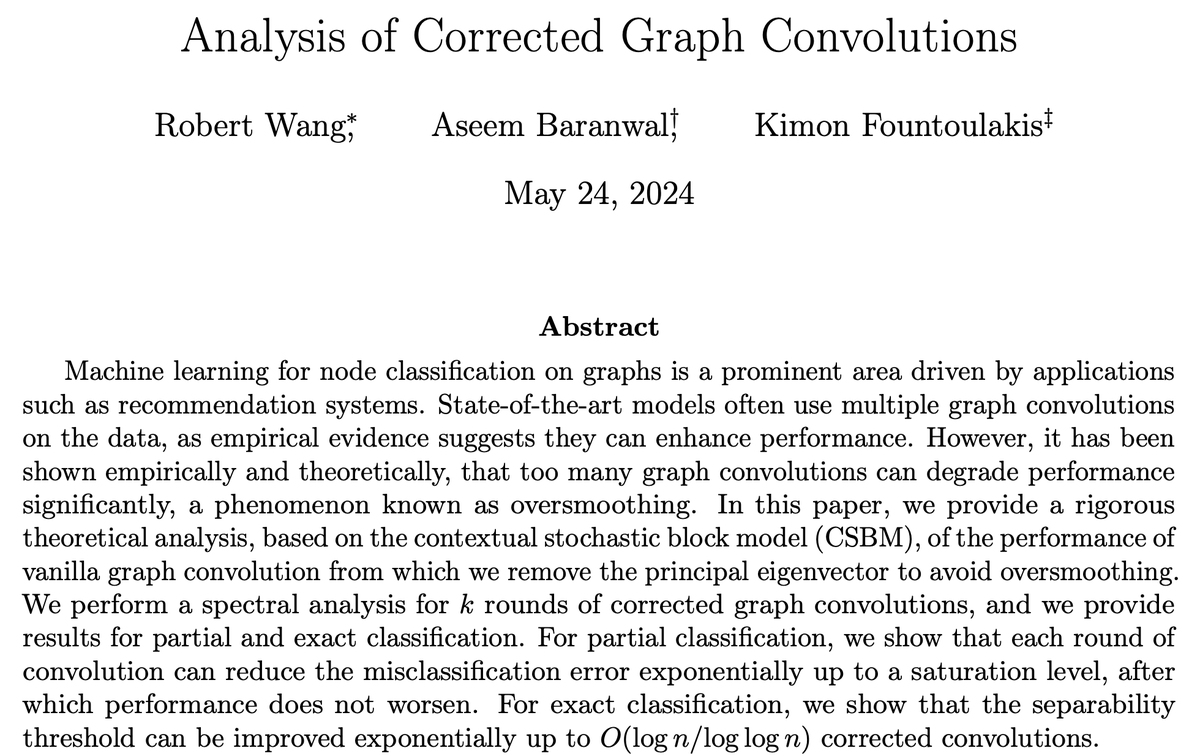

Here's our new work on the optimality of message-passing architectures for node classification on sparse feature-decorated graphs! Thanks to my advisors and co-authors Kimon Fountoulakis and Aukosh Jagannath. Details within the quoted tweet.

The November issue of SIAM News is now available! In this month's edition, Nate Veldt finds that even a seemingly minor generalization of the standard #hypergraph cut penalty yields a rich space of theoretical questions and #complexity results. Check it out! sinews.siam.org/Details-Page/g…

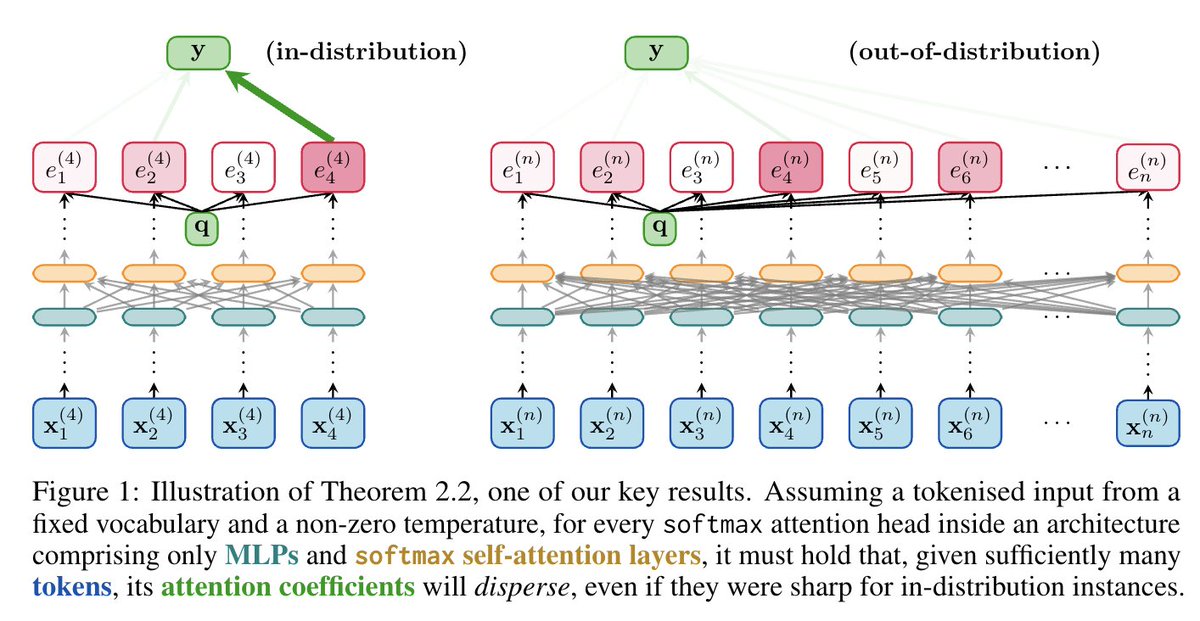

"Energy continuously flows from being concentrated, to becoming dispersed, spread out, wasted and useless." ⚡➡️🌬️ Sharing our work on the inability of softmax in Transformers to _robustly_ learn sharp functions out-of-distribution. Together w/ Christos Perivolaropoulos, Federico Barbero & Razvan!

My PhD thesis is now available on UWspace: uwspace.uwaterloo.ca/items/291d10bc…. Thanks to my advisors Kimon Fountoulakis and Aukosh Jagannath for their support throughout my PhD. We introduce a statistical perspective for node classification problems. Brief details are below.

🎉 Huge congratulations to PhD student Peihao Wang on two major honors: 🏆 2025 Google PhD Fellowship in Machine Learning & ML Foundations 🌟 Stanford Rising Star in Data Science. Incredibly proud of Peihao's outstanding achievements! 🔶⚡