✦ Rishiraj Acharya

@rishirajacharya

@GoogleDevExpert in ML ✨ | @Google SoC '22 @TensorFlow 👨🏻‍🔬 | @MLKolkata Organizer 🎙️ | @HuggingFace Fellow 🤗 | @Kaggle Master 🧠 | @Tensorlake MLE 👨🏻‍💻

ID: 2958082448

https://rishirajacharya.com · Joined 04-01-2015 06:27:07

1.1K Tweets

1.1K Followers

396 Following

Sam Altman Lots of people need to be awake when load is high?

Want to pull models from Ollama Hub and then serve them using libraries other than Ollama itself, such as llama.cpp? Here's how you can easily run Google DeepMind's Gemma 3 using llama.cpp: colab.research.google.com/github/xprilio… Thanks to ✦ Rishiraj Acharya for providing the GGUF loading and
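One way to handle the first step, sketched under assumptions: after something like `ollama pull gemma3`, Ollama keeps the weights as a content-addressed blob that a JSON manifest points to. The code assumes Ollama's default layout (`~/.ollama/models`, manifests under `registry.ollama.ai/library/<model>/<tag>`, blob files named `sha256-<hex>`) and the `application/vnd.ollama.image.model` media type for the weights layer. These are internal details that may change between Ollama versions, the helper names are hypothetical, and this is not necessarily what the linked Colab does.

```python
import json
from pathlib import Path

# Assumption: Ollama's default on-disk location; it can differ by platform,
# by version, or when the OLLAMA_MODELS environment variable is set.
OLLAMA_MODELS = Path.home() / ".ollama" / "models"
# Assumption: the media type Ollama uses for the GGUF weights layer.
MODEL_MEDIA_TYPE = "application/vnd.ollama.image.model"


def gguf_blob_from_manifest(manifest: dict, models_dir: Path = OLLAMA_MODELS) -> Path:
    """Return the blob path of the weights layer listed in an Ollama manifest."""
    for layer in manifest.get("layers", []):
        if layer.get("mediaType") == MODEL_MEDIA_TYPE:
            # Digests look like "sha256:<hex>"; blob files are named "sha256-<hex>".
            return models_dir / "blobs" / layer["digest"].replace(":", "-")
    raise ValueError("no model weights layer found in manifest")


def find_gguf(model: str, tag: str = "latest", models_dir: Path = OLLAMA_MODELS) -> Path:
    """Locate the GGUF blob for a pulled model, e.g. find_gguf("gemma3")."""
    manifest_path = models_dir / "manifests" / "registry.ollama.ai" / "library" / model / tag
    manifest = json.loads(manifest_path.read_text())
    return gguf_blob_from_manifest(manifest, models_dir)
```

The returned path can then be handed to llama.cpp, for example via `llama-cli -m <path>`. Whether a given blob loads as-is depends on the model and on the Ollama and llama.cpp versions involved; multimodal models such as Gemma 3 may need extra handling.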

Excited to share that I’m now a @GoogleDevExpert in 3 categories: AI, Google Cloud, and most recently Kaggle. I've spent 11 years on Kaggle, mentored BIPOC women via KaggleX, and recently onboarded 100+ learners. Catch me talking about Gemma, Gemini, Agentic AI, and Kaggle at Cloud Community Days APAC

For the first time, Aakash Kumar Nain is giving an in-person talk & he chose Kolkata for that. Then you will have a talk from Aritra Roy Gosthipaty, too. This is an invite-only event. Make sure to come with specific questions in mind and give the speakers a hard time. partiful.com/e/QLrn6A2QWFIl…

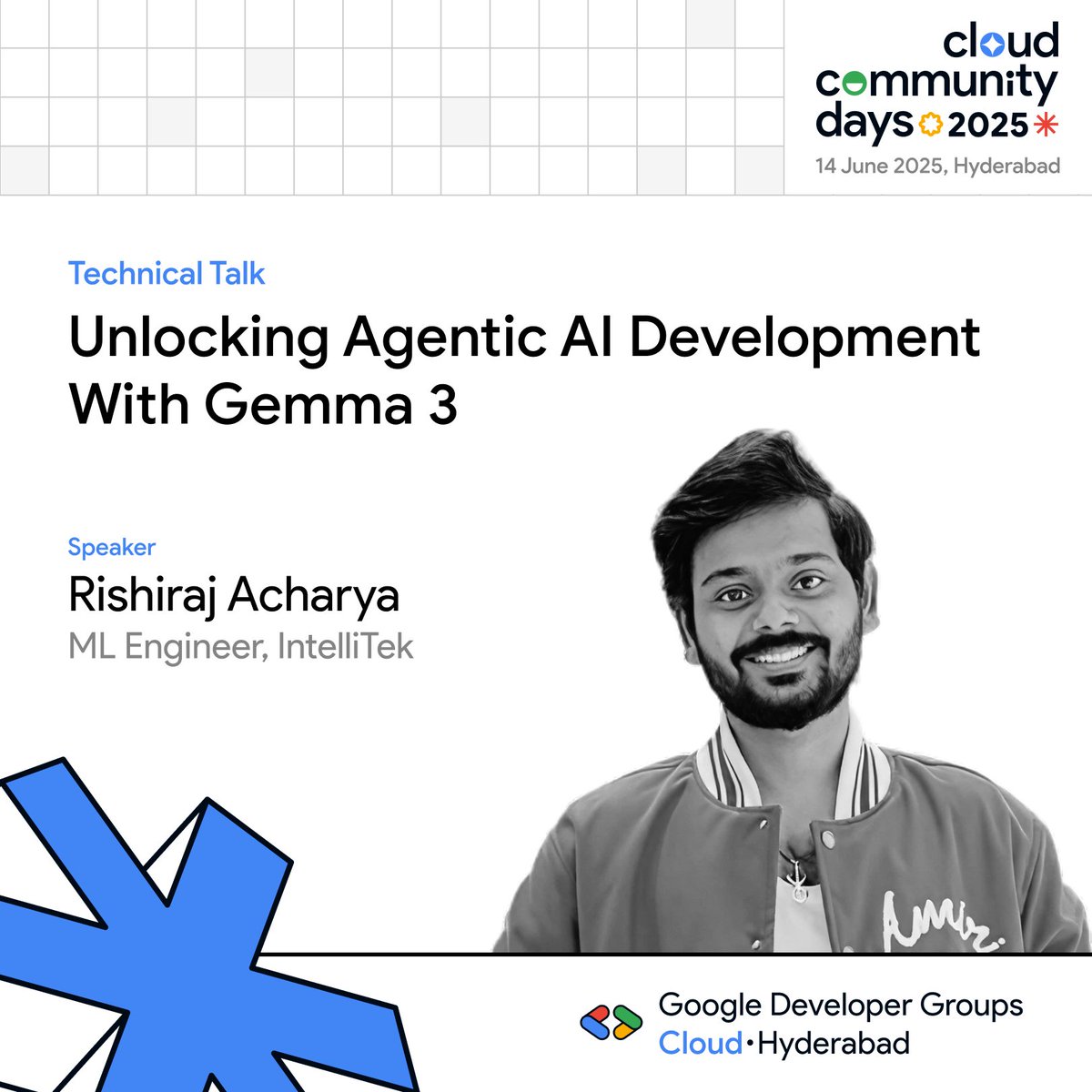

📢 Meet our next speaker: ✦ Rishiraj Acharya, ML Engineer at IntelliTek & GDE (AI/Cloud/Kaggle). Topic: Unlocking Agentic AI Dev with Gemma 3. Learn how to build intelligent agents with function calling, planning & reasoning using open models like Gemma! ✨ #GCCDHyderabad #AgenticAI

It was only a matter of time, and IMHO the correct decision by Hugging Face. If Google are reducing investment in those frameworks, why should others carry them?

We (Aritra Roy Gosthipaty and ✦ Rishiraj Acharya) got Aakash Kumar Nain to give a PHYSICAL talk in Kolkata!

At ICLR this year, Sayak Paul and I had a simple idea: form a club around ML in the City of Joy (where we come from). Today we hosted Aakash Kumar Nain, who flew all the way from Delhi to talk about Small Language Models. I think we are on our way to forming a good club with like-minded

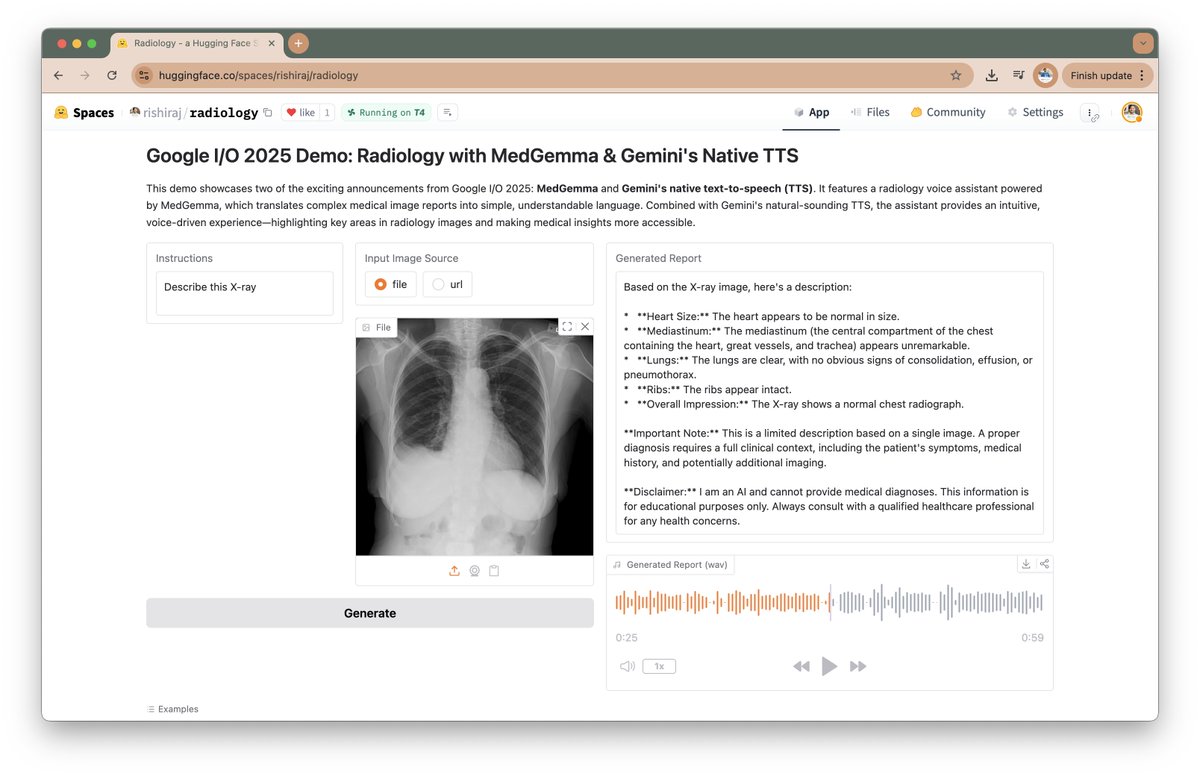

🔍 Think your quantized LLM is “just as good” because MMLU barely dropped? Think again. Your model might be flipping answers and silently degrading, even when the score looks fine. 🚨 It's time to measure KLD (KL divergence), not just accuracy. Full Hugging Face post 👉 huggingface.co/blog/rishiraj/…