Rahul Venkatesh

@rahul_venkatesh

CS Ph.D. student at Stanford @NeuroAILab @StanfordAILab

ID: 88371693

https://rahulvenkk.github.io/ 08-11-2009 07:16:27

27 Tweets

114 Followers

253 Following

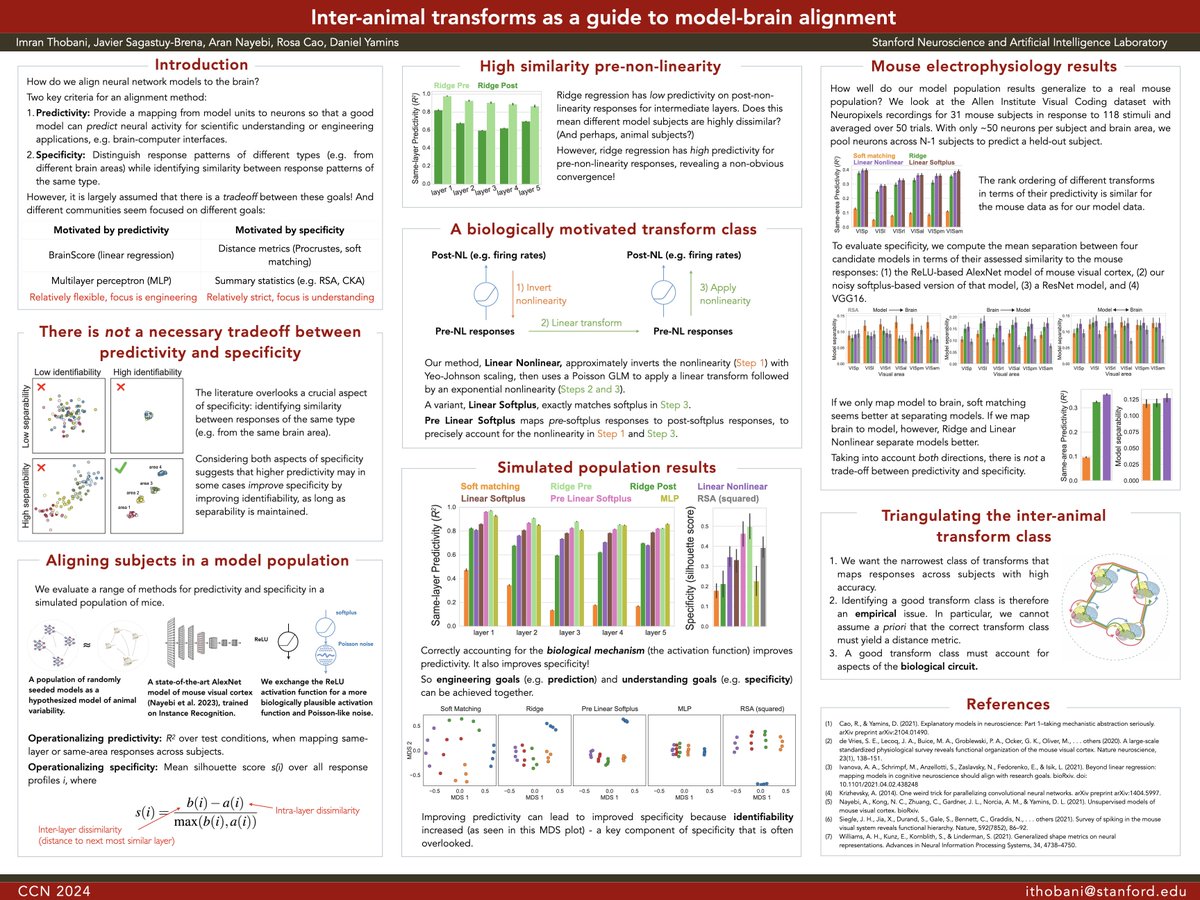

Excited to give a talk on our work (w/ Javier Sagastuy, mohammad hossein, Rosa Cao, Daniel Yamins) on inter-animal transforms at the CogCompNeuro Battle of the Metrics (5:15-7 pm EST)! We develop a principled approach to measuring similarity between DNNs and the brain. #CCN2024

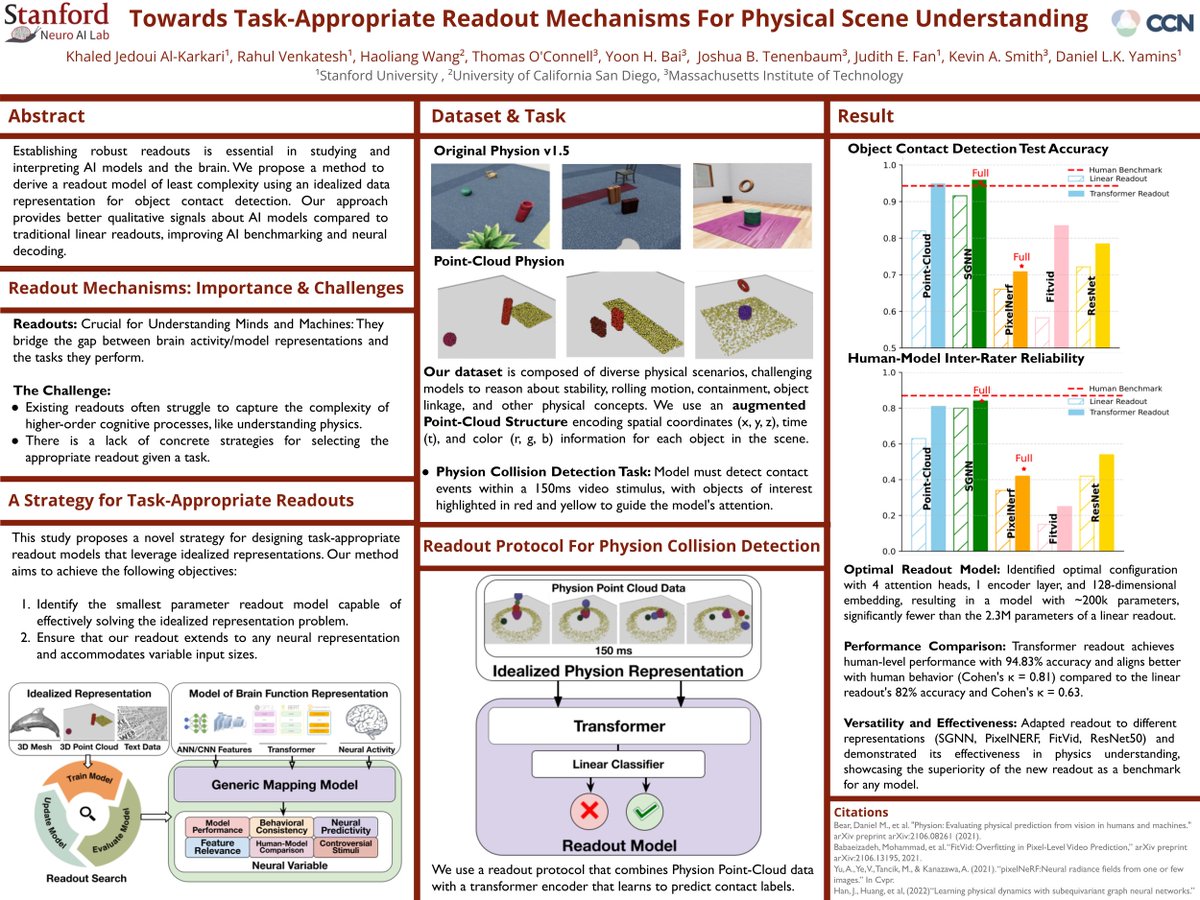

Excited to present at #CCN2024! Join me and Daniel Yamins today at 11:15-1:15 (C54) for our poster: "Towards Task-Appropriate Readout Mechanisms For Physical Scene Understanding". We propose a novel strategy for designing task-appropriate readout models using idealized representations

New paper on self-supervised optical flow and occlusion estimation from video foundation models, with Stefan Stojanov, Jiajun Wu, Seungwoo (Simon) Kim, and Rahul Venkatesh: tinyurl.com/dpa3auzd

Excited to share our recent work on self-supervised discovery of motion concepts with counterfactual world modeling. It has been a privilege to work on this project with amazing collaborators Stefan Stojanov, Seungwoo (Simon) Kim, David Wendt, Kevin Feigelis, Jiajun Wu, and Daniel Yamins.

Video prediction foundation models implicitly learn how objects move in videos. Can we learn how to extract these representations to accurately track objects in videos _without_ any supervision? Yes! 🧵 Work done with: Rahul Venkatesh, Seungwoo (Simon) Kim, Jiajun Wu and Daniel Yamins