Pierric Cistac

@pierrci

🤗 Hub @huggingface

ID: 125798546

http://hf.co 23-03-2010 22:30:47

558 Tweets

1.1K Followers

992 Following

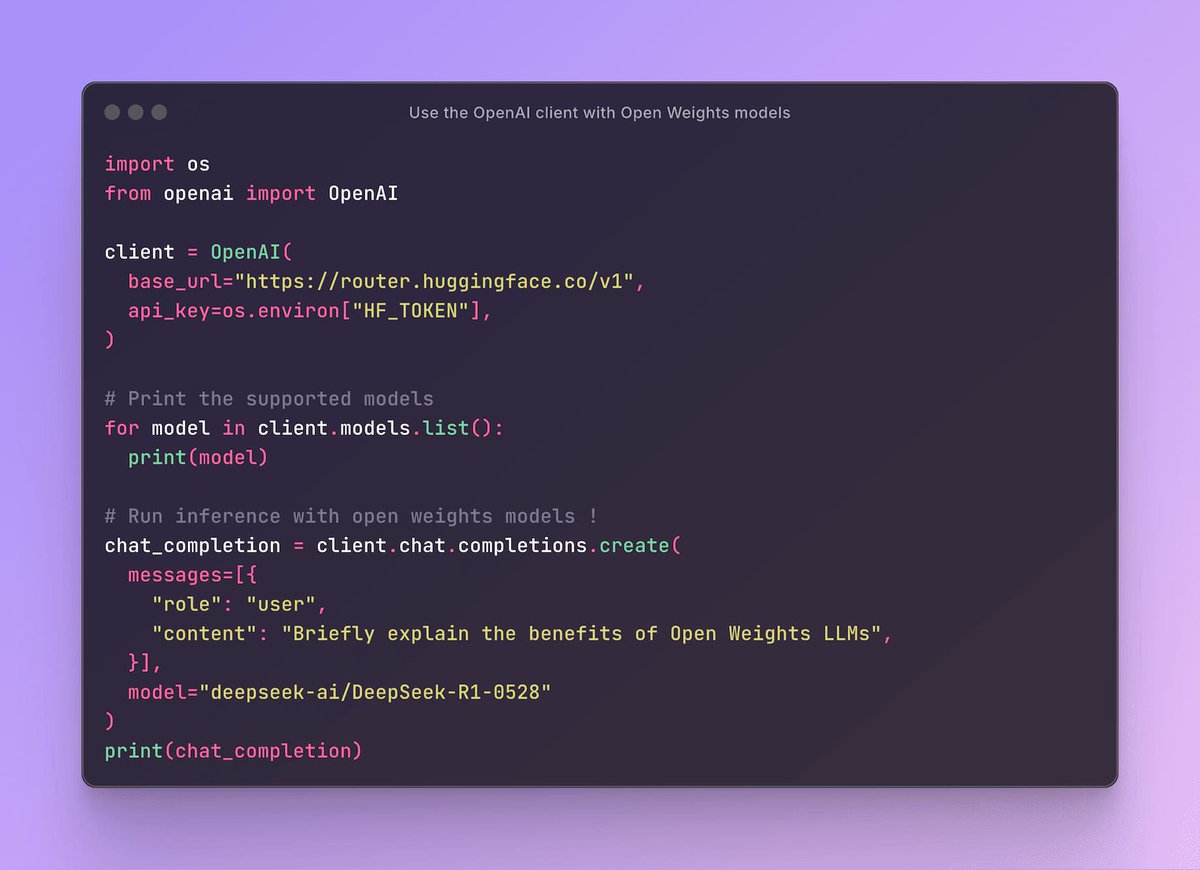

An easy way to use open-weight models without changing much in your workflow: use Hugging Face Inference Providers! Compatible with the OpenAI client, with automatic provider selection 📕 Docs: hf.co/docs/inference… 🔗 Snippet: ray.so/jLkS4WT
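The tweet says Inference Providers works with the OpenAI client. The sketch below shows that idea using only the Python standard library. It builds the kind of chat-completion request the OpenAI client would send. It rests on three assumptions:

- The OpenAI-compatible base URL is `https://router.huggingface.co/v1`.
- A Hugging Face access token is passed in as a bearer token.
- When no provider is named, the router picks one automatically, as the tweet describes.

The model ID `openai/gpt-oss-120b` is only an illustrative example, and `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible base URL for Hugging Face Inference Providers.
HF_ROUTER_BASE_URL = "https://router.huggingface.co/v1"


def build_chat_request(model: str, prompt: str, token: str) -> urllib.request.Request:
    """Hypothetical helper: build (without sending) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # illustrative model ID, e.g. "openai/gpt-oss-120b"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{HF_ROUTER_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The Hugging Face access token is sent as a bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Hypothetical helper: send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

To send a request, call `send(build_chat_request("openai/gpt-oss-120b", "Hello!", os.environ["HF_TOKEN"]))` and read the reply from `["choices"][0]["message"]["content"]`. With the official `openai` package, the equivalent is to pass the same base URL and token to `OpenAI(base_url=..., api_key=...)`.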

Jan v0.6.5 is out: SmolLM3-3B now runs locally. Highlights 💫 - Support for Hugging Face's SmolLM3-3B - Fully responsive design across all screen sizes - New layout for Model Providers. Update your Jan or download the latest.

I'm notorious for turning down 99% of the hundreds of requests I get every month to join calls (because I hate calls!). The Hugging Face team saw an opportunity and bullied me into accepting a Zoom call with users who upgrade to Pro. I only caved under one strict condition:

Jan v0.6.6 is out: Jan now runs fully on llama.cpp. - Cortex is gone, local models now run on Georgi Gerganov's llama.cpp - Toggle between llama.cpp builds - Hugging Face added as a model provider - Hub enhanced - Images from MCPs render inline in chat Update Jan or grab the latest.

Hugging Face 🤝 Jan You can now use Hugging Face as a remote model provider in Jan. Go to Settings -> Model Providers -> add your Hugging Face API key. Then open a new chat and pick a model from Hugging Face. Works with any Hugging Face model in Jan.

Student credits for gpt-oss With Hugging Face, we’re offering 500 students $50 in inference credits to explore gpt-oss. We hope these open models can help unlock new opportunities in class projects, research, fine-tuning, and more: tally.so/r/mKKdXX

Starting today, you can use Hugging Face Inference Providers directly in GitHub Copilot Chat in Visual Studio Code! 🔥 This means you can access frontier open-source LLMs like Qwen3-Coder, gpt-oss and GLM-4.5 directly in VS Code, powered by our world-class inference partners -

🚀 Scaleway is now officially listed as an Inference Provider on Hugging Face! This means developers can now select Scaleway directly on the Hugging Face Hub to run serverless inference requests, with full flexibility to use either Hugging Face routing or their own Scaleway API

Long-awaited feature has dropped! You can now edit GGUF metadata directly from Hugging Face, without having to download the model locally 🔥 Huge kudos to Mishig Davaadorj for implementing this! ❤️