Peter Hase

@peterbhase

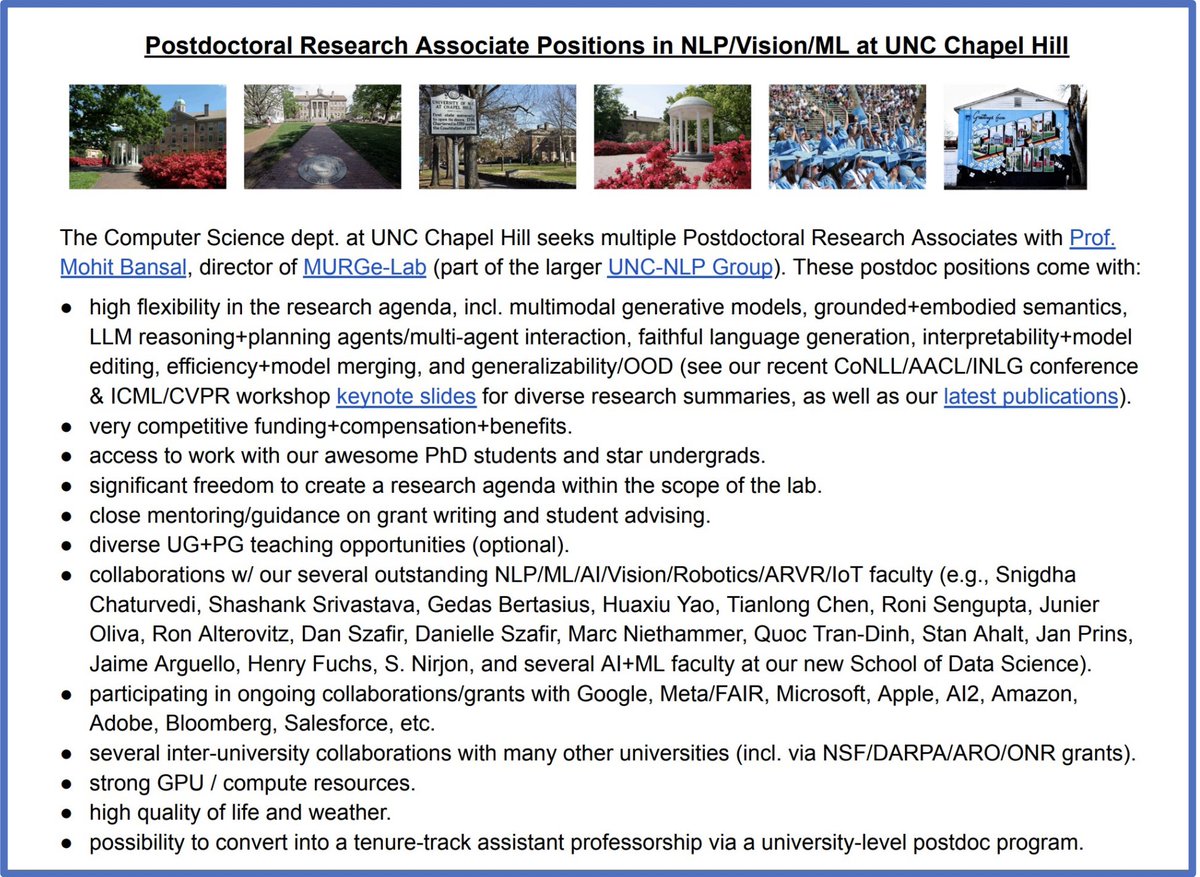

AI research @AnthropicAI. Interested in AI safety. PhD from UNC Chapel Hill (Google PhD Fellow). Previously: AI2, Google, Meta

ID: 1119252439050354688

Website: https://peterbhase.github.io/

Joined: 19-04-2019 14:52:30

399 Tweets

2.2K Followers

813 Following

Challenges of LLM fact-checking with atomic facts: 👉 Too little info? Atomic facts lack context 👉 Too much info? Risk losing error localization benefits We study this and present 🧬molecular facts🧬, which balance two key criteria: decontextuality and minimality. w/Greg Durrett

New letter from Geoffrey Hinton, Yoshua Bengio, Lawrence @Lessig, and Stuart Russell urging Gov. Newsom to sign SB 1047. “We believe SB 1047 is an important and reasonable first step towards ensuring that frontier AI systems are developed responsibly, so that we can all better

The Linear Representation Hypothesis is now widely adopted despite its highly restrictive nature. Here, Csordás Róbert, Atticus Geiger, Christopher Manning & I present a counterexample to the LRH and argue for more expressive theories of interpretability: arxiv.org/abs/2408.10920