Alvaro Somoza

@ozzygt

ML Engineer @HuggingFace

ID: 26274548

24-03-2009 17:01:53

86 Tweets

270 Followers

176 Following

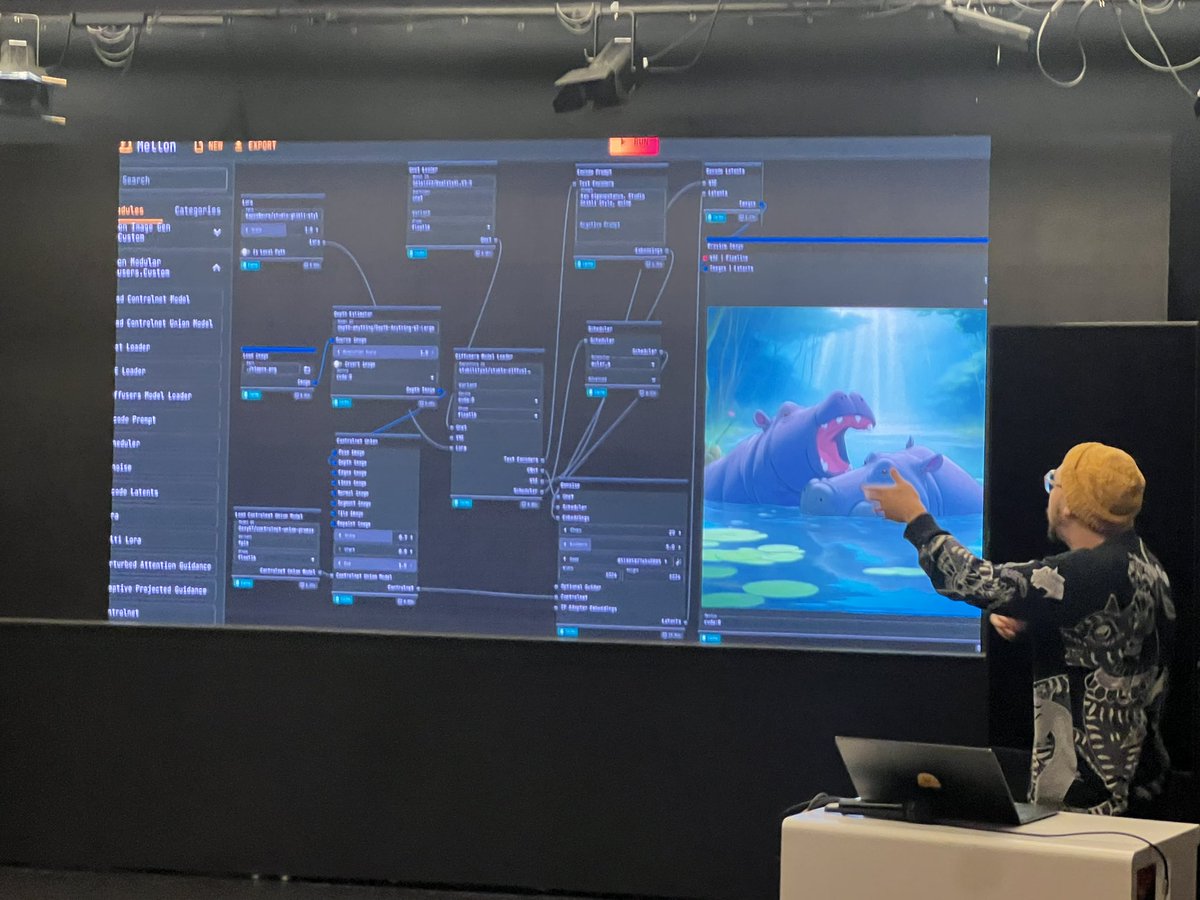

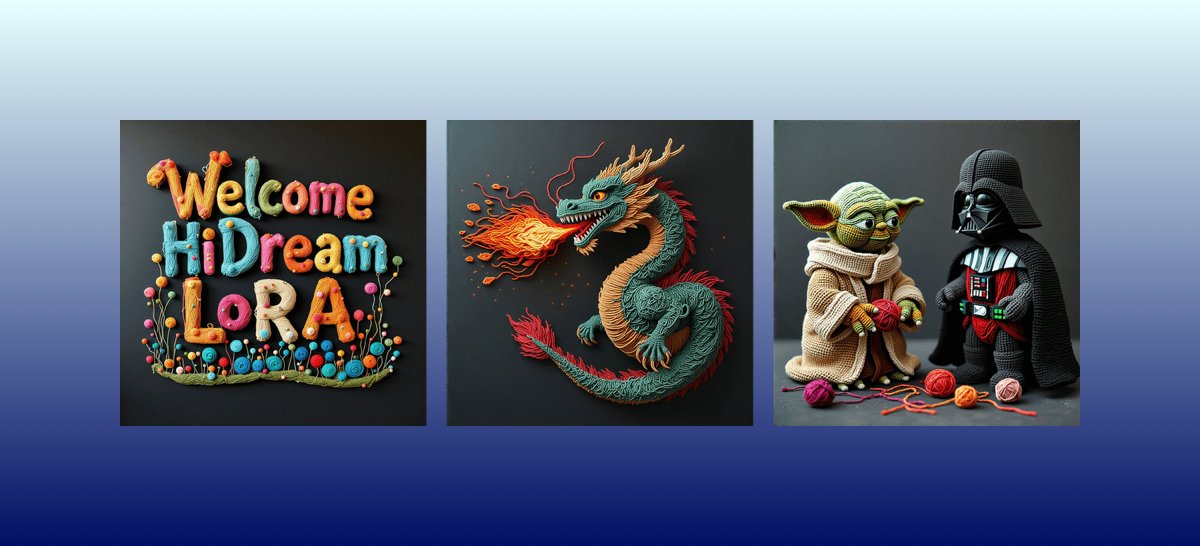

Hackathon today with Lightricks, sponsored by @FAL, Hugging Face and Google, with a Modular Diffusers/Mellon sneak peek by apolinario 🌐!