Gabriel Recchia

@mesotronium

Cognitive scientist, previously at @Cambridge_Uni's Winton Centre for Risk and Evidence Communication, now working on LLM capability evaluation & alignment

ID: 182045911

http://gabrielrecchia.com

23-08-2010 18:07:31

503 Tweets

269 Followers

322 Following

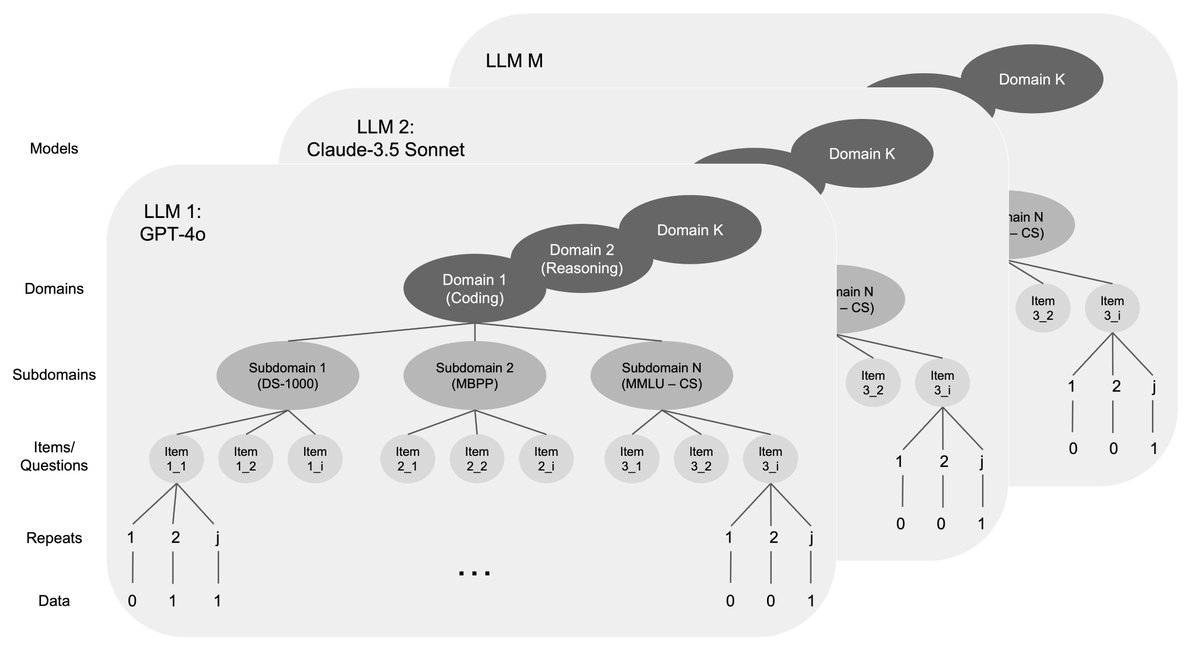

This has been accepted at ICML 2025! See you all in Vancouver. Credit to Tingchen Fu for leading this work, and to my wonderful collaborators mrinank 🍂, philip, Shay B. Cohen, and David Krueger!

This is an excellent and, I think, very important piece that I hope gets the attention it deserves within the AI safety community. Many congratulations to Josh Engels, David D. Baek, Subhash Kantamneni, and Max Tegmark!

Humans are often very wrong. This is a big problem if you want to use human judgment to oversee super-smart AI systems. In our new post, Geoffrey Irving argues that we might be able to deal with this issue – not by fixing the humans, but by redesigning oversight protocols.