Lysandre

@lysandrejik

Chief Open-Source Officer (COSO) at Hugging Face

ID: 1105862126432894976

13-03-2019 16:04:11

1.1K Tweets

8.8K Followers

603 Following

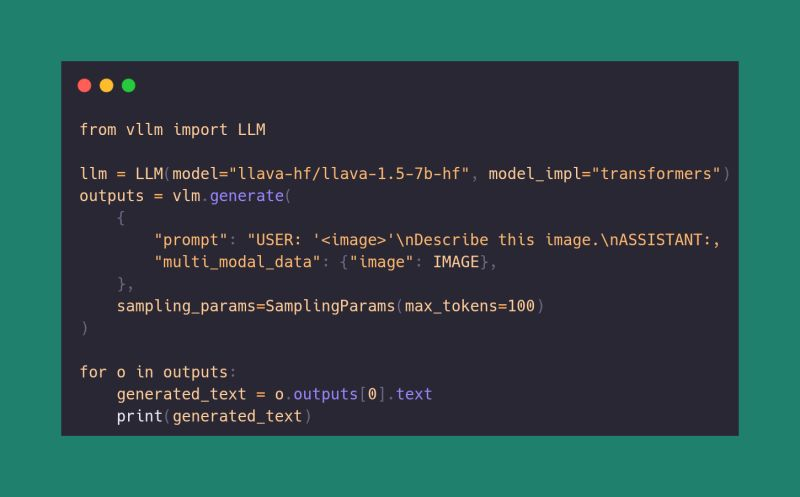

The Hugging Face Transformers ↔️ vLLM integration just leveled up: Vision-Language Models are now supported out of the box! If the model is integrated into Transformers, you can now run it directly with vLLM. github.com/vllm-project/v… Great work Raushan Turganbay 👏

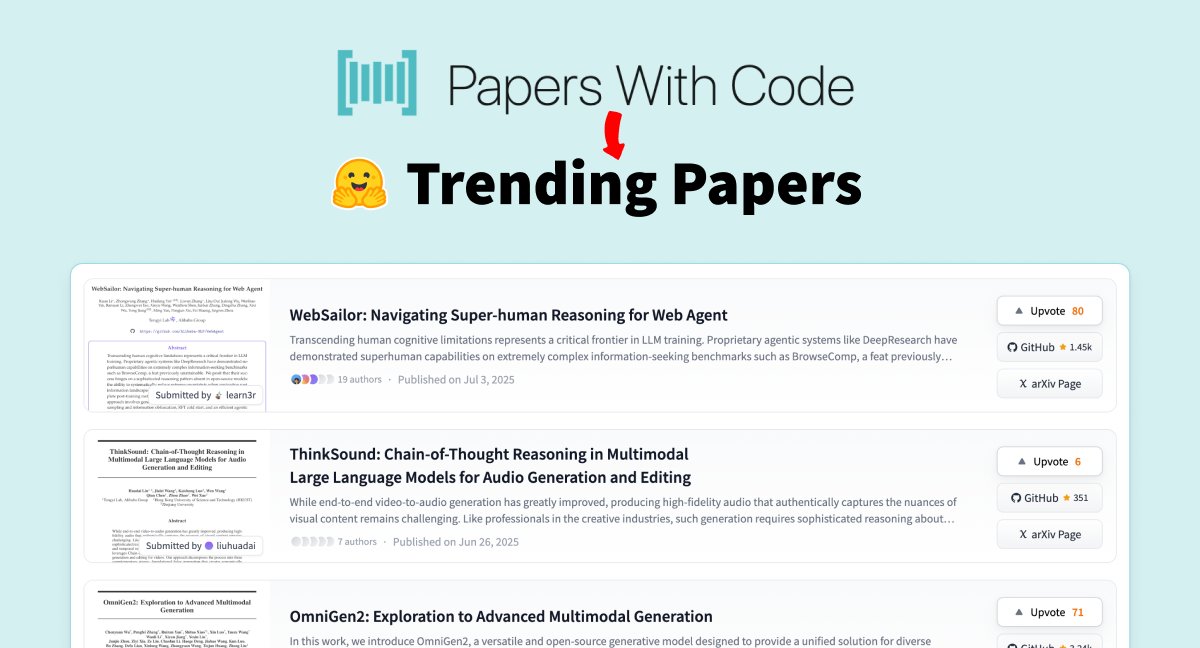

Hugging Face just dropped Trending Papers, partnering with AI at Meta and Papers with Code to build a successor to Papers with Code (which was sunset yesterday). HF Papers provides a new section for the community to follow trending papers, linked to their code implementations.

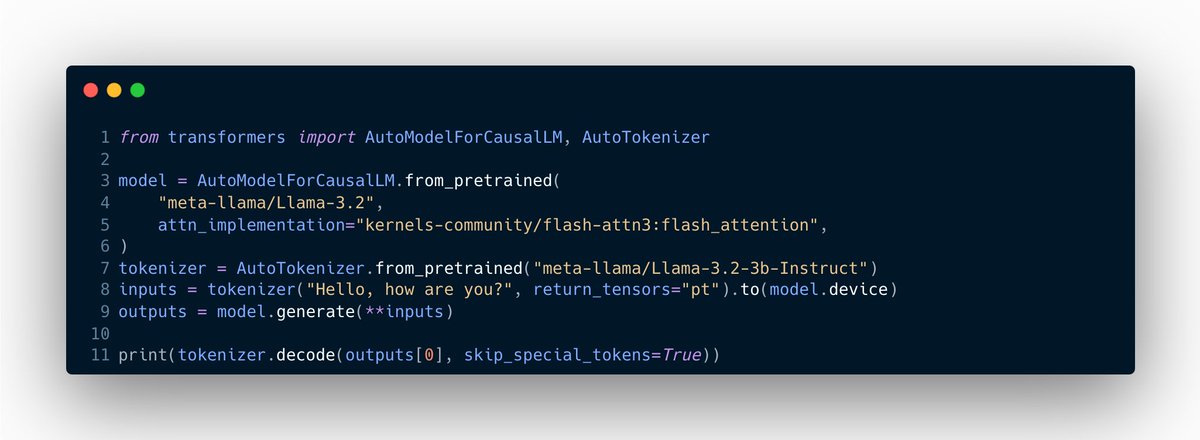

With the latest release, I want to make sure I get this message to the community: we are listening! At Hugging Face, we are very ambitious, and we want `transformers` to accelerate the ecosystem and enable all hardware / platforms! Let's build AGI together 🫣 Unbloat and Enable!

Hugging Face 🤝 Jan: You can now use Hugging Face as a remote model provider in Jan. Go to Settings -> Model Providers -> add your Hugging Face API key. Then open a new chat and pick a model from Hugging Face. This works in Jan with any model on Hugging Face.

When Sam Altman told me at the AI summit in Paris that they were serious about releasing open-source models & asked what would be useful, I couldn’t believe it. But six months of collaboration later, here it is: Welcome to OSS-GPT on Hugging Face! It comes in two sizes, for both

This is by far my favorite feature so far this year. Unlocks a lot of exciting possibilities for post-training larger models in the Hugging Face ecosystem such as improved multi-node and slurm support.