LIT AI Lab & ELLIS Unit Linz

@litailab

The LIT Lab is committed to scientific excellence. Our focus is on theoretical and experimental research in machine learning and artificial intelligence.

ID: 1198944544877924352

https://bit.ly/2DfsiRc 25-11-2019 12:41:26

208 Tweets

542 Followers

594 Following

Join the stream by our associated partner Chelonia Applied Science, speaking on how to scale business ideas.

... on #mission for #AI at Parlament Österreich 👏

Some news from our Ellis NederUnitLinz - LIT AI Lab & ELLIS Unit Linz, Ellis Neder, ELLIS Amsterdam, CambridgeEllisUnit, @ellislifehd, ELLIS Munich unit, EPFL ELLIS unit, ELLIS Unit Stuttgart, @ELLIS, Cuenta oficial de ELLIS Unit Madrid, ELLIS Unit Milan

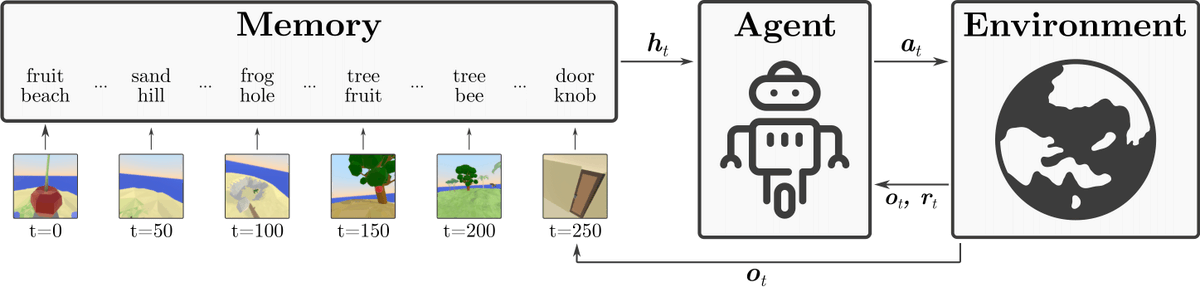

Excellent opportunity to do a PhD at the intersection of academia and industry! Apply now and join the https://bsky.app/profile/aichemist.bsky.social network ⬇️ #compchem #xai #chemtwitter

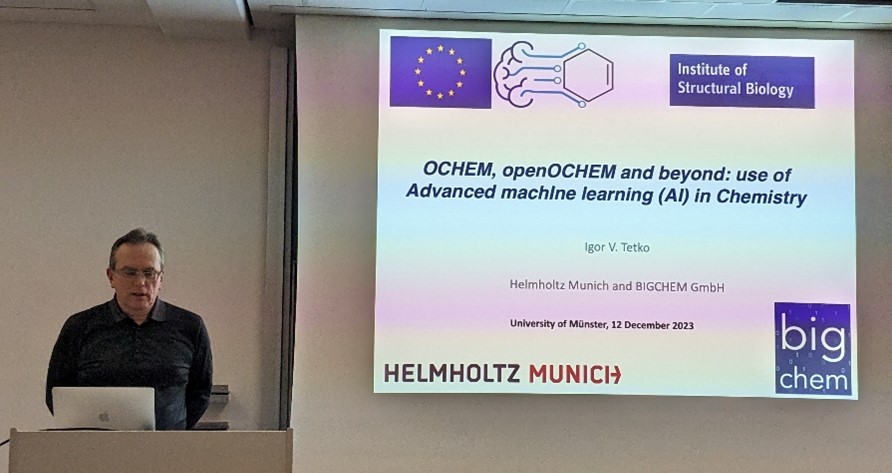

Slides of Igor Tetko's lecture at PharmaCampus, University of Münster (@PharmaCampus_MS), are available at ai-dd.eu/news (see the lecture overview at uni-muenster.de/Chemie.pz/phar…). Many thanks to Oliver Koch (Koch Group) and his group for their hospitality!