Lisa Dunlap

@lisabdunlap

messin around with model evals @berkeley_ai and @lmarena_ai

ID: 1453078457978552320

http://lisabdunlap.com

26-10-2021 19:18:12

355 Tweets

1.1K Followers

252 Following

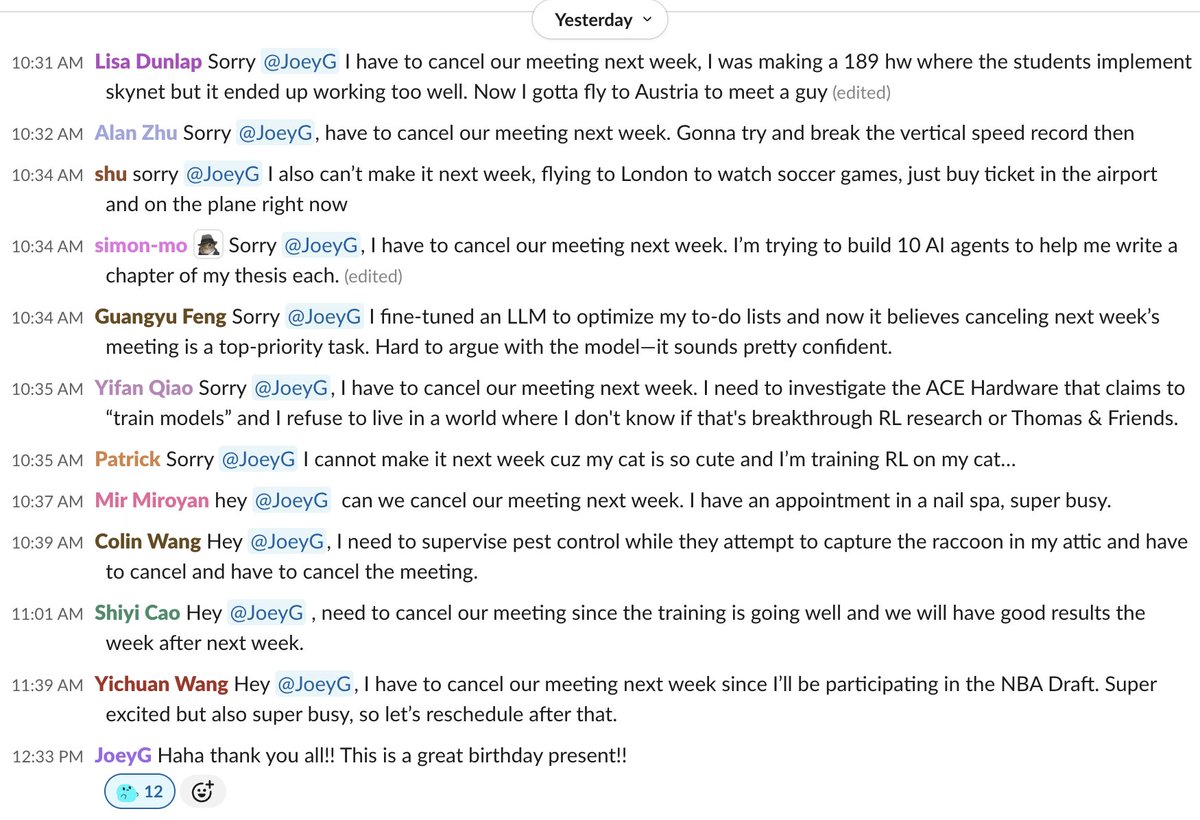

Don't know what to get your advisor for their birthday? Give them the best gift of all: their time back. Happy (late) birthday Joey Gonzalez :)

🔍 How do we teach an LLM to *master* a body of knowledge? In new work with AI at Meta, we propose Active Reading 📙: a way for models to teach themselves new things by self-studying their training data. Results:
* 66% on SimpleQA w/ an 8B model by studying the wikipedia

SGLang now supports deterministic LLM inference! Building on Thinking Machines' batch-invariant kernels, we integrated deterministic attention & sampling ops into a high-throughput engine, fully compatible with chunked prefill, CUDA graphs, radix cache, and non-greedy sampling. ✅