Leonardo Viana

@leonardovt18

Mechatronics Engineer and Deep Learning Researcher.

ID: 979358016381292546

29-03-2018 14:02:01

42 Tweets

19 Followers

78 Following

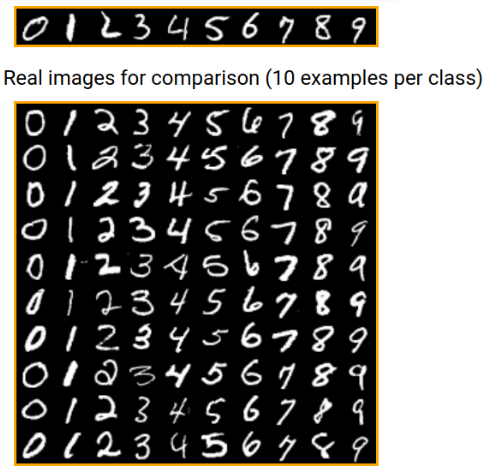

How quickly can we build a captcha reader using deep learning? Check out for yourself the Captcha cracker built with TensorFlow 2.0 and #Keras: colab.research.google.com/drive/16y14HuN…

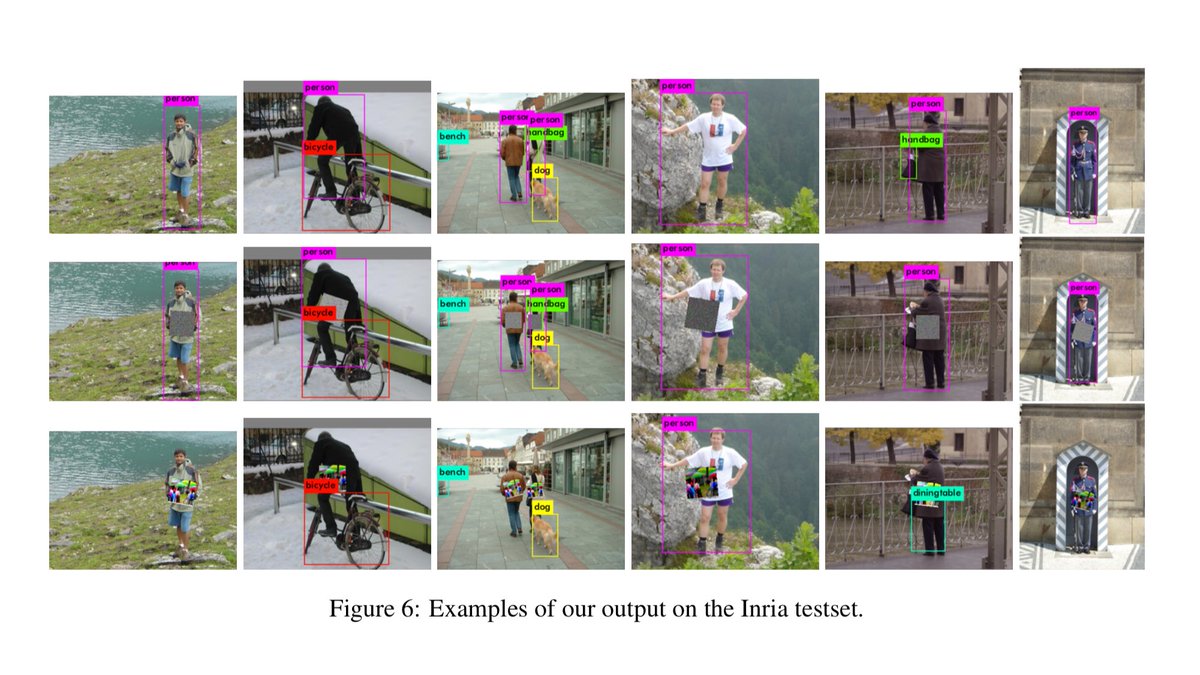

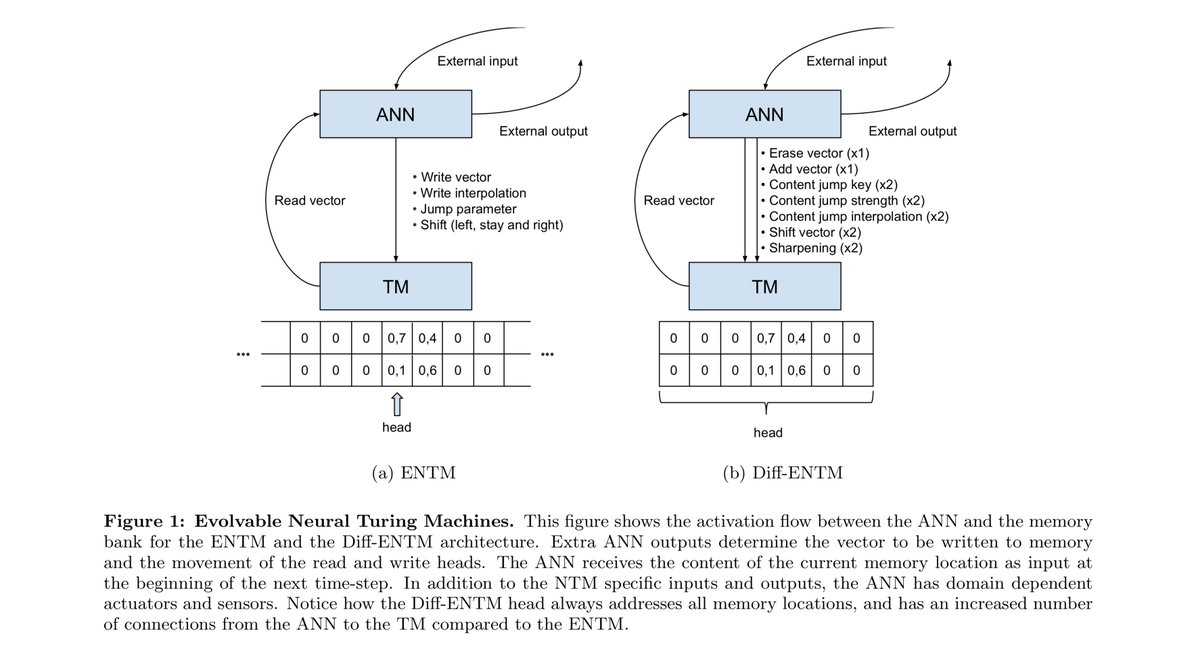

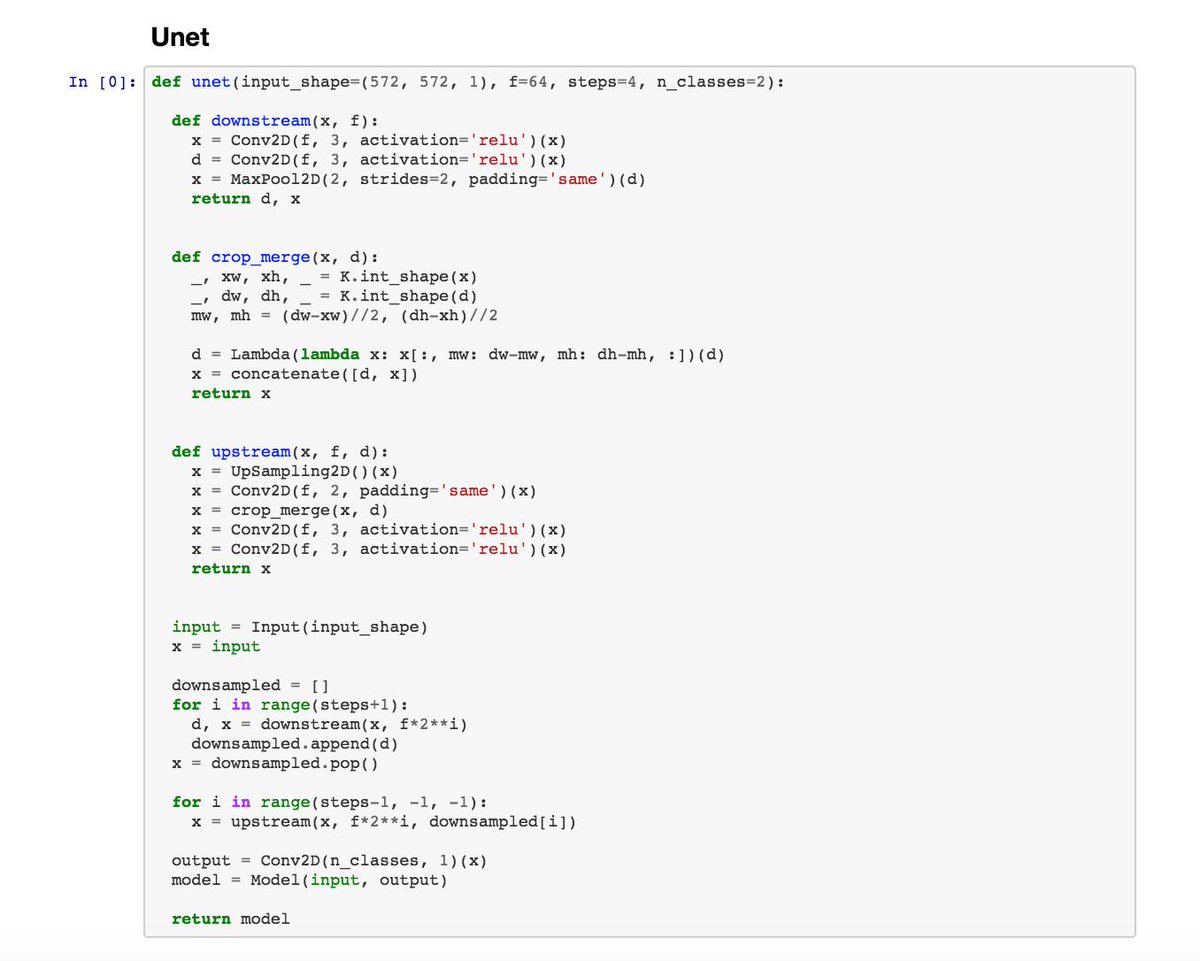

Super excited to be at DeepCon on June 8! We'll look at AlexNet, VGG, Inception, MobileNet, ShuffleNet, ResNet, DenseNet, Xception, U-Net, SqueezeNet, YOLO, and RefineNet. The workshop will be recorded, and you can find our code on GitHub (Part I: ConvNets.ipynb). Dimitris Katsios github.com/Machine-Learni…

Chapter 9 of "Deep Learning with JavaScript" was recently released to #MEAP. It covers the basics of generative deep learning (VAE, GAN, & RNN-based sequence generation) and how to train and serve such models in TensorFlow.js. Stan Bileschi François Chollet manning.com/books/deep-lea…

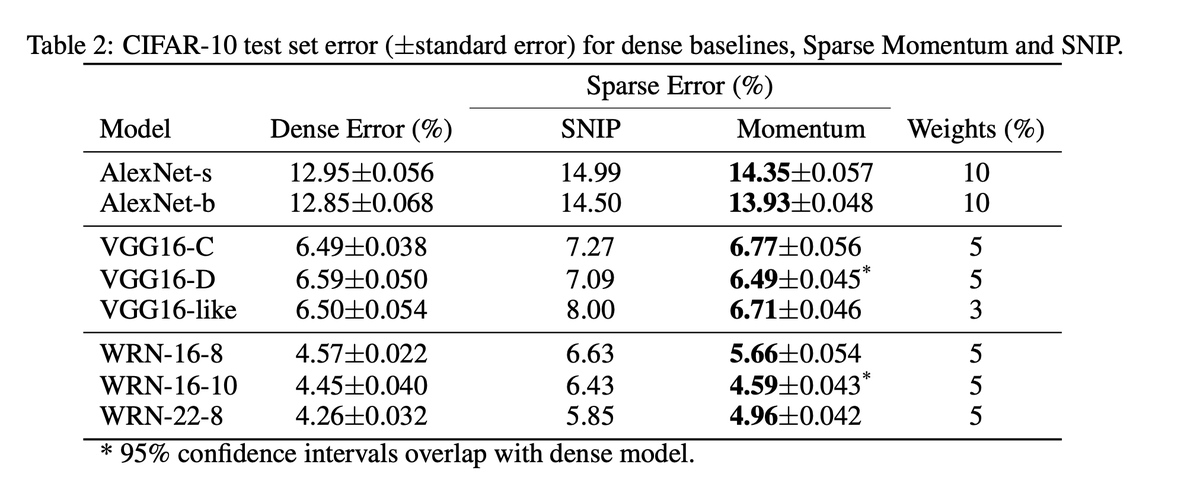

"Sparse Networks from Scratch: Faster Training without Losing Performance" by Tim Dettmers. Finds "winning lottery tickets": sparse configurations with 20% of the weights and similar performance. SoTA on MNIST, CIFAR-10, and ImageNet-2012 among sparse methods. arxiv.org/abs/1907.04840