Kaizhao Liang

@kyleliang5

@UTCompSci @IllinoisCDS

ID: 1070931144512823296

https://kyleliang919.github.io

07-12-2018 06:40:56

3.3K Tweets

598 Followers

67 Following

Might as well inject some verification prompts into the official LaTeX template… NeurIPS Conference: if it's been meddled with, then desk reject.

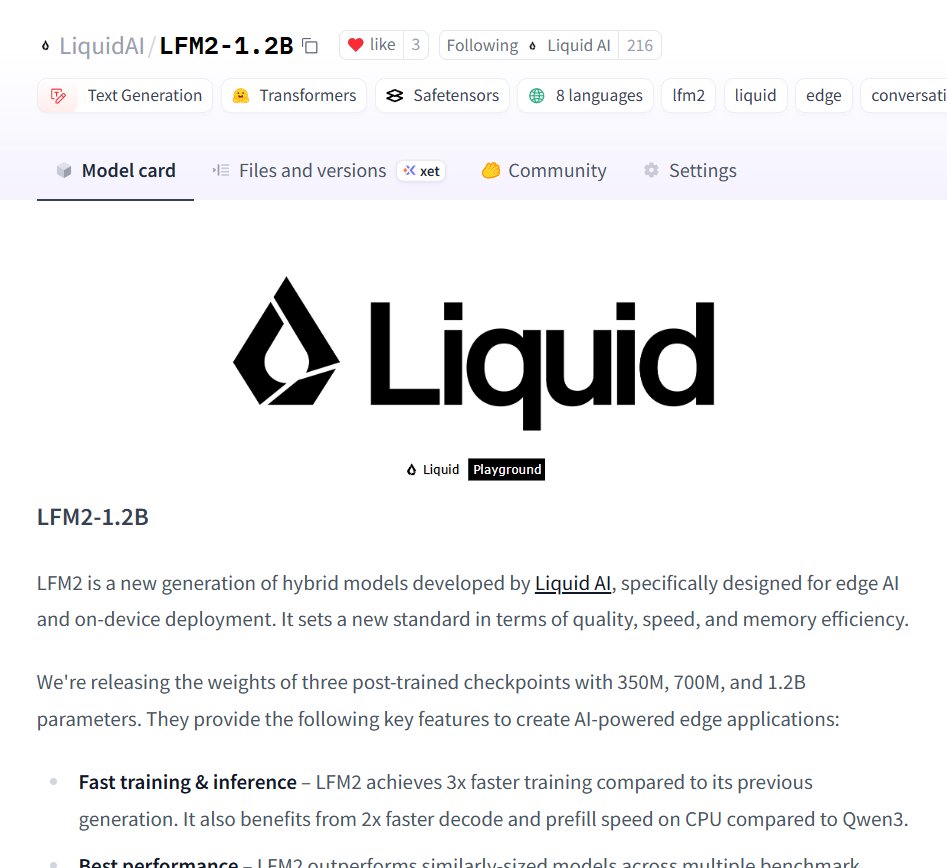

Liquid AI open-sources a new generation of edge LLMs! 🥳 I'm so happy to contribute to the open-source community with this release on Hugging Face! LFM2 is a new architecture that combines best-in-class inference speed and quality into 350M, 700M, and 1.2B models.

Congrats! Great work! This is huge, and this model is trained end to end with Muon! Pinging some Muon friends in case you missed it: Jeremy Bernstein, Seunghyun Seo

We are creating a multi-agent AI software company @xAI, where @Grok spawns hundreds of specialized coding and image/video generation/understanding agents all working together and then emulates humans interacting with the software in virtual machines until the result is excellent.