Josh Lipe-Melton

@joshlipemelton

ID: 422825765

27-11-2011 18:52:24

59 Tweets

79 Followers

275 Following

An organization is its people. DM me or email me at [email protected]

New blog post, co-authored by Dan Pechi and Arnav Singhvi, on DSPy, a recent #llm ops innovation led by researchers from Databricks co-founder Matei Zaharia's lab.

Databricks to acquire Tabular, a data platform from the original creators of Apache Iceberg (Tabular is now part of Databricks). Together, we will bring format compatibility to the lakehouse for Delta Lake and Apache Iceberg: databricks.com/blog/databrick…

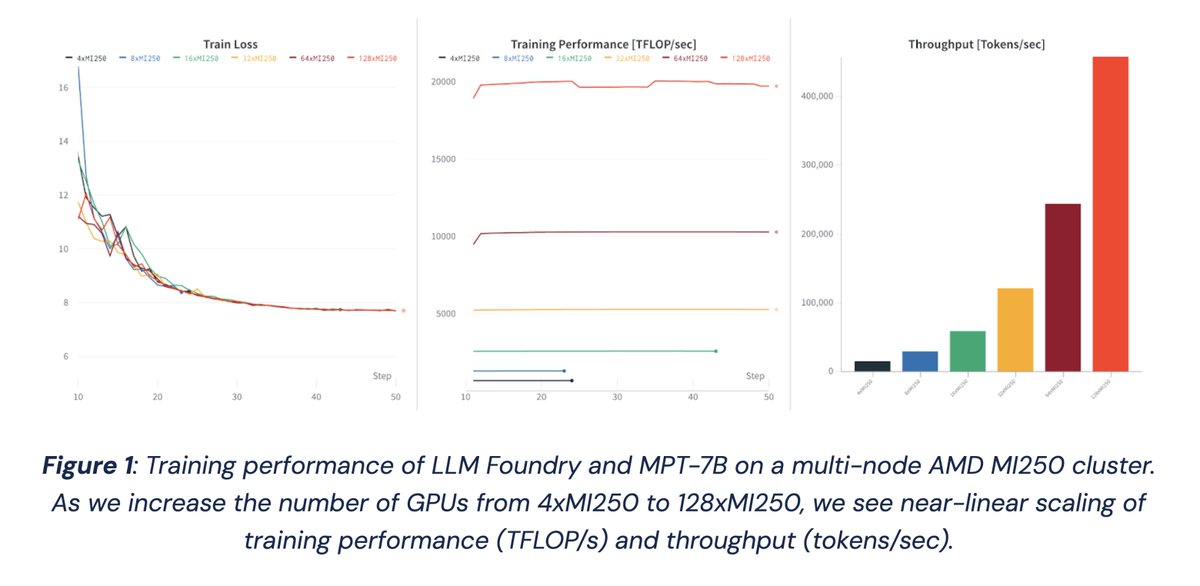

Lynx is a new hallucination detection model for #LLMs that is especially suited to real-world applications in industries like healthcare and fintech. PatronusAI trained Lynx on Databricks Mosaic AI using Composer, our open-source, PyTorch-based training library.