Jonathan Heek

@jonathanheek

ID: 1162013081834332160

15-08-2019 14:48:02

12 Tweets

319 Followers

5 Following

If diffusion models are so great, why do they require modifications to work well? Like latent diffusion and super-resolution diffusion? Introducing "simple diffusion": a single straightforward diffusion model for high-res images (arxiv.org/abs/2301.11093). w/ Jonathan Heek, Tim Salimans

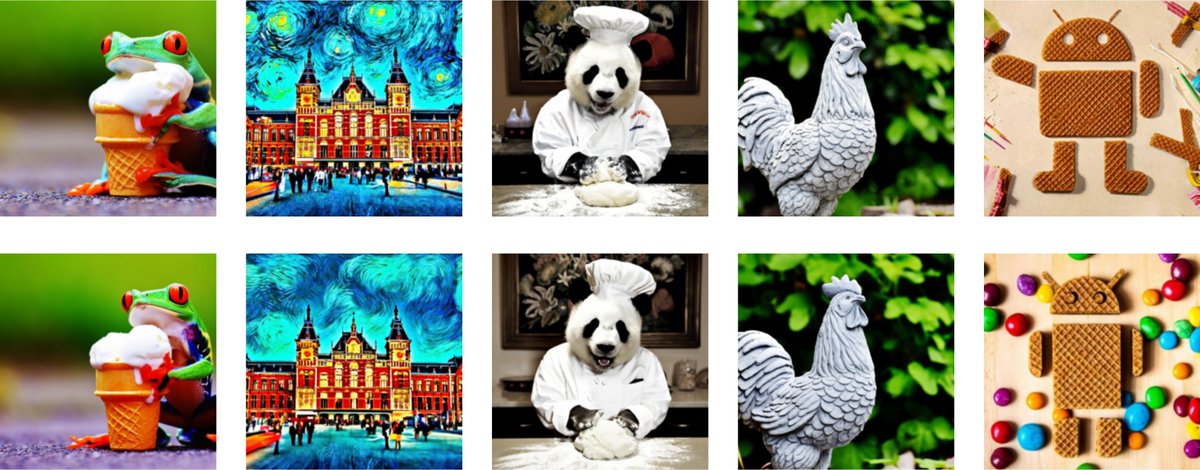

Fast sampling with 'Multistep Consistency Models': We get 1.6 FID on ImageNet64 in 4 steps and scale text-to-image models, generating 256x256 images with 16 steps. Guess which row is distilled? With Emiel Hoogeboom, Tim Salimans. arXiv: arxiv.org/abs/2403.06807

We have a new distillation method that actually *improves* upon its teacher. Moment Matching distillation (arxiv.org/abs/2406.04103) creates fast stochastic samplers by matching data expectations between teacher and student. Work with Emiel Hoogeboom, Jonathan Heek, @tejmensin. 1/4

🚀 Interested in time series generation? ⏲️ Excited to share my Google DeepMind Amsterdam student researcher project: Rolling Diffusion Models! arxiv.org/abs/2402.09470 (to appear at ICML 2024). Thanks for the great collaboration, Emiel Hoogeboom, Jonathan Heek, Tim Salimans! 🧵1/4

Is pixel diffusion passé? In 'Simpler Diffusion' (arxiv.org/abs/2410.19324), we achieve 1.5 FID on ImageNet512, and SOTA on 128x128 and 256x256. We ablated out a lot of complexity, making it truly 'simpler'. w/ @tejmensink, Jonathan Heek, Kay Lamerigts, Ruiqi Gao, Tim Salimans