Jeremy Howard

@jeremyphoward

🇦🇺 Co-founder: @AnswerDotAI & @FastDotAI;

Prev: professor @ UQ; Stanford fellow; @kaggle president; @fastmail/@enlitic/etc founder

jeremy.fast.ai

ID: 175282603

http://answer.ai

06-08-2010 04:58:18

61K Tweets

247K Followers

5K Following

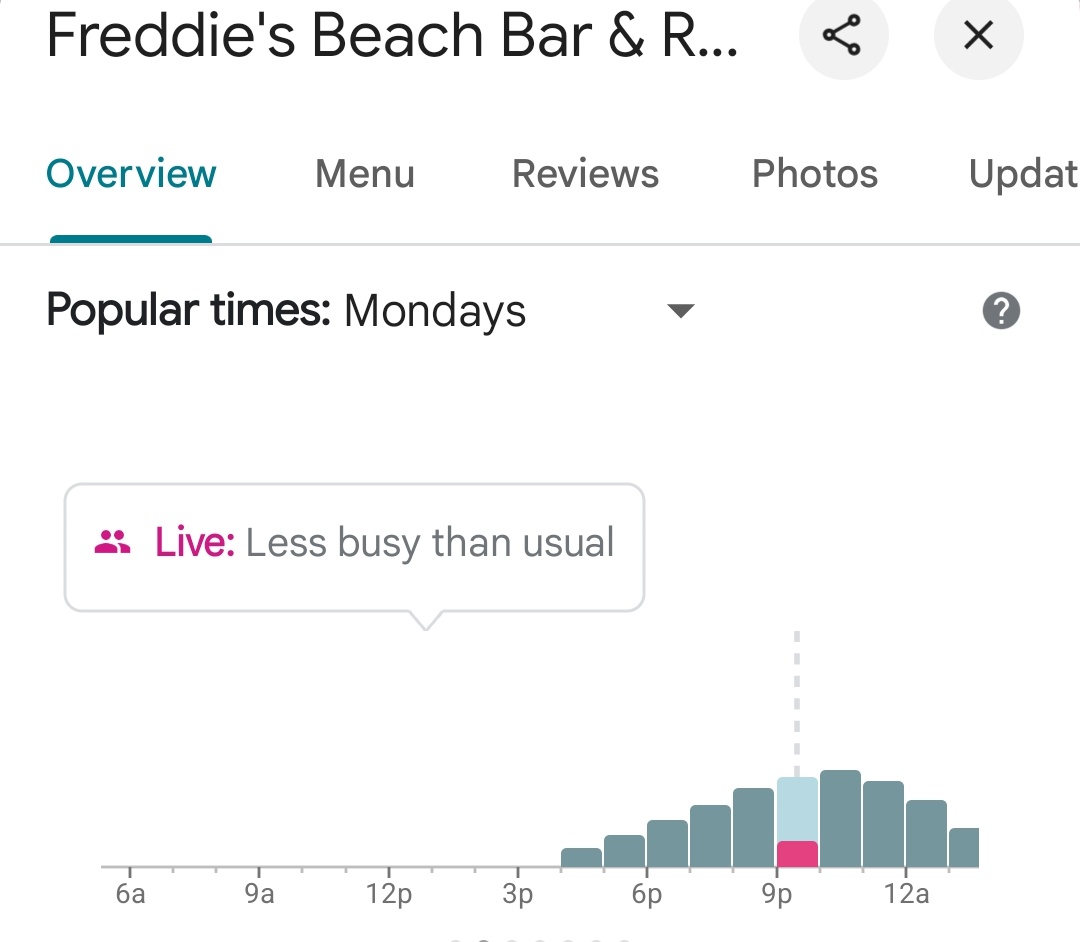

100% web servers should be gzipping the JS on the way out & it should be cached forever afterwards. Minification destroys debuggability & the (underappreciated) #ViewSource affordance: htmx.org/essays/right-c… /cc Cory Doctorow NONCONSENSUAL BLUE TICK
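Here is a minimal sketch of the serving setup the tweet argues for: readable, unminified JS, gzipped on the way out and cached for a long time. It assumes a Python backend. `build_js_response` is a hypothetical helper, not part of any framework. "Cached forever" is approximated by a one-year `max-age` plus `immutable`, and that only works if JS files are served under content-hashed URLs, so that a changed file gets a new URL.

```python
import gzip

# One year in seconds: the conventional "cache forever" max-age value.
ONE_YEAR = 365 * 24 * 60 * 60


def build_js_response(source: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Hypothetical helper: prepare unminified JS for sending to a client.

    Gzips the body when the client advertises gzip support, and marks the
    response as cacheable for a year without revalidation. Assumes the file
    is served under a content-hashed URL (e.g. app.3f9a1c.js).
    """
    headers = {
        "Content-Type": "text/javascript; charset=utf-8",
        # Long-lived caching; "immutable" tells browsers not to revalidate.
        "Cache-Control": f"public, max-age={ONE_YEAR}, immutable",
        # Shared caches must store gzip and uncompressed variants separately.
        "Vary": "Accept-Encoding",
    }
    # Simplified negotiation: any "gzip" token counts as acceptance (q-values ignored).
    encodings = [part.split(";")[0].strip().lower() for part in accept_encoding.split(",")]
    if "gzip" in encodings:
        body = gzip.compress(source)
        headers["Content-Encoding"] = "gzip"
    else:
        body = source
    headers["Content-Length"] = str(len(body))
    return body, headers
```

The source stays unminified, so View Source and browser devtools show the original code. The transfer-size savings come from gzip, and repeat downloads are avoided by the cache headers. A production server would normally do this in its own config (or precompress files) rather than in application code.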