Huck Yang 🇸🇬 ICLR 2025

@huckiyang

Sr. Research Scientist @NVIDIAAI Generative Voice Correction | Ph.D. MSc @GeorgiaTech | Past: @GoogleAI @AmazonScience | 🗣️ education

ID: 3268584816

https://huckiyang.github.io 05-07-2015 02:48:54

418 Tweets

764 Followers

684 Following

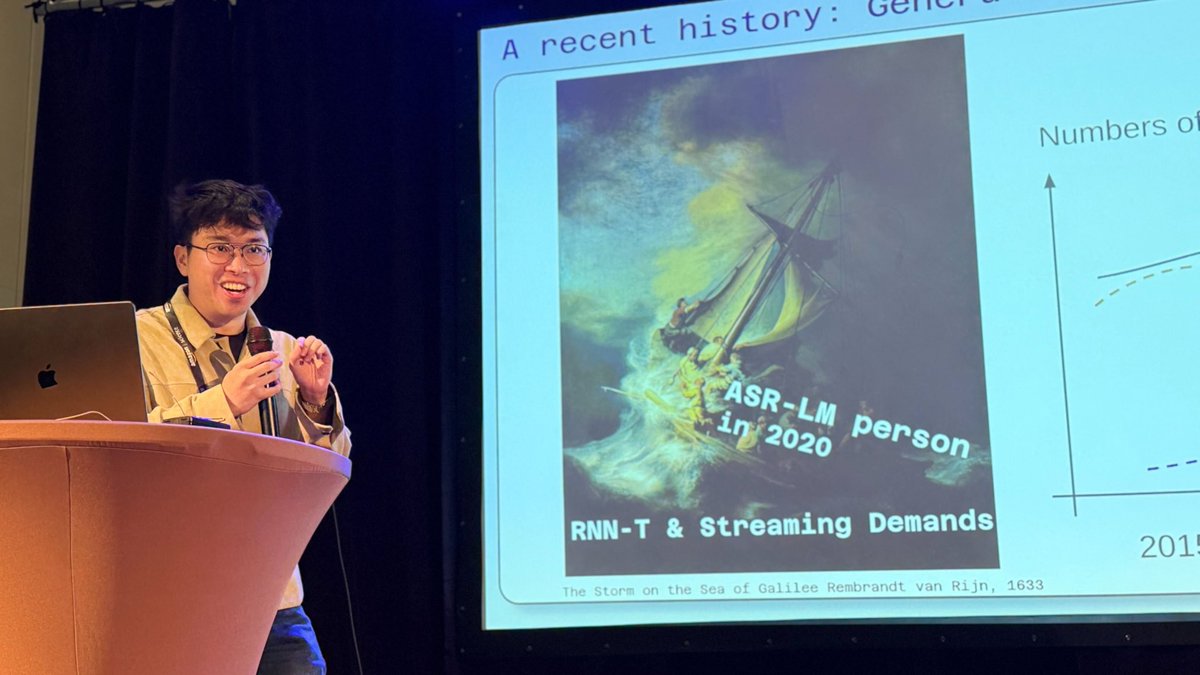

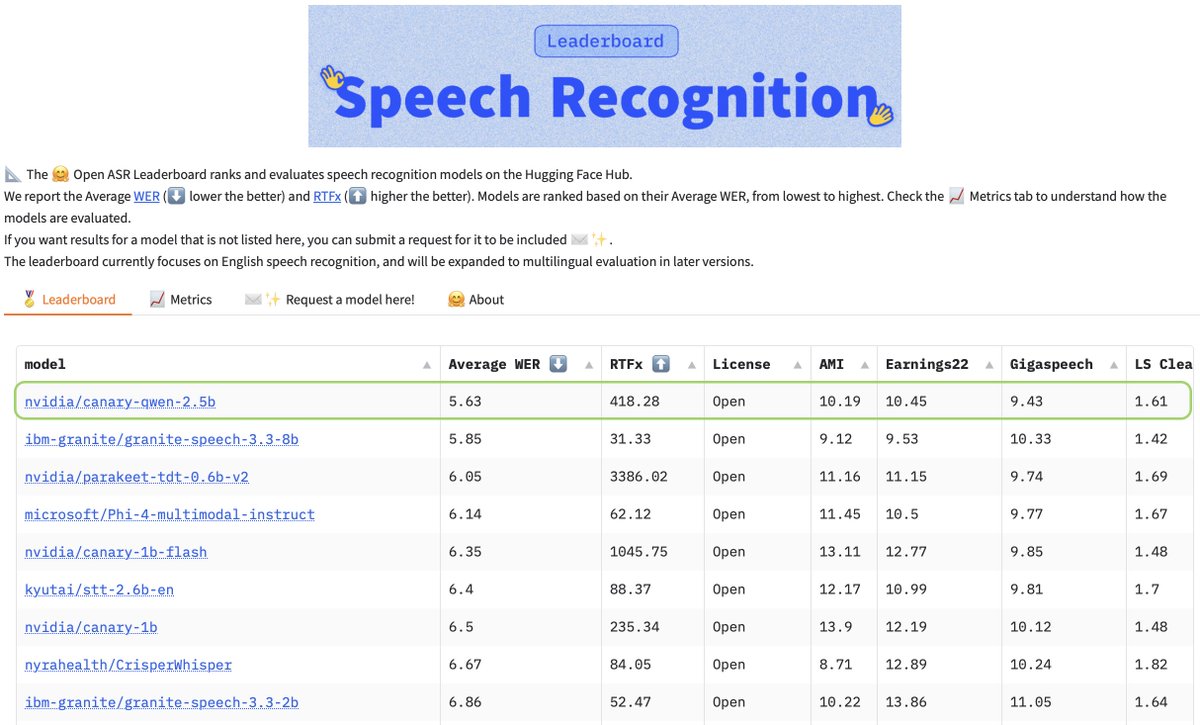

NeKo (ネコ) aims to be your pet model to work with ASR/AST/OCR NVIDIA AI Developer Yenting Lin et al. - back in 2020, conformer-transducer was dominant; people were not very interested in working on ASR-LM (i.e., internal LM of ASR / contextual biasing were popular) appreciate Andreas

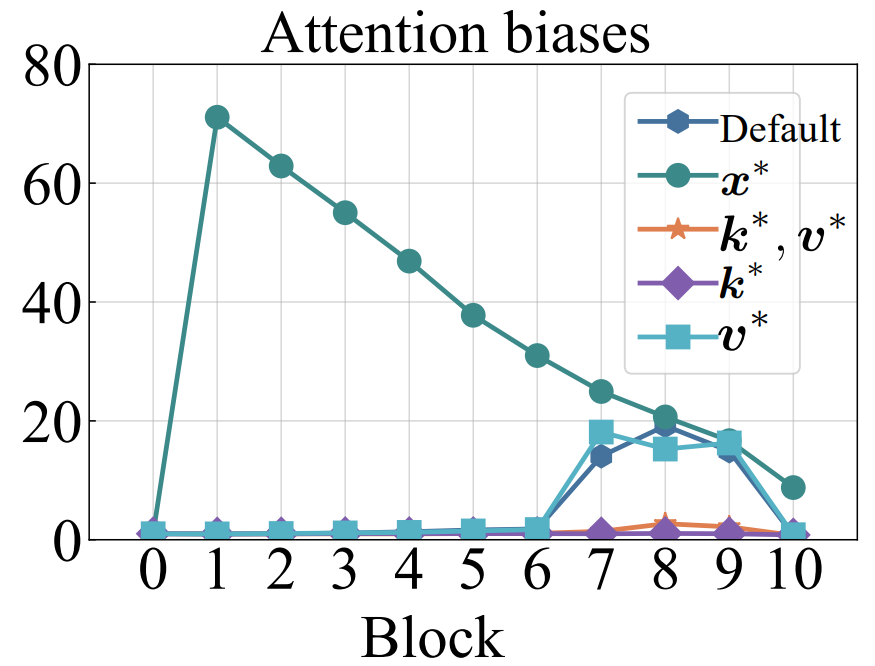

I noticed that OpenAI added learnable bias to attention logits before softmax. After softmax, they deleted the bias. This is similar to what I have done in my ICLR2025 paper: openreview.net/forum?id=78Nn4…. I used learnable key bias and set corresponding value bias zero. In this way,
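The equivalence hinted at above can be illustrated with a minimal sketch. This is not OpenAI's code or the paper's implementation; the function names (`attend_with_sink_logit`, `attend_with_zero_value_slot`) and the scalar `sink_logit` are hypothetical, and the sketch assumes the learnable bias acts as one extra logit per query. Under that assumption, dropping the bias's probability after softmax gives the same output as keeping an extra key slot whose value vector is all zeros.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a plain list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend_with_sink_logit(scores, values, sink_logit):
    """Append a bias logit before softmax, then drop its probability."""
    probs = softmax(scores + [sink_logit])
    kept = probs[:-1]  # discard the bias's share after softmax
    dim = len(values[0])
    return [sum(p * v[d] for p, v in zip(kept, values)) for d in range(dim)]

def attend_with_zero_value_slot(scores, values, sink_logit):
    """Treat the bias as an extra key slot whose value vector is all zeros."""
    dim = len(values[0])
    probs = softmax(scores + [sink_logit])
    padded = values + [[0.0] * dim]  # the extra slot contributes nothing
    return [sum(p * v[d] for p, v in zip(probs, padded)) for d in range(dim)]

# Toy inputs (assumed for illustration): one query, three keys, 2-d values.
scores = [0.5, -1.0, 2.0]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
out_a = attend_with_sink_logit(scores, values, sink_logit=1.5)
out_b = attend_with_zero_value_slot(scores, values, sink_logit=1.5)
kept_mass = sum(softmax(scores + [1.5])[:-1])
```

In both variants the attention weights over the real tokens sum to less than 1 (`kept_mass` here), so a head can put part of its probability mass on the bias slot instead of on a real token.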

3. OpusLM: Unified Speech-Language Models (led by Jinchuan Tian (田晋川)) Open family of speech language models scaled up to 7B. Poster — Wed, 8:30–10:30 | Foyer 3.2 arxiv.org/abs/2506.17611 Grateful to all collaborators who made this possible!

Nicely wrapped up the “beyond end2end 🗣️ ASR: integrating long context acoustics & linguistics” tutorial w/ Shinji Watanabe, Taejin Park (NVIDIA), and Kyu Han (Oracle) at INTERSPEECH 2025. Happy to cover the semantics parts during my hotel coding vibe 🤣 | 📖 slides: docs.google.com/presentation/d…