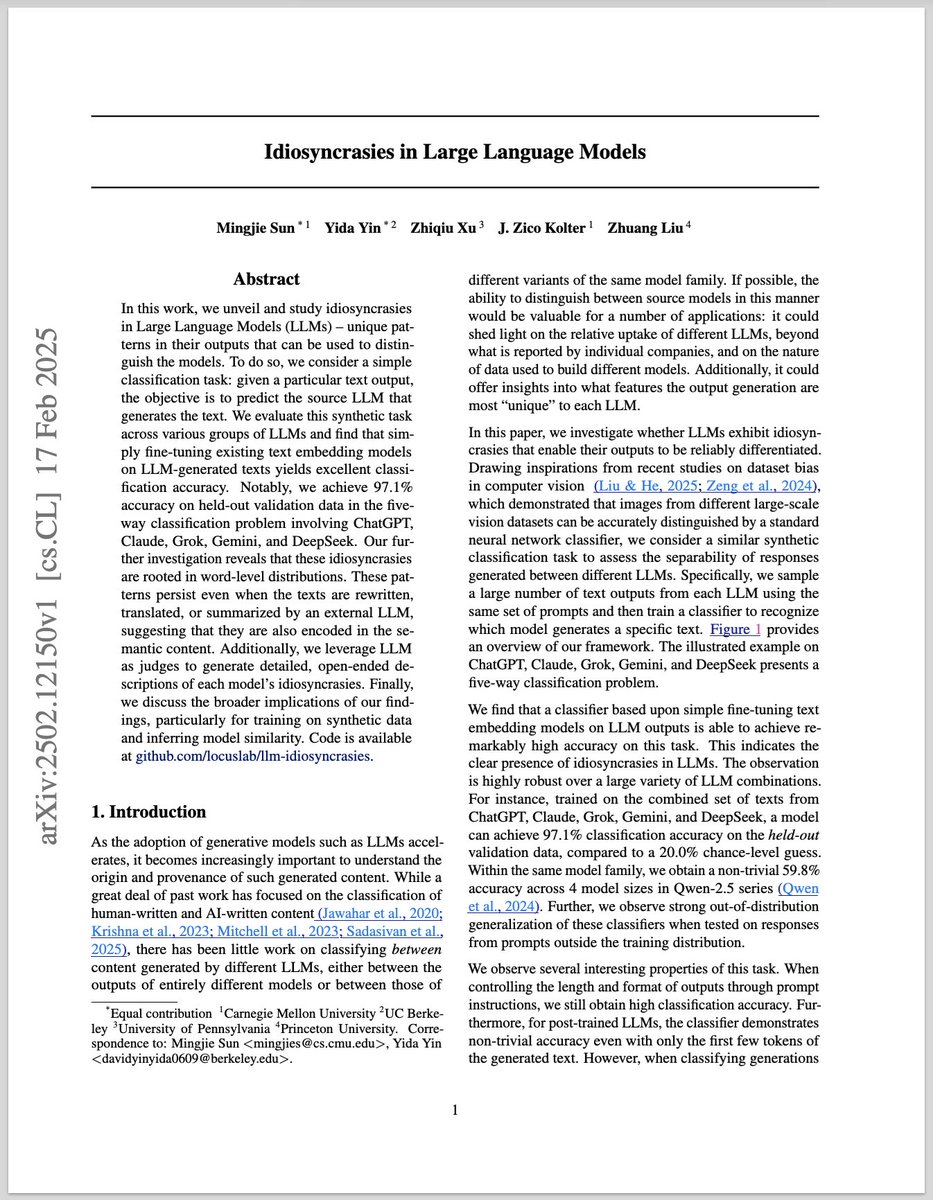

Yunzhen Feng

@feeelix_feng

PhD at CDS, NYU. Ex-Intern at FAIR @AIatMeta. Previously undergrad at @PKU1898

ID: 1523345547565879298

08-05-2022 16:54:28

87 Tweets

326 Followers

588 Following

Check out our poster tmr at 10am at the ICLR Bidirectional Human-AI Alignment workshop! We cover how on-policy preference sampling can be biased and our optimal response sampling for human labeling. NYU Center for Data Science, AI at Meta, Julia Kempe, Yaqi Duan x.com/feeelix_feng/s…