Caner Hazirbas

@drhazirbas

Research Scientist @MetaAI

ID: 1283545242

http://hazirbas.com 20-03-2013 15:29:44

1.1K Tweets

293 Followers

345 Following

🤩Lowkey Goated When #PrivacyConscious Is The Vibe👌 Check out this groundbreaking paper from Caner Hazirbas, Cristian Canton et al. that uses Full-Body Person Synthesis to de-identify pedestrian datasets! deepai.org/publication/da…

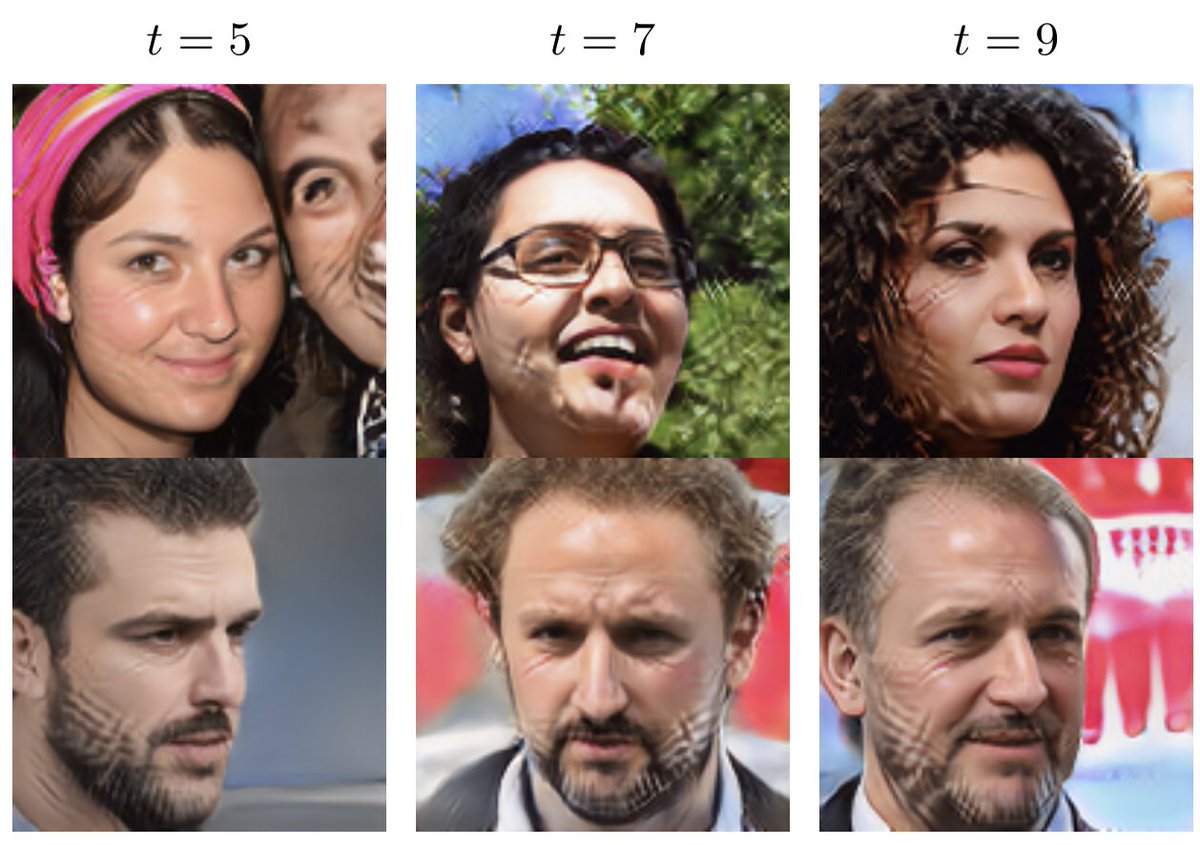

Cool paper from my friends at Rice. They look at what happens when you train generative models on their own outputs…over and over again. Image models survive 5 iterations before weird stuff happens. arxiv.org/abs/2307.01850 Credit: Sina Alemohammad, Imtiaz Humayun, @richbaraniuk

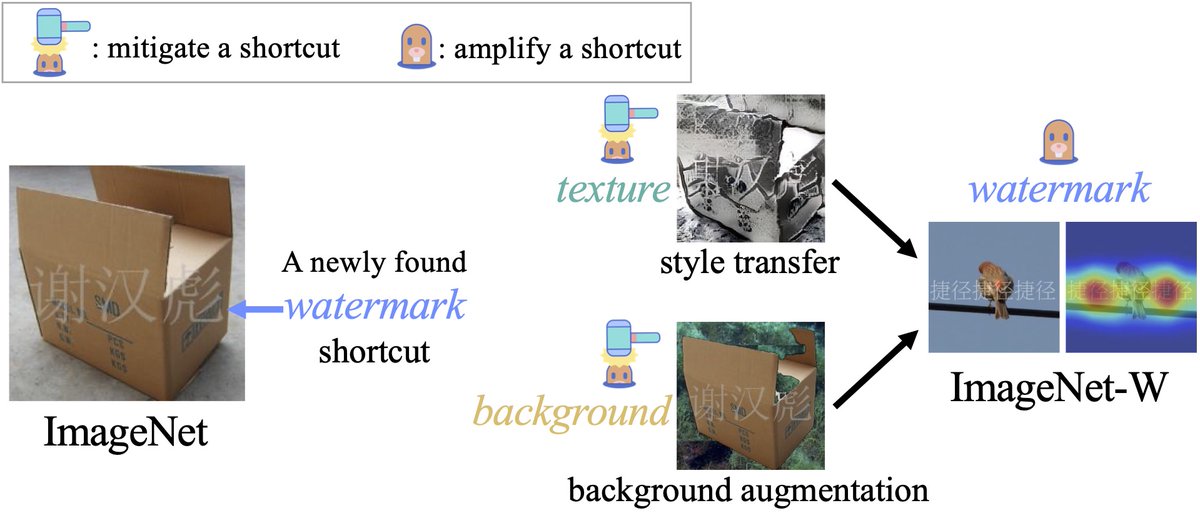

Rita Cucchiara Lorenzo Baraldi Tal Hassner Mario Fritz Georgia Gkioxari Jitendra MALIK Olga Russakovsky Caner Hazirbas delivering a keynote on datasets and robustness featuring several of the contributions from the Responsible AI team at AI at Meta #ICCV2023

Excited to share our #NeurIPS2023 Spotlight paper using factor annotations to explain model mistakes across geographies / incomes (including our release of new annotations for DollarStreet!) Led by Laura Gustafson, with Melissa Hall Caner Hazirbas Diane Bouchacourt Mark Ibrahim.

We find vision language models are 4-13x more likely to harmfully classify individuals with darker skin tones, a bias not addressed by progress on standard vision benchmarks or model scale. arxiv.org/abs/2402.07329 Alicia Sun Mark Ibrahim #responsibleai #computervision

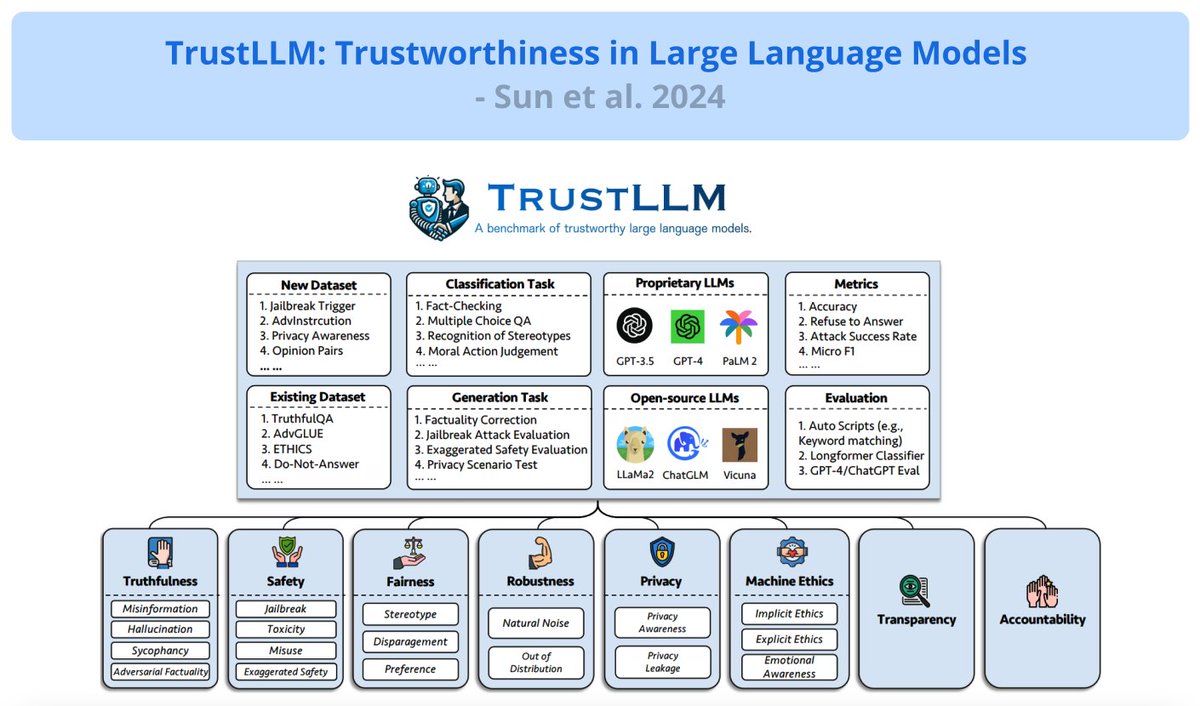

One-stop-shop for evaluating VLMs. Let us know what you think! Haider Al-Tahan, Quentin Garrido, Diane Bouchacourt, Mark Ibrahim