Colfax International

@colfaxintl

HPC & AI Solutions (colfax-intl.com) | Research (research.colfax-intl.com) | Colfax Experience Center (experience.colfax-intl.com)

ID: 21418950

https://colfax-intl.com 20-02-2009 18:17:08

650 Tweets

964 Followers

8 Following

Introducing FlashAttention-3 🚀 Fast and Accurate Attention with Asynchrony and Low-precision. Thank you to Colfax International, AI at Meta, NVIDIA AI and Together AI for the collaboration here 🙌 Read more in our blog: hubs.la/Q02Gf4hf0

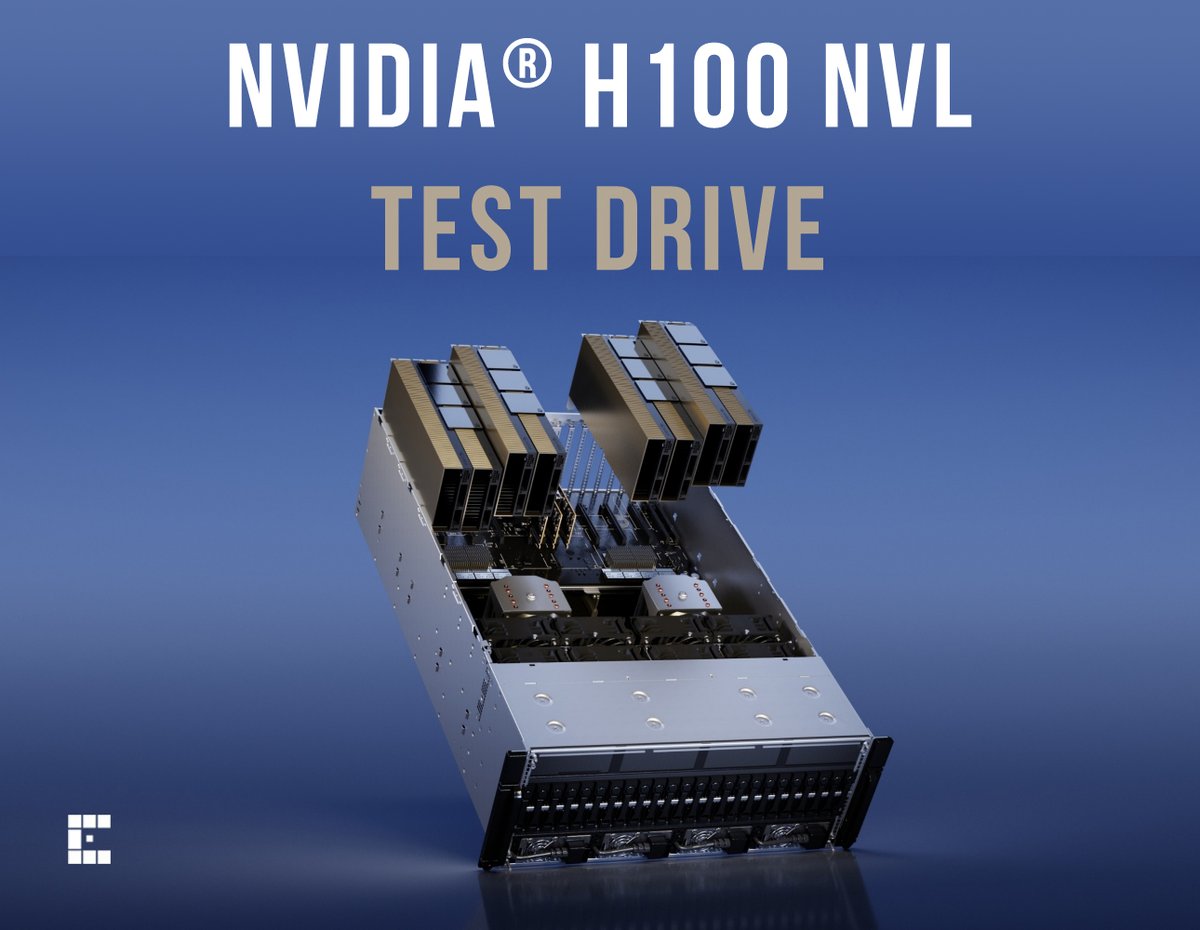

FlashAttention-3 is released! Over the last few months, I got the opportunity to collaborate on this amazing effort to implement FA-2 from scratch for H100 with Tri Dao, Colfax International, Meta, and Pradeep Ramani. We can get up to 760 TFLOP/s on head dim 128 forward pass!

This project is a collab with Jay Shah & Ganesh Bikshandi (Colfax International), Ying Zhang (@meta), @DROP_ALL_TABLES and Pradeep Ramani (NVIDIA). Huge thanks to the CUTLASS team, cuDNN team, Together AI, and Princeton PLI for their support! 9/9

We are thrilled to release FlashAttention-3 in partnership with Meta, NVIDIA, Princeton University, and Colfax International. The improvements from FlashAttention-3 will result in: • More efficient GPU utilization - up to 75% from 35%. • Better performance with lower precision while

Cosmin Negruseri Tri Dao I am still learning it. Just like nobody knows all C++, nobody knows CUDA. For resources, I highly recommend the series of tutorials using CUTLASS and CUTE by Colfax International. I am obviously not biased 🤣

Check out our newest CUDA tutorial: research.colfax-intl.com/cutlass-tutori… The topic is software pipelining: overlapping memory copies with compute to hide latency. We present the concept in the context of GEMM (matmul), but it's applicable everywhere, e.g., FlashAttention-2 and 3. Conceptually
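The pipelining idea in the post above can be sketched in a few lines of plain Python. This is only a structural illustration, not the tutorial's CUTLASS code: `load_tiles`, `compute_tile`, and `pipelined_dot` are hypothetical names, a single worker thread stands in for the asynchronous copy engine, and a tiled dot product stands in for the GEMM main loop. Python threads will not give a real speedup here; the point is the ordering of "issue next copy, then compute current tile".

```python
from concurrent.futures import ThreadPoolExecutor


def load_tiles(a, b, start, tile):
    """Hypothetical stand-in for an asynchronous copy of one tile per operand."""
    return a[start:start + tile], b[start:start + tile]


def compute_tile(a_tile, b_tile):
    """Hypothetical stand-in for the math on one tile: a partial dot product."""
    return sum(x * y for x, y in zip(a_tile, b_tile))


def pipelined_dot(a, b, tile=4):
    """Dot product over tiles, copying tile k+1 while tile k is computed."""
    if len(a) != len(b):
        raise ValueError("operands must have the same length")
    starts = list(range(0, len(a), tile))
    if not starts:
        return 0
    total = 0
    # One worker thread plays the role of the copy engine (an assumption
    # made for illustration; on a GPU this would be an async copy).
    with ThreadPoolExecutor(max_workers=1) as copier:
        # Prologue: issue the first copy before any compute starts.
        pending = copier.submit(load_tiles, a, b, starts[0], tile)
        for k in range(len(starts)):
            a_tile, b_tile = pending.result()  # wait until tile k has arrived
            if k + 1 < len(starts):
                # Issue the copy for tile k+1 so it can overlap the compute below.
                pending = copier.submit(load_tiles, a, b, starts[k + 1], tile)
            total += compute_tile(a_tile, b_tile)
    return total
```

Calling `pipelined_dot([1, 2, 3], [4, 5, 6], tile=2)` walks two tiles and returns the same value as an unpipelined dot product; only the order in which copies are issued changes.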

research.colfax-intl.com/epilogue_visit… A chess game typically has 3 phases: opening, middle game, and endgame. A GEMM (matmul) kernel typically has: prologue, main loop, and epilogue. Just like chess grandmasters must master endgames, GEMM programmers must master epilogue techniques to write
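To make the three phases named above concrete, here is a minimal pure-Python sketch of a GEMM split into prologue, main loop, and epilogue. It is an assumption-laden illustration rather than the article's code: `gemm_with_epilogue` is a hypothetical name, and the fused bias-add plus ReLU is just one commonly used epilogue chosen for the example.

```python
def gemm_with_epilogue(A, B, bias, tile_k=2):
    """Compute relu(A @ B + bias) with the three phases made explicit.

    A is M x K, B is K x N, bias has length N (all plain nested lists).
    """
    M, K, N = len(A), len(B), len(B[0])

    # Prologue: set up the accumulators before any math happens.
    acc = [[0.0] * N for _ in range(M)]

    # Main loop: march over the K dimension one tile at a time.
    for k0 in range(0, K, tile_k):
        for i in range(M):
            for j in range(N):
                for k in range(k0, min(k0 + tile_k, K)):
                    acc[i][j] += A[i][k] * B[k][j]

    # Epilogue: post-process the accumulators on the way out.
    # Bias-add and ReLU are illustrative choices, applied here in one pass
    # instead of as separate passes over the output.
    return [[max(acc[i][j] + bias[j], 0.0) for j in range(N)] for i in range(M)]
```

Fusing the post-processing into the epilogue means the output matrix is written once, instead of being written by the GEMM and then re-read by a separate elementwise step.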

In this blog post, Jay Shah, Research Scientist at Colfax International, collaborated with Character.AI to explain two techniques (INT8 quantization and query head packing for MQA/GQA) that are important for using FlashAttention-3 for inference: research.character.ai/optimizing-ai-…
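As a rough illustration of the two techniques named above, the sketch below shows a symmetric per-tensor INT8 quantizer and a simple regrouping of query heads by shared KV head. It is not taken from the blog post: the function names are hypothetical, the per-tensor scaling scheme is one simple choice among several, and the packing assumes a single query token per head (decode) with query head `h` mapped to KV head `h // group`.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: returns (integer codes, scale)."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = amax / 127.0 if amax > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric INT8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def pack_query_heads(q_heads, num_kv_heads):
    """Group query heads that share a KV head into one multi-row tile.

    q_heads: list of per-head query vectors for a single token.
    Assumes query head h uses KV head h // group (an assumed layout).
    """
    num_q_heads = len(q_heads)
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly among KV heads")
    group = num_q_heads // num_kv_heads
    return [q_heads[g * group:(g + 1) * group] for g in range(num_kv_heads)]
```

With packing, each KV head sees a tile of `group` query rows instead of a single row, so the same loaded keys and values serve several query heads at once.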