Scott Condron

@_scottcondron

Helping build AI/ML dev tools at @weights_biases. I post about machine learning, data visualisation, software tools.

ID: 982132042845401088

https://www.scottcondron.com/ 06-04-2018 05:45:00

2.2K Tweets

5.5K Followers

1.1K Following

Shawn Lewis: W&B Weave, Weights & Biases, I have to say kudos on this. I added a bunch of weave.ops around the code to debug a perf issue in my GRPO rollouts, and I got flamegraphs!! It's also great for inspecting all the env interactions.

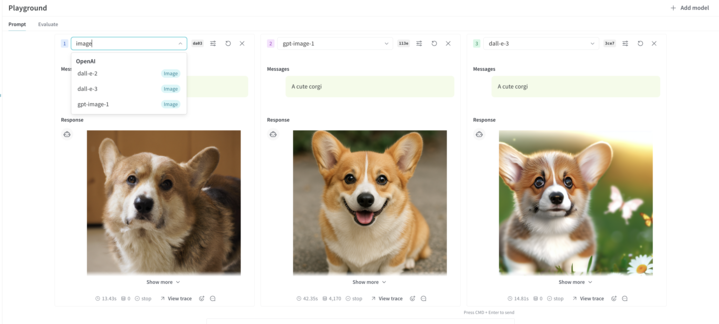

A Princeton University lab built its agent evaluation harness (HAL) using W&B Weave. The lab uses Weave to automatically track, monitor, and unify telemetry across different LLM providers and frameworks for consistent, in-depth evaluation.