Amanpreet Singh

@apsdehal

CTO @ContextualAI. Past: @huggingface and @MetaAI.

ID: 103540992

https://apsdehal.in 10-01-2010 10:48:02

618 Tweets

5.5K Followers

641 Following

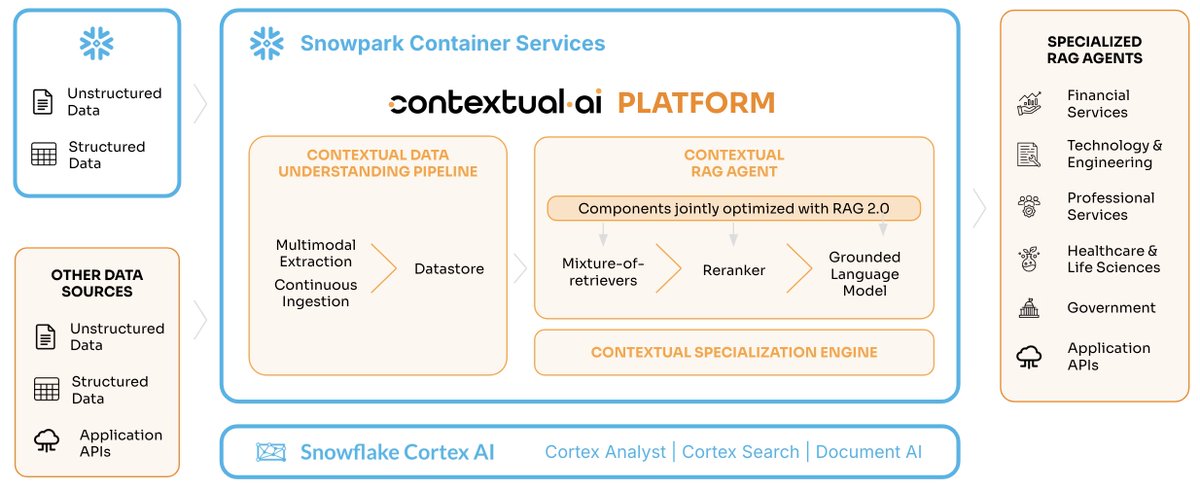

❄️ The Contextual AI Platform is now available as a Native app on SnowflakeDB Marketplace! Build specialized RAG agents directly within your Snowflake environment—no data movement required. 🧵

Life update: got a PhD, got an O1 visa, moved to San Francisco to work on agents at Contextual AI. I’m incredibly grateful to everyone who helped me along the way. Can’t wait to see what’s next. 🚀 I especially want to thank some key mentors that made all this possible: Thomas

Thrilled to share that Contextual AI has been named to Fast Company’s 2025 Most Innovative Companies list for Applied AI! A huge thank you to our team, customers, and partners for being part of this journey. We're so honored to be recognized for our innovative approach to

Strong BCV portfolio company representation in Fast Company's Most Innovative Companies 2025 list. Congrats and keep building!

Your agent is as good as the data you train it on. Check out how our data engine under Bertie Vidgen leads to high-quality enterprise data today.

Go behind the scenes with Contextual AI CTO Amanpreet Singh as he breaks down the future of enterprise AI with Daniel Darling. You'll learn about grounded LLMs, specialized AI agents, and what’s next for AI in the enterprise—read the full article 👇 dub.sh/NHryEMB