Athul Paul Jacob

@apjacob03

@MIT_CSAIL @UWaterloo | Co-created AIs: CICERO, Diplodocus | Ex: @google, @generalcatalyst, FAIR @MetaAI, @MSFTResearch AI, @MITIBMLab, @Mila_Quebec

ID: 373885410

15-09-2011 10:27:31

356 Tweets

1.1K Followers

481 Following

Thank you to the NeurIPS Conference for this recognition of the Generative Adversarial Nets paper published ten years ago with my colleagues Ian Goodfellow, Jean Pouget-Abadie, @memimo, Bing Xu, David Warde-Farley 🇺🇦, Sherjil Ozair and Aaron Courville.

The (true) story of the development of, and inspiration behind, the "attention" operator, the one in "Attention Is All You Need" that introduced the Transformer. From personal email correspondence with the author 🇺🇦 Dzmitry Bahdanau @ NeurIPS ~2 years ago, published here and now (with permission) following

Is your CS dept worried about what academic research should be in the age of LLMs? Hire one of my lab members! Leshem Choshen, Pratyusha Sharma and Ekin Akyürek are all on the job market with unique perspectives on the future of NLP: 🧵

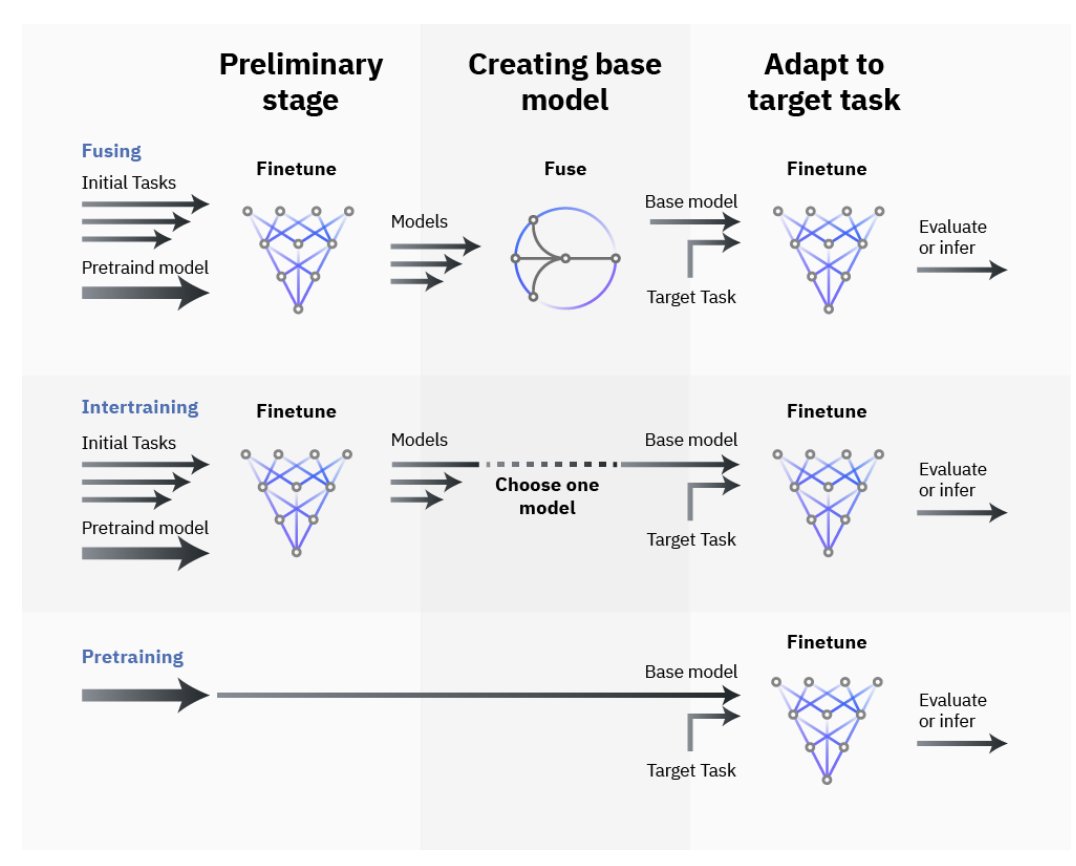

Leshem Choshen is developing the technical and social infrastructure needed to support communal training of large-scale ML systems. Before MIT, he was one of the inventors of "model merging" techniques; these days he's thinking about collecting & learning from user feedback.

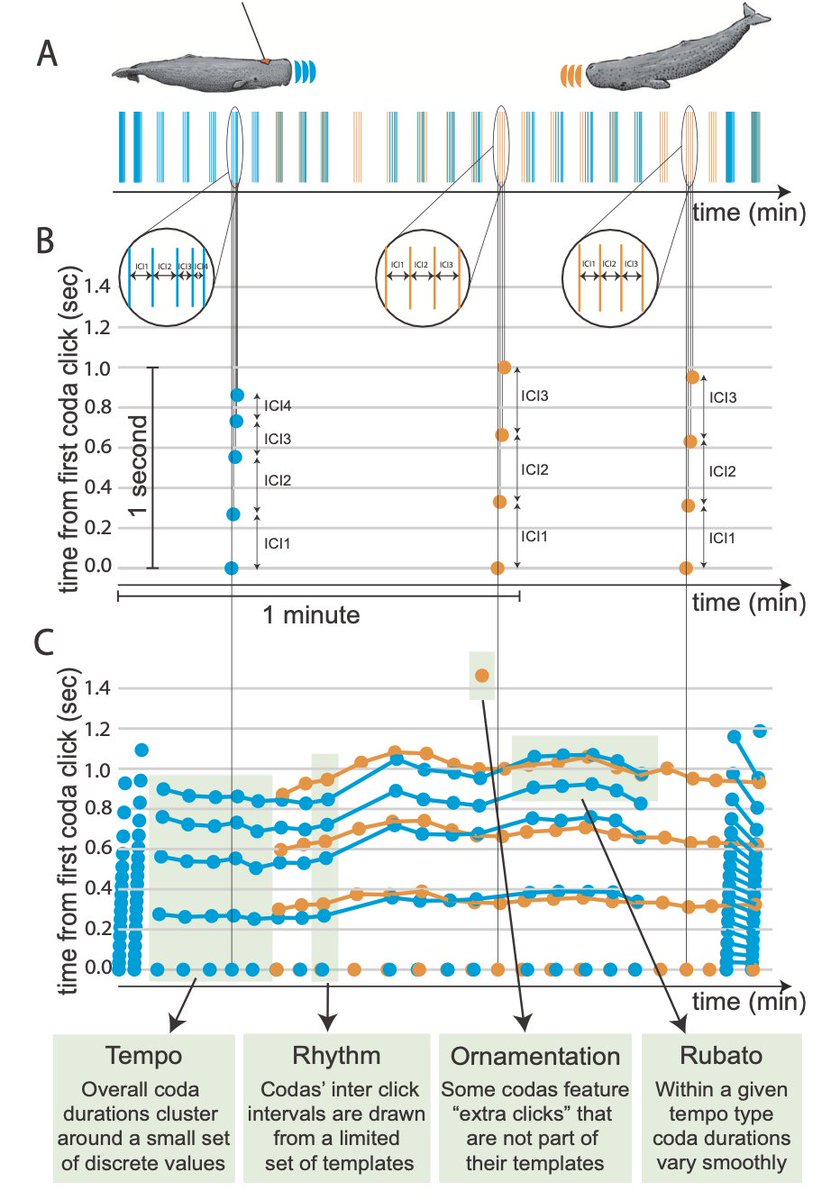

Pratyusha Sharma studies how language shapes learned representations in artificial and biological intelligence. Her discoveries have shaped our understanding of systems as diverse as LLMs and sperm whales.

Ekin Akyürek builds tools for understanding & controlling the algorithms that underlie reasoning in language models. You've likely seen his work on in-context learning; I'm just as excited about his past work on linguistic generalization & future work on test-time scaling.

There are so many cool things that can be built today with RL. It was great catching up with Nathan Lambert and talking about RL scaling and self-driving.

You've got a couple of GPUs and a desire to build a self-driving car from scratch. How can you turn those GPUs and self-play training into an incredible-looking agent? Come to W-821 at 4:30 in B2-B3 to learn from Marco Cusumano-Towner, Taylor W. Killian and Ozan Sener.