Anish

@anishhacko

AI and Neuro

ID: 359346904

https://anish.bearblog.dev/ 21-08-2011 12:35:05

287 Tweets

53 Followers

453 Following

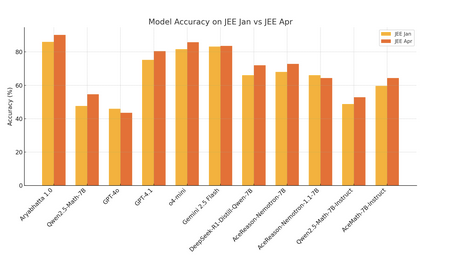

Excited to share Aryabhatta 1.0, our leading model that scores 90.2% on JEE Mains, outperforming frontier models like o4 mini and Gemini Flash 2.5. Trained by us at AthenaAgent, in collaboration with Physics Wallah (PW), using custom RLVR training on 130K+ curated JEE problems.