Animesh Singh

@animeshsingh

#ArtificialIntelligence #DeepLearning #MachineLearning #MLOps #AI #ML #DL #Kubernetes #Cloud

ID: 28748501

04-04-2009 05:39:48

5.5K Tweets

2.2K Followers

2.2K Following

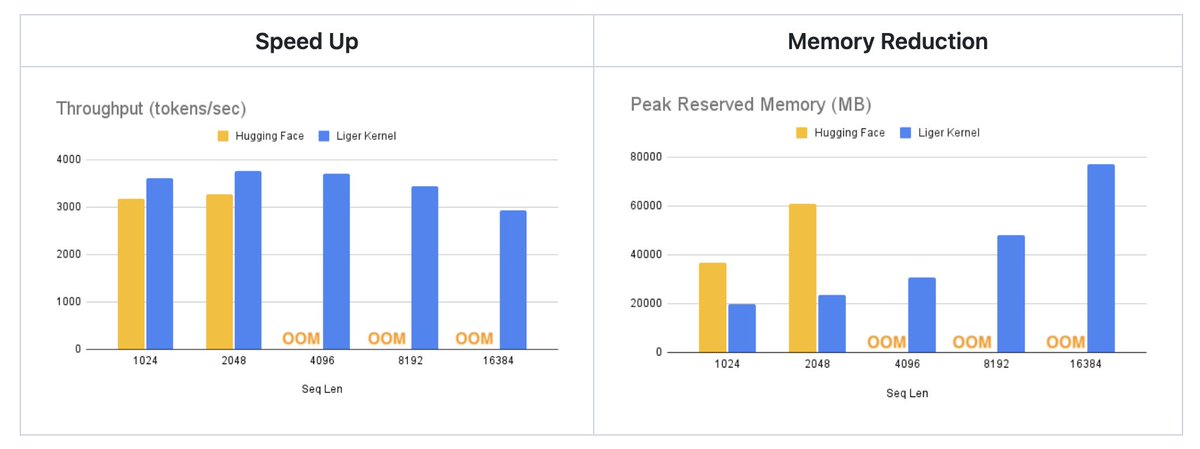

Lol what, LinkedIn casually dropped a kernel repo that reduces LLM training time on multi-GPU by 20% while reducing memory by 60%? 😂 I'll start posting more on LinkedIn from now on. h/t Wing Lian (caseus) (who of course already merged this into Axolotl 👏) github.com/linkedin/Liger…

If you've read this far, be sure to star our repo at github.com/linkedin/Liger…! We would like to thank Animesh Singh, Haowen Ning, Yanning Chen for the leadership support, shao, Qingquan Song, Yun Dai, Vignesh Kothapalli, Shivam Sahni, Zain Merchant for the

As the biggest Andrej Karpathy fanboy, all the hard work paid off 🫡

From intervitens: > previously I was maxing out VRAM with CPU param offload, and now, even without offloading, I only get 75% VRAM usage. It actually... just works™ (4B FFT on 4x3090s, bs1 @ 8192 context)

Liger Kernel is officially supported in SFTTrainer, the most popular trainer for LLM fine-tuning. Add `--use_liger_kernel` to supercharge training with one flag. Hugging Face, Thomas Wolf, Lewis Tunstall

…iinfragpuskernelsllmsa.splashthat.com Last 3 days to RSVP to the insightful ML sys meetup at the LinkedIn campus! Lianmin Zheng has joined as a speaker on SGLang! Animesh Singh, Guanhua Wang, Ying Sheng, Pradeep Ramani