Andreea Bobu

@andreea7b

Assistant Professor @MITAeroAstro and @MIT_CSAIL ∙ PhD from @Berkeley_EECS ∙ machine learning, robots, humans, and alignment

ID: 2200242594

https://andreea7b.github.io/

Joined 17-11-2013 21:37:37

100 Tweets

2.2K Followers

436 Following

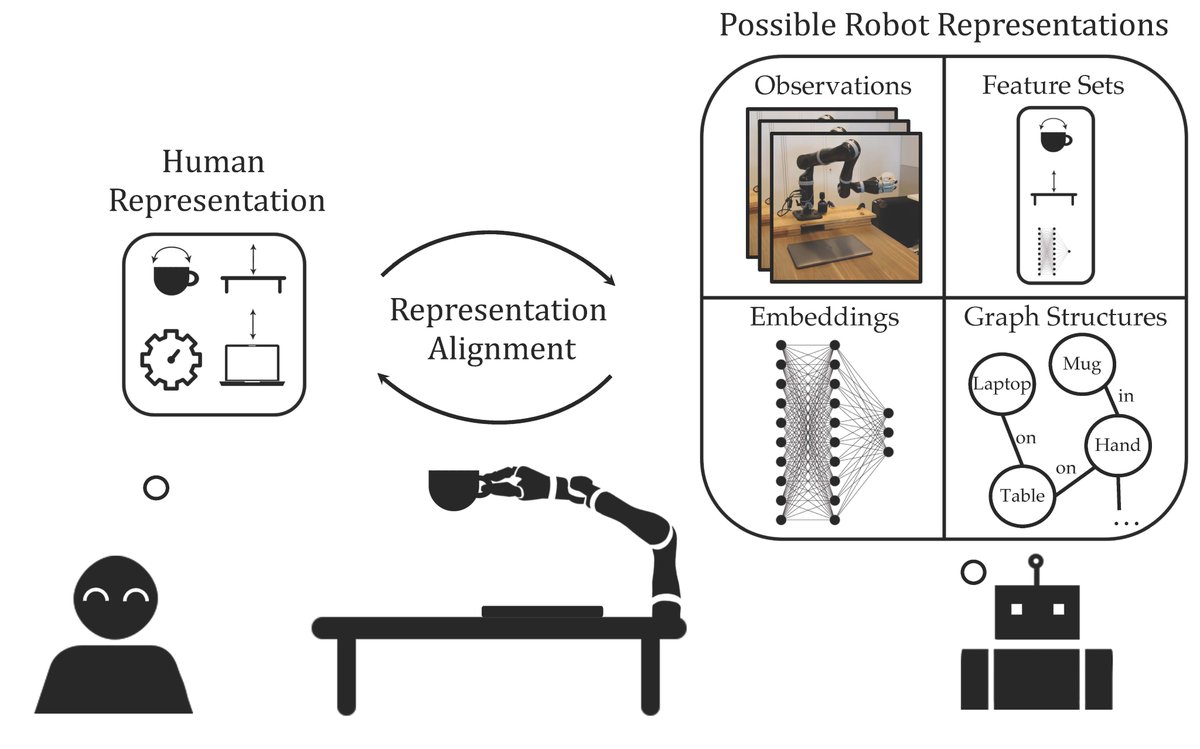

What does it mean for humans and robots to align their representations of their tasks, and how do current approaches fare? Come see it at #HRI2024 in the Tuesday 17:10 session! Paper: arxiv.org/abs/2302.01928 w/ Andi Peng, Pulkit Agrawal, Julie Shah, Anca Dragan

Andreea Bobu explores how robots can align their representations with those of humans in manipulation tasks. #hri2024

Excited to share our RSS 2024 (Robotics: Science and Systems) workshop, Mechanisms for Mapping Human Input to Robots: From Robot Learning to Shared Control/Autonomy, on July 15 in Delft! mechanisms-hri.github.io w/ Andreea Bobu, Tesca Fitzgerald, Mario Selvaggio, Harold Soh, Julie Shah

Deadline extended for submissions to our #RSS2024 Robotics: Science and Systems workshop, Mechanisms for Mapping Human Input to Robots: mechanisms-hri.github.io New deadline: 5/17 AOE!

Final Deadline Extension for Contributions: Now 5/24 AOE! Please consider submitting to our workshop (with Andreea Bobu, Tesca Fitzgerald, Mario Selvaggio, Harold Soh, Julie Shah)!

Get ready to explore robotics with RoboLaunch! 🚀 Our next speaker is Dr. Andreea Bobu, an incoming Assistant Professor at MIT. 🤖 Tune in this Wednesday, 7/10/24, at 11:00 AM EDT and join the conversation on our YouTube channel: youtube.com/watch?v=lgt255…

Excited to share our work on smarter inference-time compute allocation! By estimating query difficulty and focusing resources on harder problems, we cut compute by up to 50% with no performance loss on math/coding tasks. Huge shoutout to Mehul Damani for leading this!