Aaron Mueller

@amuuueller

Postdoc with @boknilev/@davidbau | Incoming Asst. Prof. in CS at @BU_Tweets | Interested in #NLProc, interpretability, and evaluation | Formerly: PhD @jhuclsp

http://aaronmueller.github.io 22-09-2015 23:04:12

244 Tweets

1.1K Followers

689 Following

💡 New ICLR paper! 💡 "On Linear Representations and Pretraining Data Frequency in Language Models": We provide an explanation for when and why linear representations form in large (or small) language models. Led by Jack Merullo, w/ Noah A. Smith & Sarah Wiegreffe

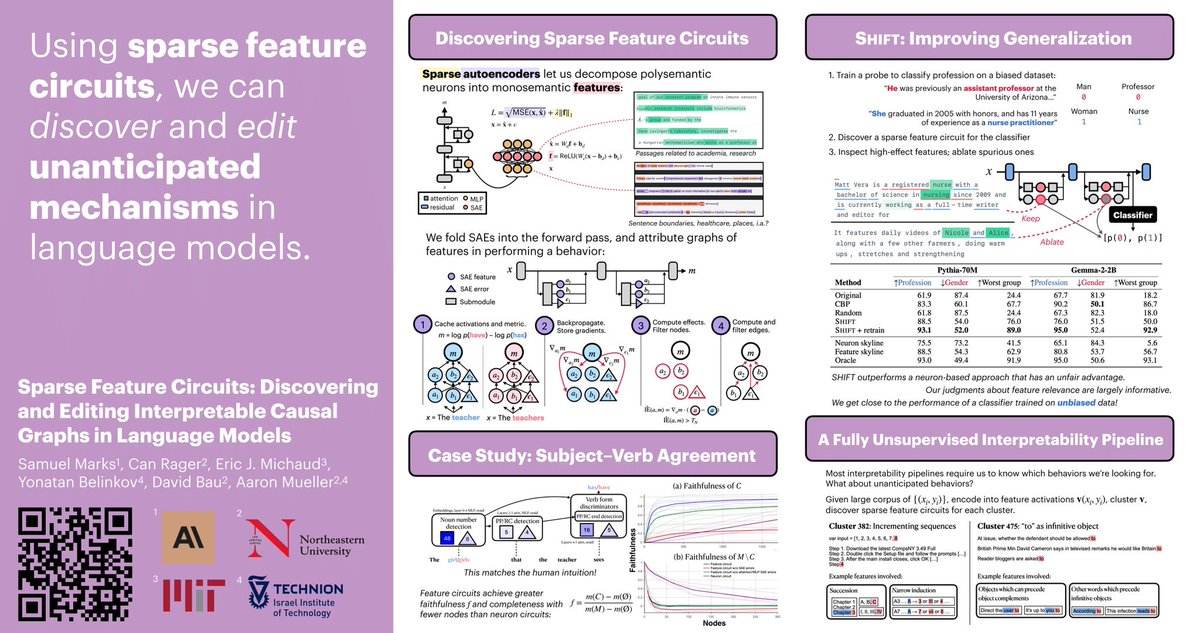

Our paper "Position-Aware Circuit Discovery" got accepted to ACL! 🎉 Huge thanks to my collaborators 🙏 Hadas Orgad, David Bau, Aaron Mueller, Yonatan Belinkov. See you in Vienna! 🇦🇹 #ACL2025

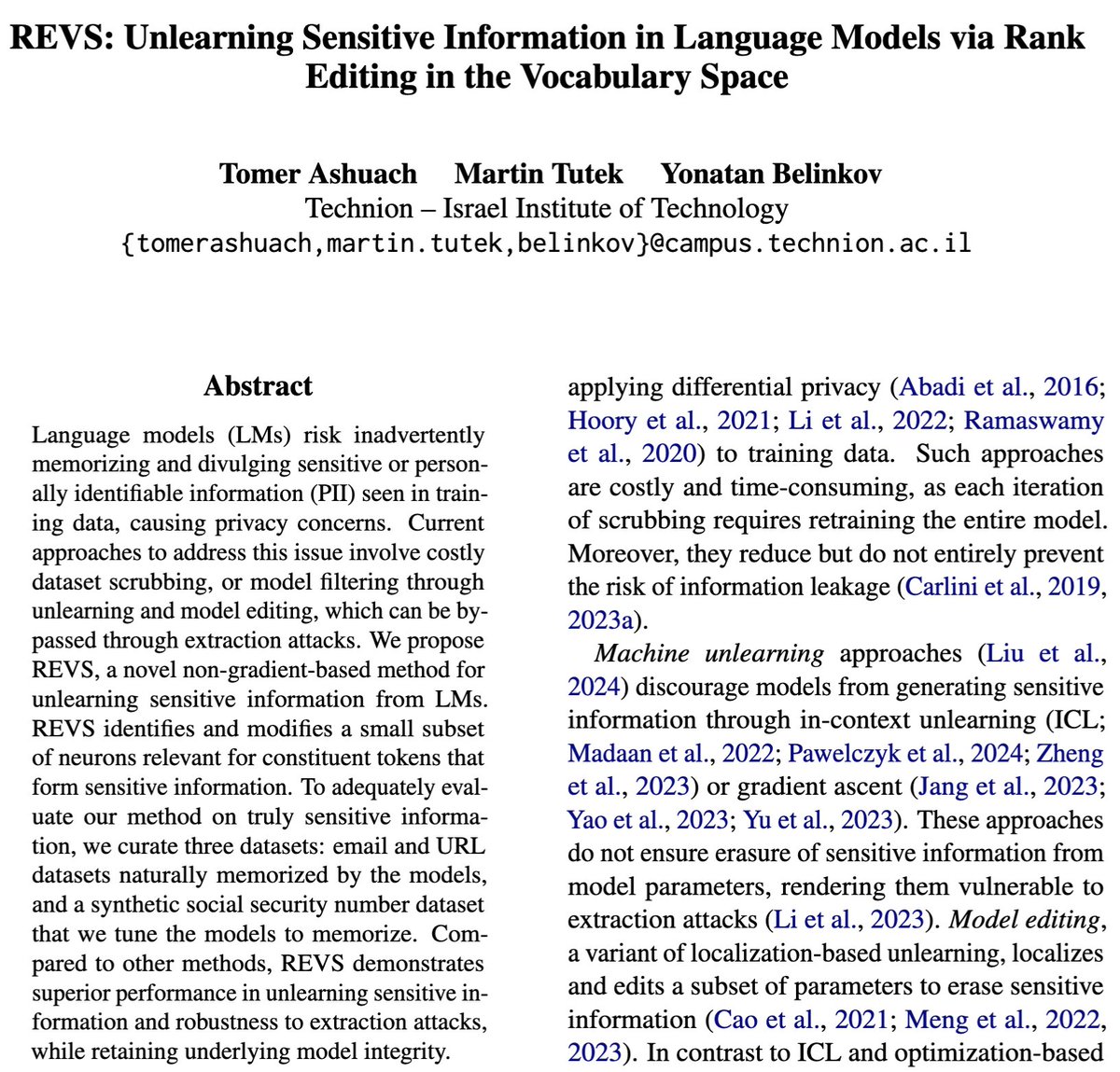

🚨 New paper at #ACL2025 Findings! REVS: Unlearning Sensitive Information in LMs via Rank Editing in the Vocabulary Space. LMs memorize and leak sensitive data (emails, SSNs, URLs) from their training data. We propose a surgical method to unlearn it. 🧵👇 w/ Yonatan Belinkov, Martin Tutek 1/8

BabyLM's First Constructions: a new study on usage-based language acquisition in LMs, w/ Leonie Weissweiler and Cory Shain. Simple interventions show that LMs trained on cognitively plausible data acquire diverse constructions (cxns). 🧵

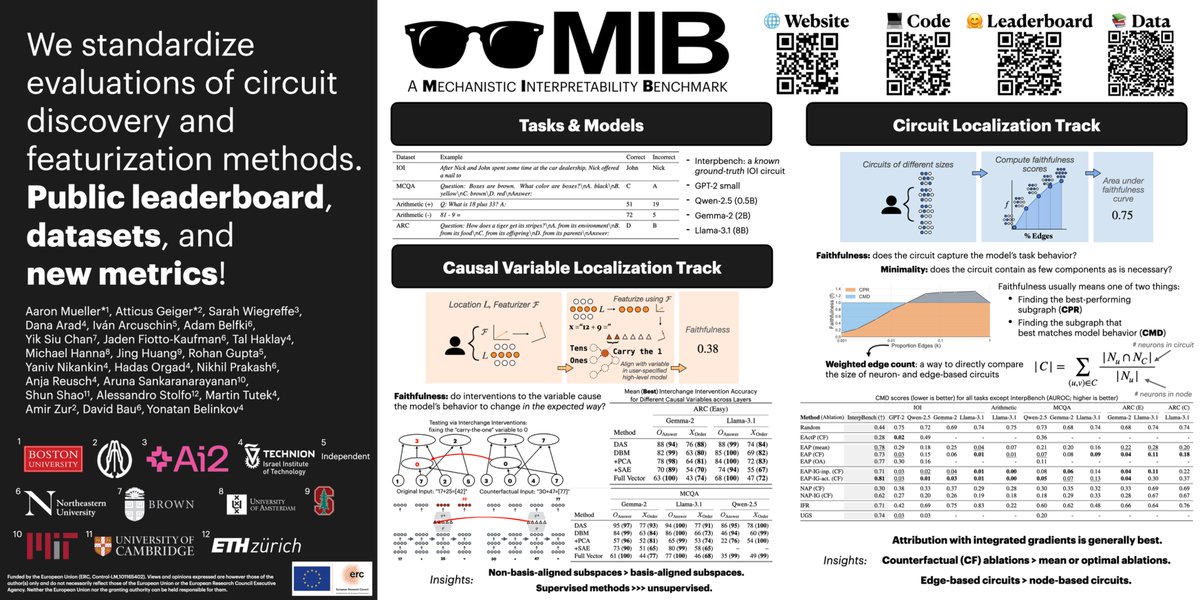

If you're at #ICML2025, chat with me, Sarah Wiegreffe, Atticus, and others at our poster, 11am to 1:30pm at East #1205! We're establishing a 𝗠echanistic 𝗜nterpretability 𝗕enchmark. We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

We're presenting the Mechanistic Interpretability Benchmark (MIB) now! Come and chat at East 1205. Project led by Aaron Mueller, Atticus Geiger, and Sarah Wiegreffe.