Alireza Mousavi @ ICLR 2025

@alirezamh_

CS PhD student @UofT and @VectorInst. Interested in deep learning theory.

ID: 1844793221240483848

https://mousavih.github.io 11-10-2024 17:32:44

28 Tweets

215 Followers

183 Following

Join us on Wednesday, November 13th, at 12:30 PM EDT for a talk by Alireza Mousavi (UofT) on "Learning and Optimization with Mean-Field Langevin Dynamics" at Mila - Institut québécois d'IA in Montreal

Honored to receive this fellowship. Thank you RBC Borealis for supporting our research, and for the cool sweatshirts :)

Congratulations to Vector-affiliated researchers Alireza Mousavi-Hosseini and Mohammed Adnan, who were named RBC Borealis Fellows. The RBC Borealis Fellowships Program represents excellence in Canadian AI research and innovation. We’re proud to see our affiliated researchers

New work arxiv.org/abs/2506.05500 on learning multi-index models with Alex Damian and Joan Bruna. Multi-index models are of the form y = g(Ux), where U is an r × d matrix mapping from d dimensions to r dimensions, with d ≫ r, and g is an arbitrary function. Examples of multi-index models are any neural net
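To make the definition y = g(Ux) concrete, here is a minimal sketch that samples a multi-index model and checks its defining property: y depends on x only through the r-dimensional projection Ux, so moving x orthogonally to the rows of U leaves y unchanged. This is an illustration only, not the method from the paper. It assumes Gaussian inputs, a U with orthonormal rows, and a hypothetical link function g; the helper names (`make_projection`, `null_space_component`, `multi_index`) are also hypothetical.

```python
import math
import random


def make_projection(r, d, seed=0):
    """Random r x d matrix U with orthonormal rows (Gram-Schmidt), assuming r <= d."""
    rng = random.Random(seed)
    rows = []
    while len(rows) < r:
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        # Subtract the components of v along the rows already accepted.
        for q in rows:
            dot = sum(a * b for a, b in zip(q, v))
            v = [a - dot * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-12:
            rows.append([a / norm for a in v])
    return rows


def project(U, x):
    """Compute z = Ux, the r-dimensional projection of x."""
    return [sum(u_i * x_i for u_i, x_i in zip(row, x)) for row in U]


def g(z):
    """Hypothetical link function g: R^r -> R (any function of z would do)."""
    return math.tanh(z[0]) + z[0] * z[1]


def multi_index(U, x):
    """y = g(Ux): the label sees x only through its projection onto r directions."""
    return g(project(U, x))


def null_space_component(U, v):
    """Remove from v its component in the row space of U (rows assumed orthonormal)."""
    for q in U:
        dot = sum(a * b for a, b in zip(q, v))
        v = [a - dot * b for a, b in zip(v, q)]
    return v


# d >> r: 50 input dimensions, but y depends on only 2 directions.
d, r = 50, 2
U = make_projection(r, d)
rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(d)]

# A large perturbation that lies entirely outside the row space of U.
noise = null_space_component(U, [rng.gauss(0.0, 1.0) for _ in range(d)])
x_moved = [a + b for a, b in zip(x, noise)]

y1 = multi_index(U, x)
y2 = multi_index(U, x_moved)
print(abs(y1 - y2) < 1e-9)  # True: only Ux matters
```

Orthonormal rows are a convenience for the null-space check; a general r × d matrix defines the same model class, since g can absorb any invertible linear map on the r-dimensional projection.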

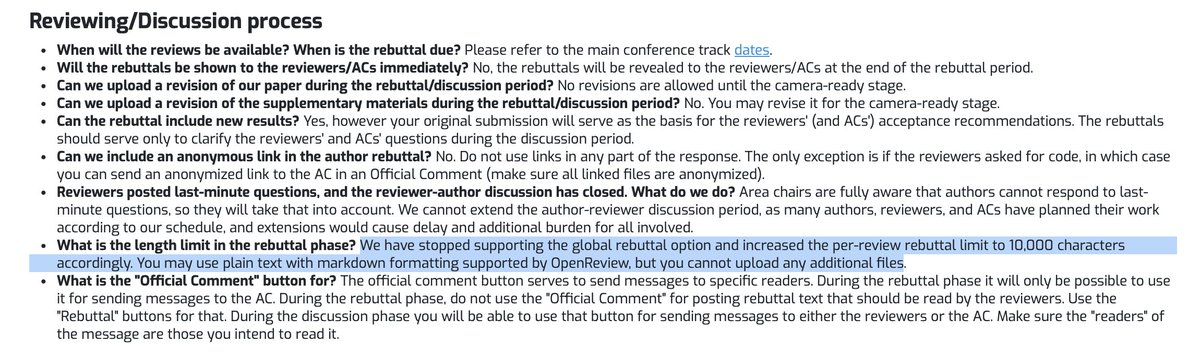

NeurIPS Conference, why take away authors' option to include figures in their rebuttals? Grounding the discussion in hard evidence (like plots) makes resolving disagreements much easier for both authors and reviewers. Left: NeurIPS