Alex Damian

@alex_damian_

ID: 1374506243612536841

23-03-2021 23:40:10

13 Tweets

290 Followers

84 Following

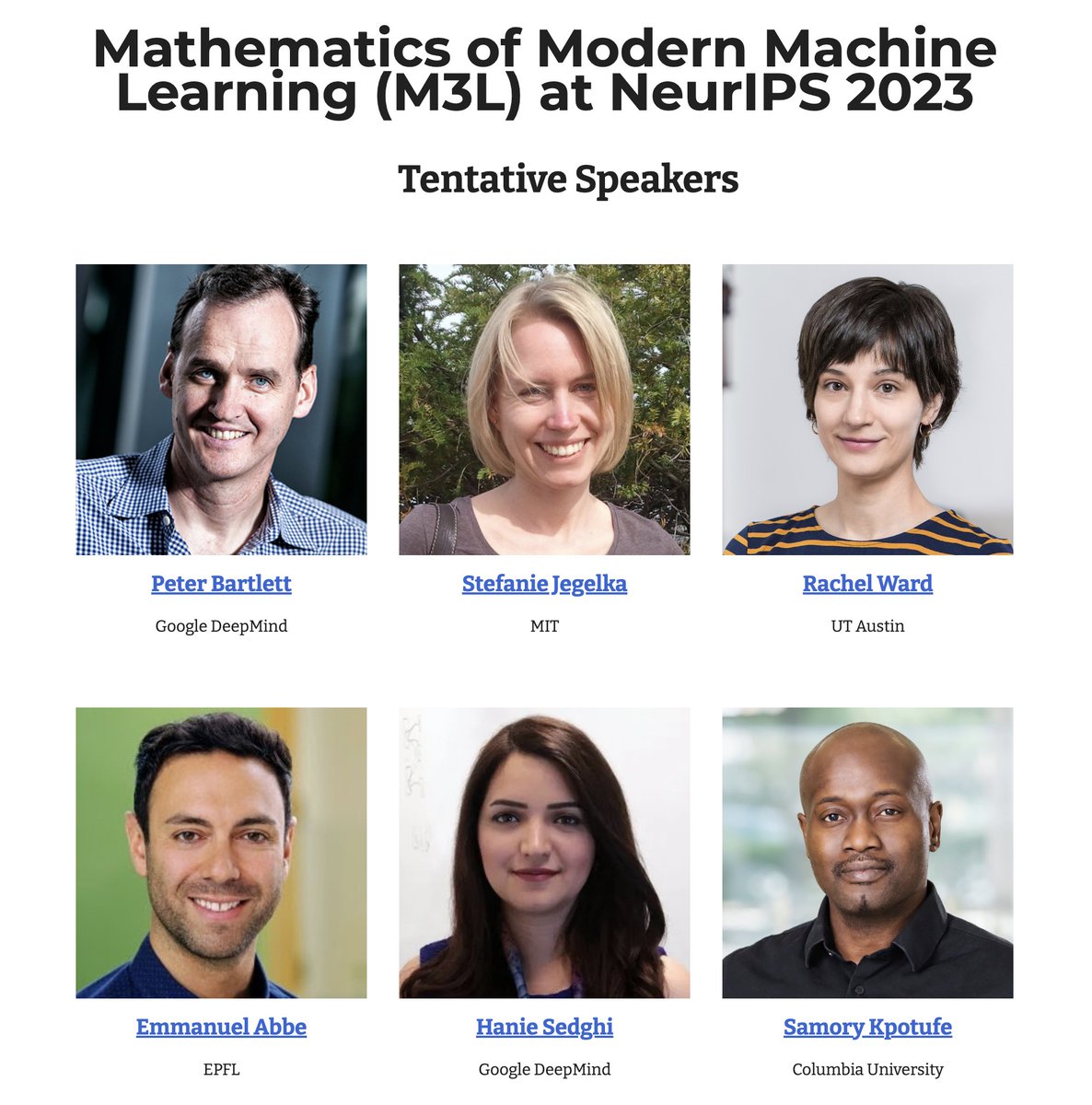

🚨💡 We are organizing a workshop on Mathematics of Modern Machine Learning (M3L) at #NeurIPS2023! 🚀 Join us if you are interested in exploring theories for understanding and advancing modern ML practice.

sites.google.com/view/m3l-2023

Submission ddl: October 2, 2023

M3L Workshop @ NeurIPS 2024

fly51fly (@fly51fly):

[LG] How Transformers Learn Causal Structure with Gradient Descent

E Nichani, A Damian, J D. Lee [Princeton University] (2024)

arxiv.org/abs/2402.14735

- The paper studies how transformers learn causal structure through gradient descent when trained on a novel in-context learning

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GHGdmklbsAACyzp.jpg)