Amirkeivan Mohtashami

@akmohtashami_a

PhD - EPFL

ID: 1661997220768432128

26-05-2023 07:28:55

31 Tweets

183 Followers

88 Following

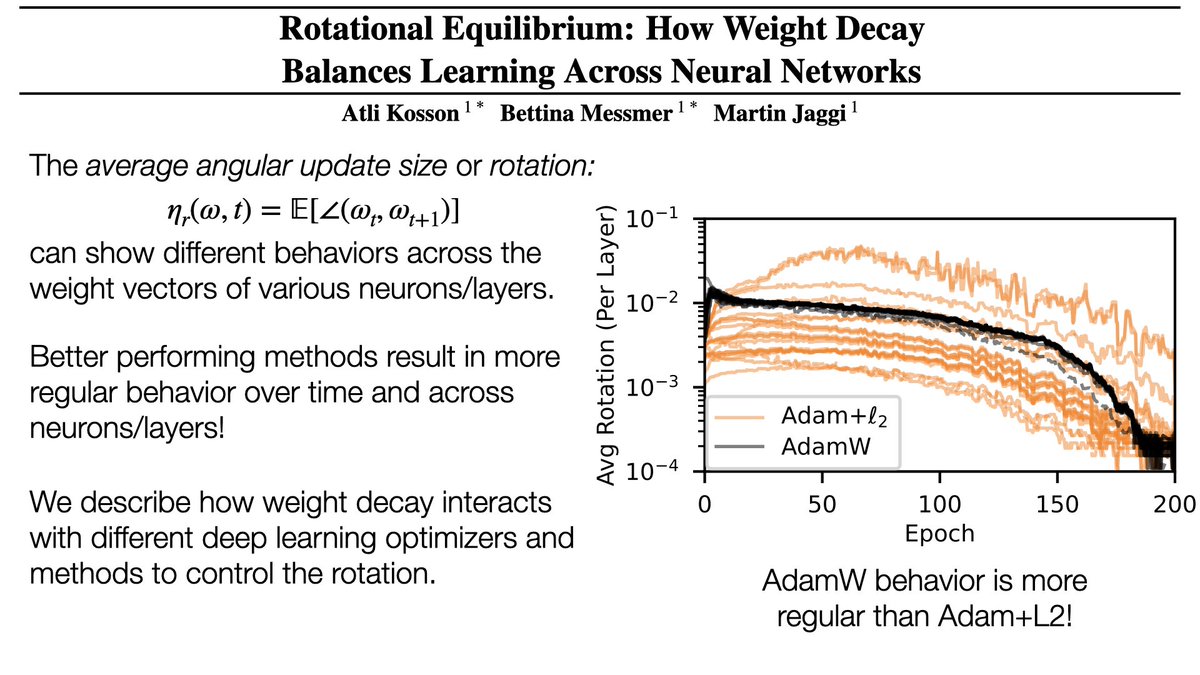

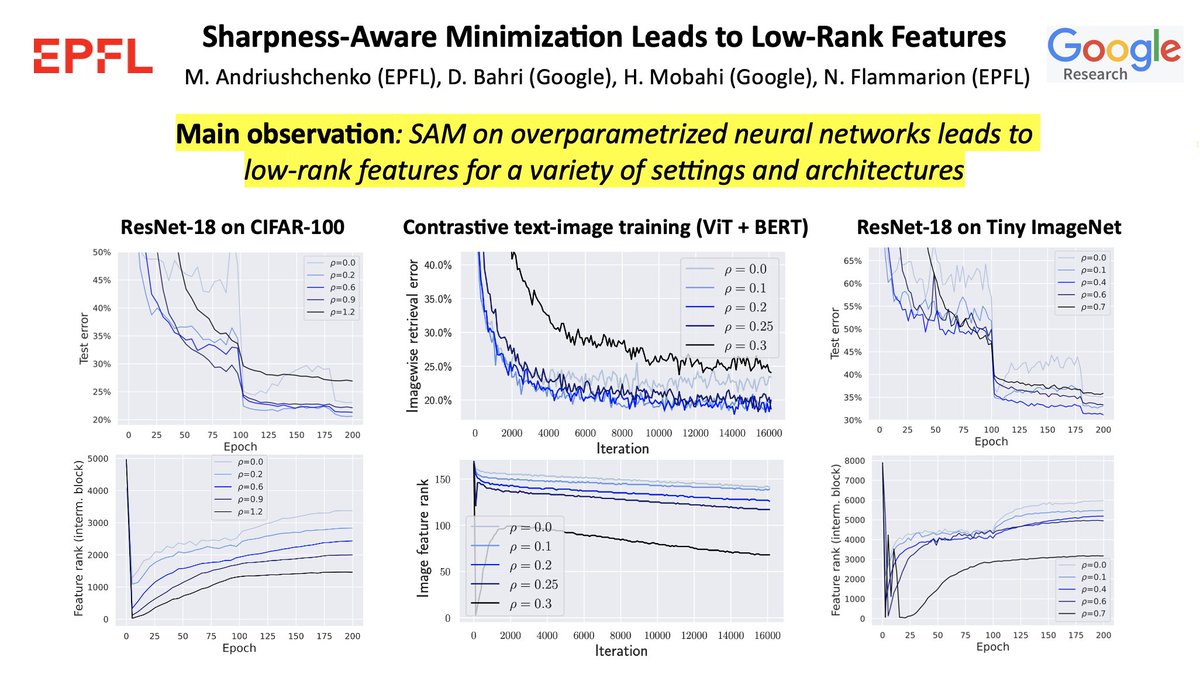

🚨Excited to share our new work “Sharpness-Aware Minimization Leads to Low-Rank Features” arxiv.org/abs/2305.16292! ❓We know SAM improves generalization, but can we better understand the structure of features learned by SAM? (with Dara Bahri, Hossein Mobahi, N. Flammarion) 🧵1/n

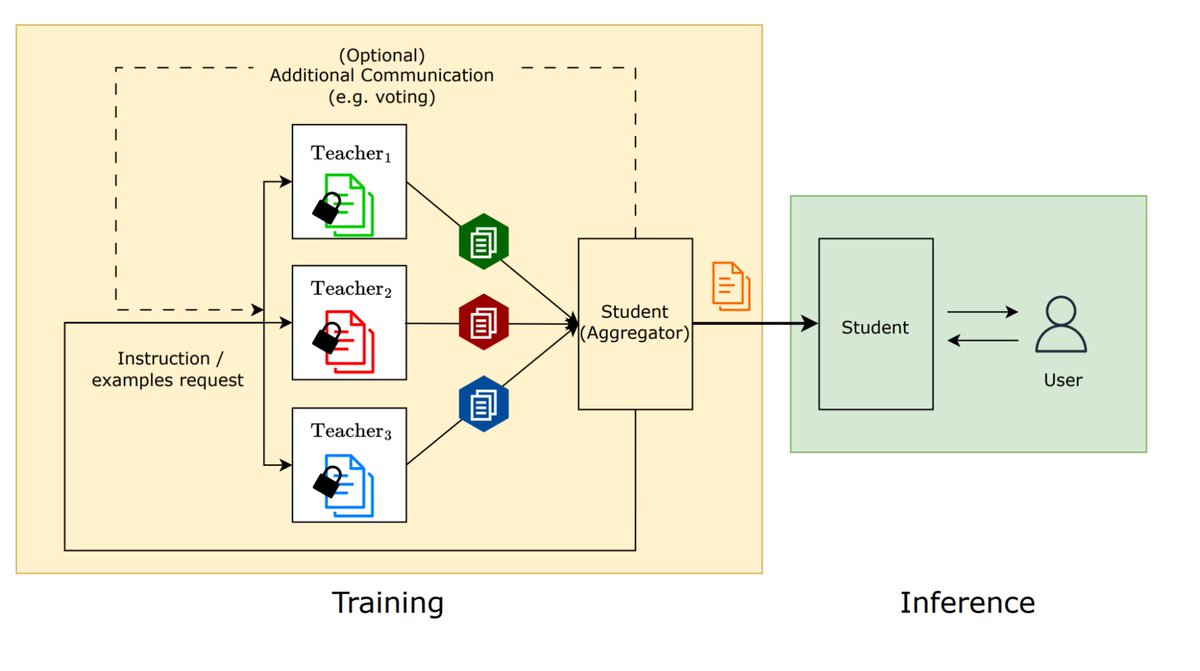

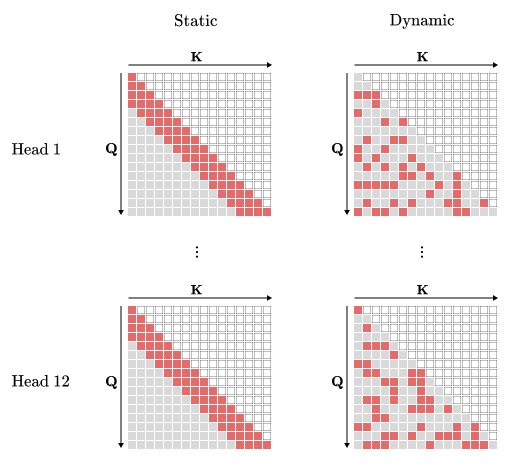

How can we speed up the training of transformers over long sequences? Many methods sparsify the attention matrix with static patterns. Could we use dynamic (e.g. adaptive) patterns? A thread! Joint work with Daniele Paliotta (equal contribution), François Fleuret, and Martin Jaggi

Both ICML Conference and NeurIPS Conference are held in the US in 2022-23! The US is one of the most visa-unfriendly states (appointment wait times of 6+ months, then another 6+ months of processing); this is significantly hurting diversity & inclusion. We should strive to do better! #ICML2023 #NeurIPS2023

Now out in Nature Communications! We developed EnsembleTR, an ensemble method to combine genotypes from 4 major tandem repeat callers and generated a genome-wide catalog of ~1.7 million TRs from 3550 samples in the 1000 Genomes and H3Africa cohorts. doi.org/10.1038/s41467…

If you're a Python programmer looking to get started with CUDA, this weekend I'll be doing a free 1-hour tutorial on the absolute basics. Thanks to Andreas Köpf, Mark Saroufim, and dfsgdf for hosting this on the CUDA MODE server. :D Click here: discord.gg/6z79K5Yh?event…

Skip connections are not enough! We show that providing the individual outputs of previous layers to each Transformer layer significantly boosts its performance. See the thread for more! Had an amazing time collaborating with Matteo Pagliardini, François Fleuret, and Martin Jaggi.
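
A minimal sketch of the idea in that tweet, assuming each layer's input is a weighted combination of the input embedding and every earlier layer's individual output (rather than only the running residual sum). The helpers `toy_layer` and `forward_with_all_previous` and the `alphas` mixing coefficients are hypothetical illustrations; `toy_layer` is a stand-in, not a real Transformer block, and this is not the authors' implementation.

```python
import numpy as np


def toy_layer(x, W):
    """Hypothetical stand-in for a Transformer block: residual update x + tanh(xW)."""
    return x + np.tanh(x @ W)


def forward_with_all_previous(x0, weights, alphas):
    """Run a stack of toy layers where layer i sees all earlier representations.

    history[0] is the input embedding x0; history[j] (j >= 1) is the output of
    layer j - 1. Layer i receives sum_j alphas[i][j] * history[j] over j = 0..i,
    so alphas[i] must have length i + 1.
    """
    history = [x0]
    for i, W in enumerate(weights):
        coeffs = alphas[i]
        assert len(coeffs) == len(history), "one coefficient per earlier representation"
        # Weighted combination of the embedding and each earlier layer's own output.
        mixed = sum(a * h for a, h in zip(coeffs, history))
        history.append(toy_layer(mixed, W))
    return history[-1]


# Example: 4 toy layers on a batch of 2 vectors of width 8.
rng = np.random.default_rng(0)
d, n_layers = 8, 4
x0 = rng.standard_normal((2, d))
weights = [0.1 * rng.standard_normal((d, d)) for _ in range(n_layers)]
# Assumption: uniform averaging over all earlier representations; in practice
# these coefficients would be learned.
alphas = [np.full(i + 1, 1.0 / (i + 1)) for i in range(n_layers)]
out = forward_with_all_previous(x0, weights, alphas)
print(out.shape)  # (2, 8)
```

With one-hot `alphas` that select only the most recent output, the loop reduces to an ordinary stack of residual layers; letting the coefficients vary is what exposes the individual earlier outputs to each layer.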

[1/7] Happy to release 🥕QuaRot, a post-training quantization scheme that enables 4-bit inference of LLMs by removing the outlier features. With Amirkeivan Mohtashami, @max_croci, Dan Alistarh, Torsten Hoefler 🇨🇭, James Hensman, and others. Paper: arxiv.org/abs/2404.00456 Code: github.com/spcl/QuaRot

![Saleh Ashkboos (@ashkboossaleh) on Twitter photo](https://pbs.twimg.com/media/GKItBQxW4AAh30A.jpg)