Akhil Mathur

@akhilmathurs

Research Scientist in Generative AI @MetaAI | ex-@BellLabs

ID: 896724155843973120

https://akhilmathurs.github.io

13-08-2017 13:24:33

176 Tweets

488 Followers

144 Following

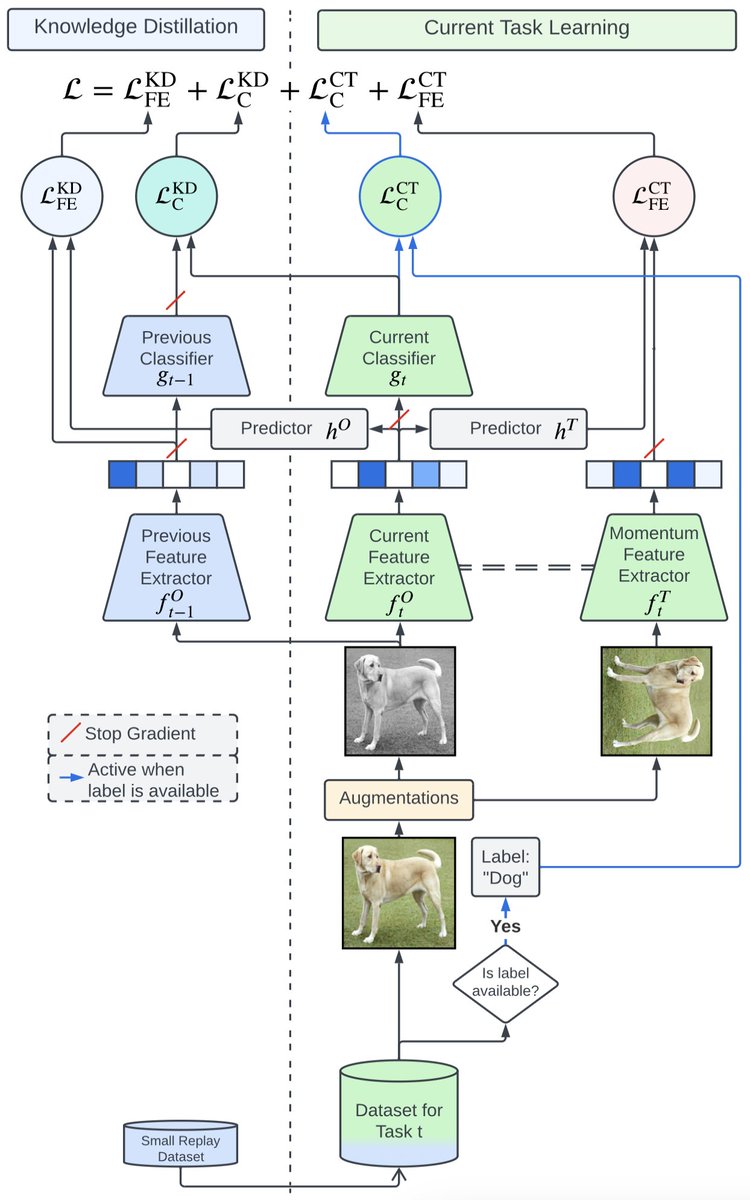

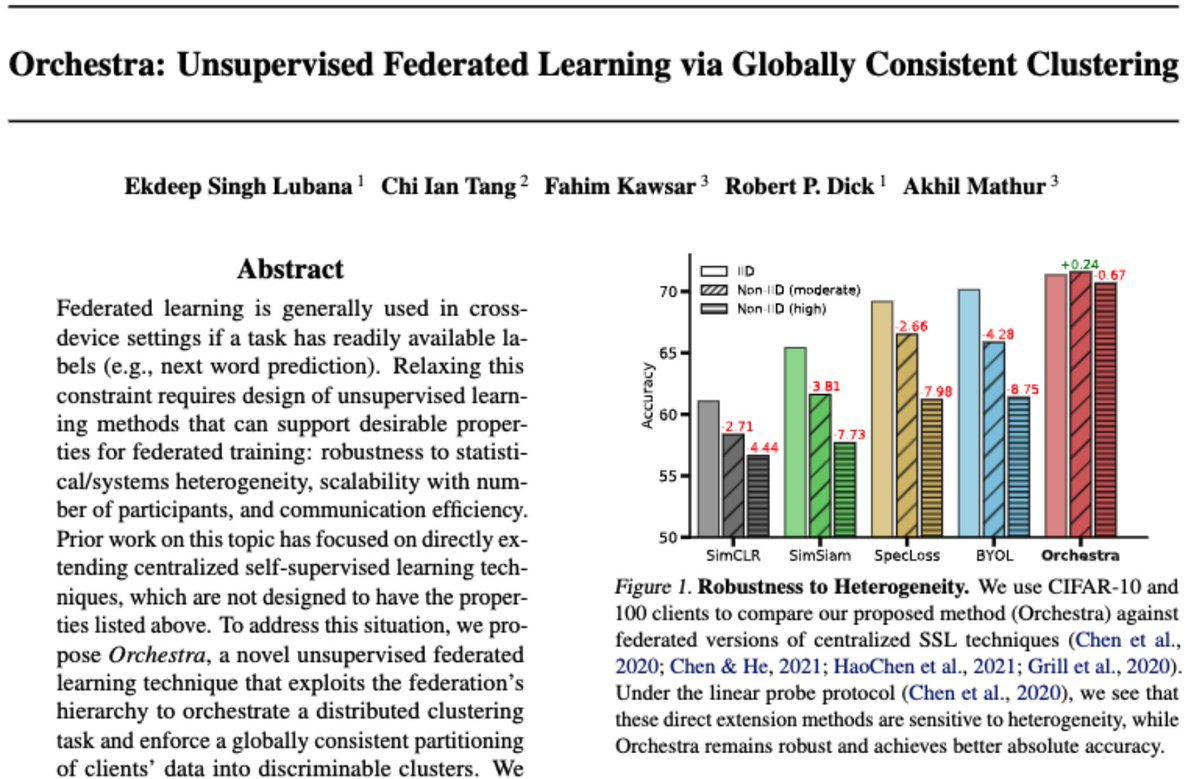

Very happy to share that our work on self-supervised federated learning in resource-constrained settings has been accepted at #ICML 2022. A fantastic outcome for the internship work by Ekdeep Singh Lubana, in collaboration with Ian Tang and Fahim Kawsar. arXiv link and more details coming soon.

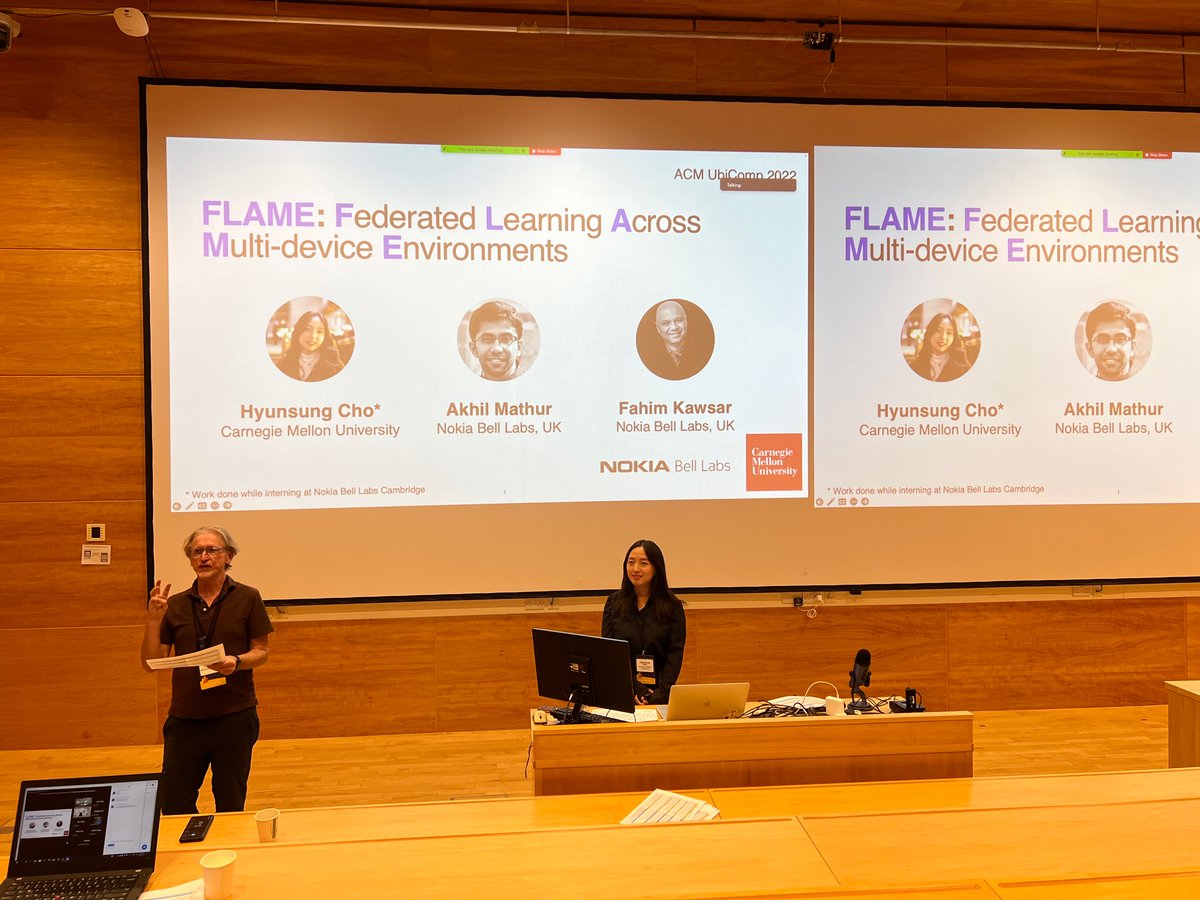

Glad to share that our work FLAME has been accepted to IMWUT '22. We explore algorithmic & system challenges for federated learning in the presence of multiple data-generating devices on a user. Proud of our intern Hyunsung Cho who led this work. arxiv.org/abs/2202.08922 Fahim Kawsar

Hyunsung Cho is presenting her work FLAME 🔥, which shows how to make federated learning work in multi-device environments. #UbiComp2022

🥁🥁 Join us for the ACM SIGCHI Symposium for 𝐇𝐂𝐈 𝐚𝐧𝐝 𝐅𝐫𝐢𝐞𝐧𝐝𝐬 at IIT Bombay, India, 9-11 December 2022! 🧵 Speakers: namastehci.in/#speakers. Applications due November 9th: namastehci.in/#registration 🙏 Anupriya, Akhil Mathur, Anirudha Joshi, @Pushpendra_S__, Neha Kumar