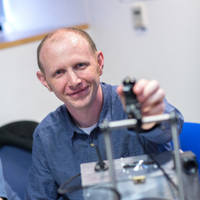

Andrew Davison

@ajddavison

From SLAM to Spatial AI; Professor of Robot Vision, Imperial College London; Director of the Dyson Robotics Lab; Co-Founder of Slamcore. FREng, FRS.

ID: 1446792746

http://www.doc.ic.ac.uk/~ajd/

21-05-2013 16:40:29

3,3K Tweet

18,18K Followers

2,2K Following

Congratulations to Xin Kong, who passed his PhD viva today! Thanks to his examiners Christian Rupprecht and Tolga Birdal. EscherNet was his important paper in generative 3D modelling, introducing Camera Positional Encoding (CaPE) to allow any number of input and output frames.

A new type of neural SLAM: ACE-SLAM builds super-efficient Scene Coordinate Regression maps of scenes in real-time from an RGB-D stream, enabling always-on relocalisation. Inspired by ACE from Eric Brachmann et al. from Niantic Spatial. Dyson Robotics Lab at Imperial College.

In 4DPM, we segment first, then locally reconstruct and track across many frames in 3D, inferring which objects are moving together. We can create an X-ray replay view of the objects inside a drawer after it's closed. Dyson Robotics Lab at Imperial College London, Imperial Computing.