AIneojk

@aineojk

RL (ML . CV)

ID: 1649323480104730624

21-04-2023 08:05:42

2,2K Tweets

255 Followers

7,7K Following

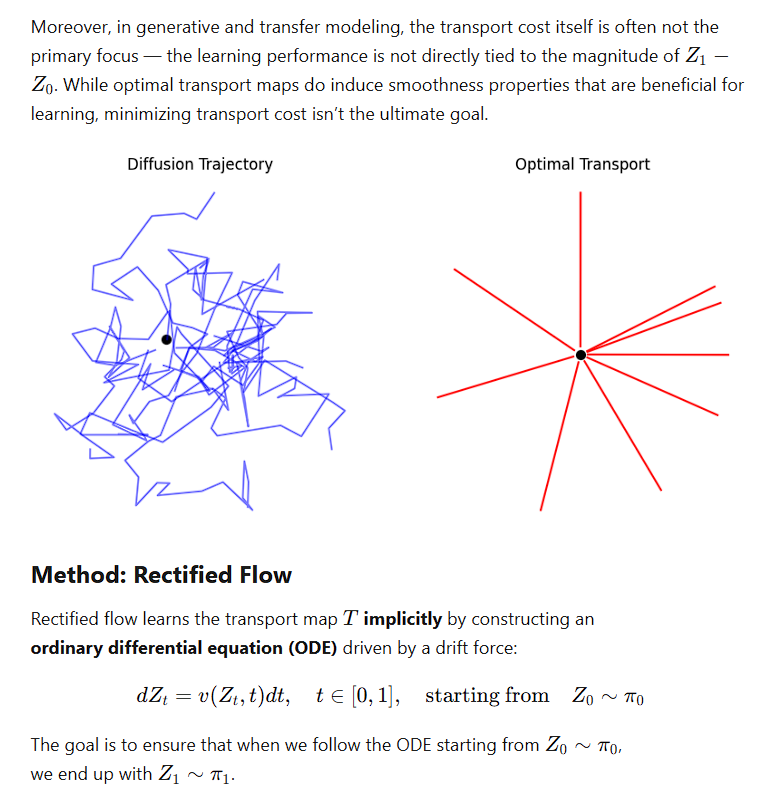

New paper on the generalization of Flow Matching: arxiv.org/abs/2506.03719 🤯 Why does flow matching generalize? Did you know that the flow matching target you're trying to learn **can only generate training points**? With Quentin Bertrand, Anne Gagneux & Rémi Emonet 👇👇👇
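The sketch below illustrates the bolded claim. It assumes a particular setup: the common linear path x_t = (1 - t) x0 + t x1, a standard Gaussian source x0, and an empirical target that puts equal mass on a few training points. It may not be the paper's exact setup. The names `optimal_velocity` and `sample` and the Euler integrator are hypothetical choices for illustration. Under these assumptions the regression target has a closed form. It is a softmax-weighted average of the per-point velocities (x^i - x)/(1 - t), and integrating it from noise ends on a training point.

```python
import math
import random

def optimal_velocity(x, t, data):
    """Closed-form flow matching target u*(x, t) for an empirical data distribution.

    Assumption (illustrative): x_t = (1 - t) * x0 + t * x1, x0 ~ N(0, I),
    x1 drawn uniformly from the training points in `data`.
    """
    sigma = 1.0 - t
    # Given x1 = y, x_t is Gaussian with mean t * y and std (1 - t); these are the log-posteriors.
    logits = [-sum((xi - t * yi) ** 2 for xi, yi in zip(x, y)) / (2 * sigma ** 2) for y in data]
    m = max(logits)  # subtract the max for a numerically stable softmax
    weights = [math.exp(z - m) for z in logits]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Posterior-weighted average of the conditional velocities (y - x) / (1 - t).
    return [sum(w * (y[d] - x[d]) for w, y in zip(weights, data)) / sigma for d in range(len(x))]

def sample(data, steps=500, seed=0):
    """Integrate dx/dt = u*(x, t) from Gaussian noise at t = 0 to t = 1 with explicit Euler."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(len(data[0]))]
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt  # the velocity is only evaluated for t <= 1 - dt, so 1 / (1 - t) stays finite
        v = optimal_velocity(x, t, data)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

data = [[-2.0, 0.0], [2.0, 0.0], [0.0, 3.0]]
for seed in range(3):
    x = sample(data, seed=seed)
    nearest = min(data, key=lambda y: math.dist(x, y))
    print(seed, [round(c, 6) for c in x], "nearest training point:", nearest)
```

In this toy setting every run should stop on (numerically) one of the three training points. This matches the post's point that the exact target, taken alone, does not produce new samples.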