AI Explained

@aiexplainedyt

300k+ YouTube subs, growing a friendly, professional AI networking hub, w/ exclusive videos, podcast and interviews on Patreon. ($7.51/month)

ID: 1617841388745363459

https://www.patreon.com/AIExplained 24-01-2023 11:07:20

107 Tweets

11.11K Followers

239 Following

Exclusive: As OpenAI looks to raise more capital, it's trying to launch AI that can reason through tough problems and help it develop a new AI model, 'Orion.' theinformation.com/articles/opena… From Erin Woo, Stephanie Palazzolo and Amir Efrati

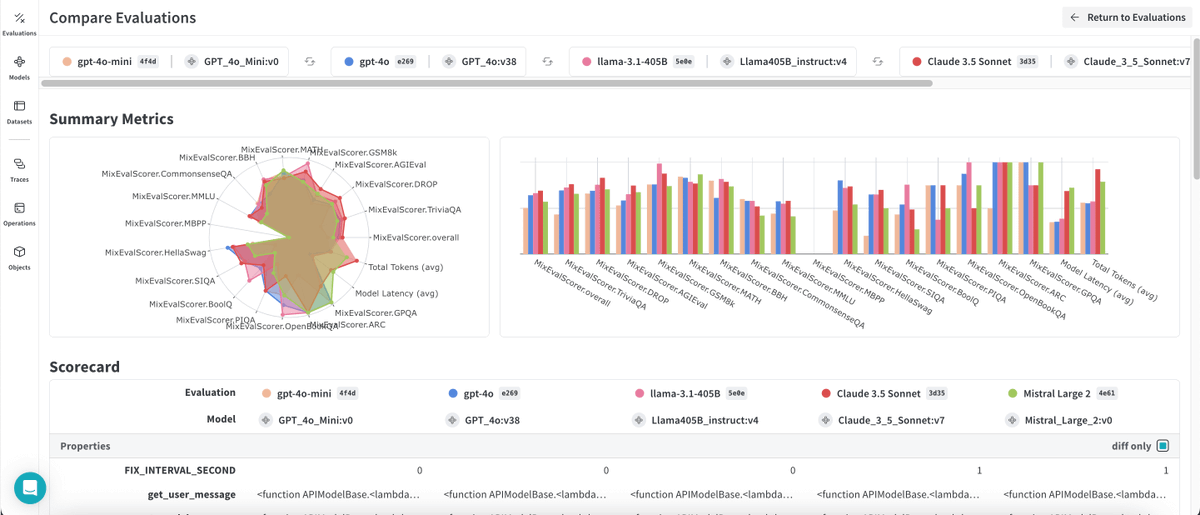

🪄 Think you’re an AI wizard? Prove it. We’ve partnered w/ AI Explained to launch the Simple Bench Evals Competition—a challenge so tough, he said: “If anyone gets 20/20 with a general-purpose prompt, I would be truly shocked.” 😳 Details below 👇