AI Habitat

@ai_habitat

A platform for Embodied AI research.

Project by FAIR (@MetaAI) and Facebook Reality Labs, in collaboration with @gtcomputing, @SFU, @IntelAI, @UCBerkeley.

ID: 1090794734010822657

https://aihabitat.org/ 31-01-2019 02:11:45

492 Tweets

1.1K Followers

31 Following

FAIR researchers (AI at Meta) presented SegmentAnything and our robotics work at the White House Correspondents' weekend. Llama3 + Sim2Real skills (trained with AI Habitat) = a robot assistant.

Naoki Yokoyama presenting his Best Paper Award finalist talk at #ICRA2024! "Vision-Language Frontier Maps for Zero-Shot Semantic Navigation" shows how to combine vision-language (VL) foundation models with a mapping+search stack. Georgia Tech School of Interactive Computing Robotics@GT Machine Learning at Georgia Tech Boston Dynamics

Dhruv Batra We were thrilled to catch the talk live 🤖🔥

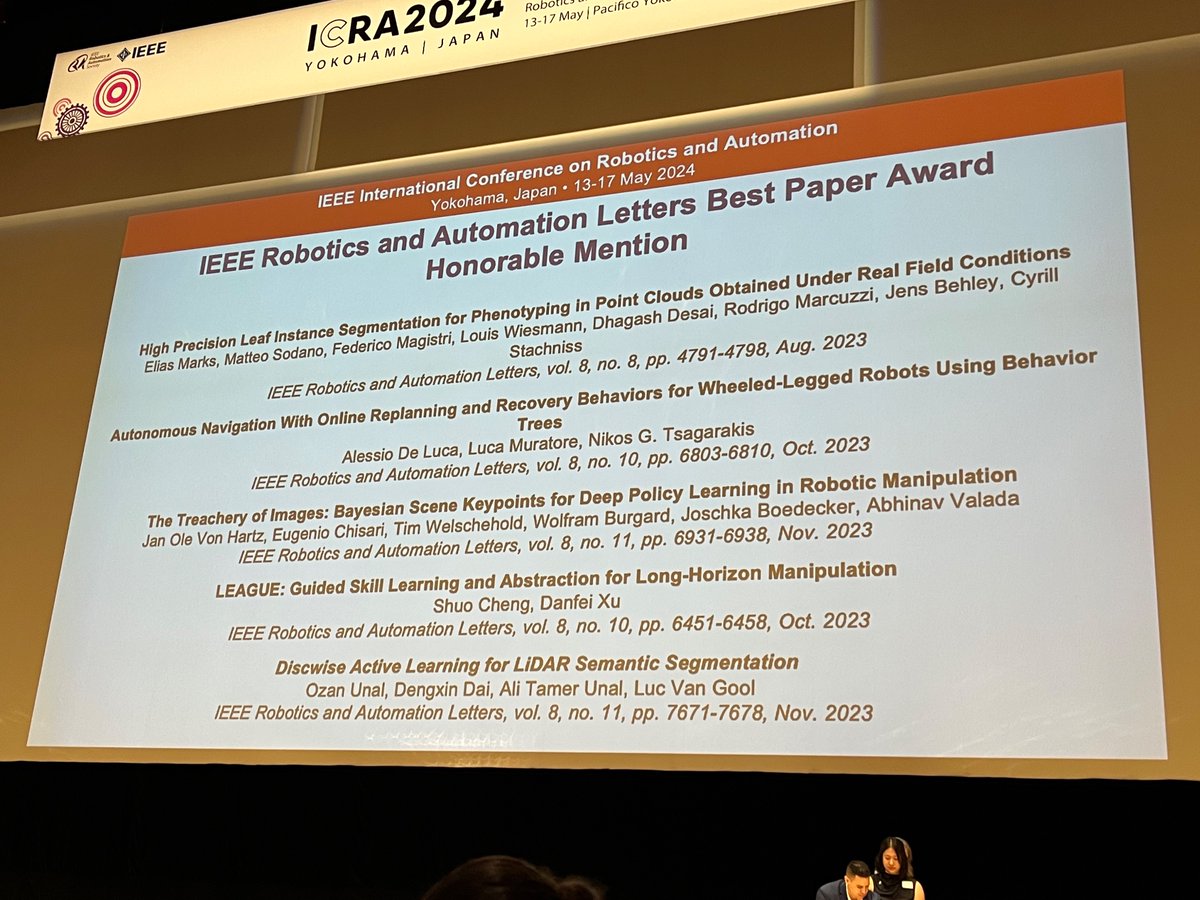

A good day for Georgia Tech School of Interactive Computing and Robotics@GT. Congratulations Sehoon Ha, Naoki Yokoyama, Dhruv Shah, and Shuo Cheng!

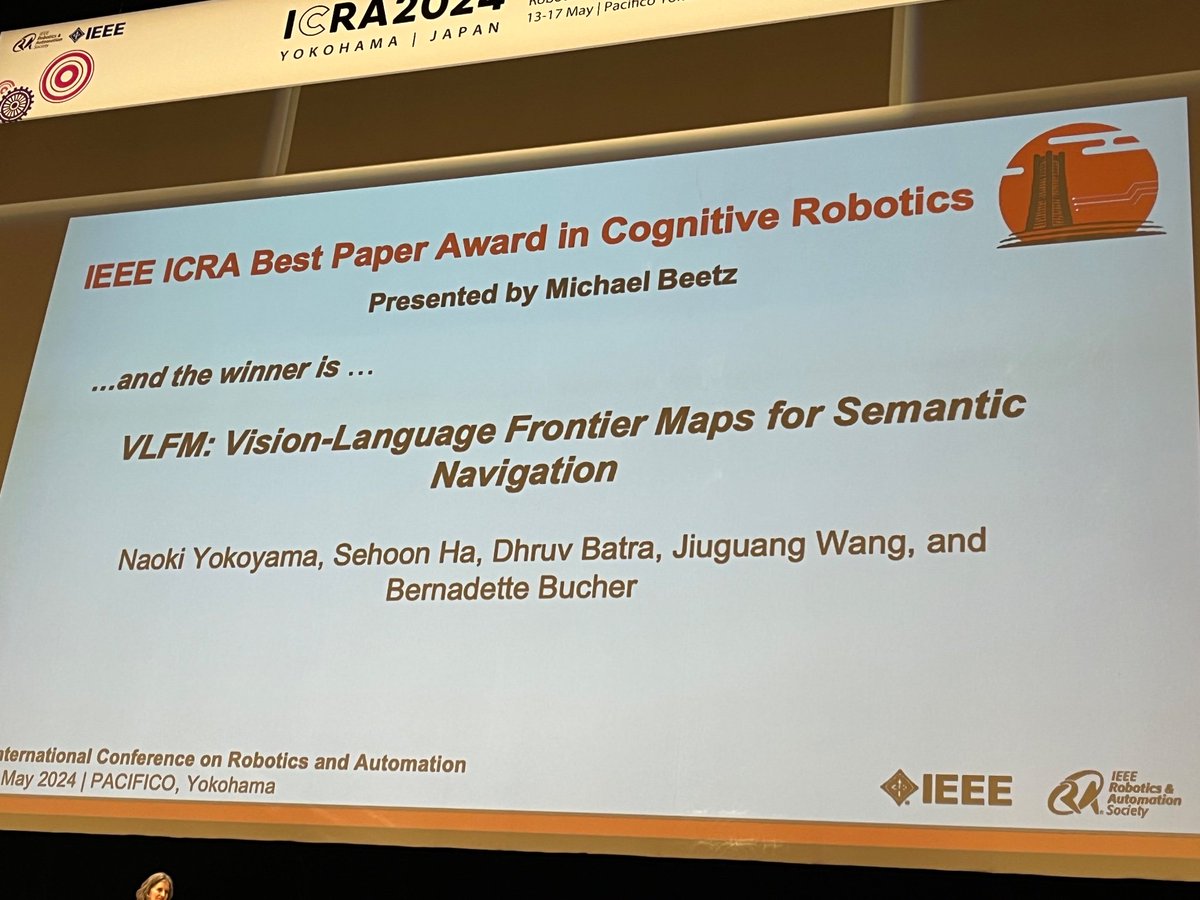

From Best Paper finalist to WINNER 🏆 at IEEE ICRA for Naoki Yokoyama and team. Congratulations, Naoki Yokoyama, Sehoon Ha, Dhruv Batra, and Georgia Tech Computing!

From Best Paper Award finalist to winner! Congratulations Naoki Yokoyama on a well-deserved recognition! Georgia Tech Computing Robotics@GT #icra2024

This is no small feat. At the robotics field's #1 research venue, our researchers are #1, earning the Best Paper Award in Cognitive Robotics from among the 1,700+ eligible papers at #ICRA2024. Partnered with Boston Dynamics. 🤖🏆🎉 Award details: ieee-ras.org/awards-recogni…