Agentica Project

@agentica_

Building generalist agents that scale @BerkeleySky

ID: 1884497281870929920

http://www.agentica-project.com 29-01-2025 07:02:25

55 Tweets

2.2K Followers

8 Following

Check out Michael Luo's latest work, Autellix: an ultra-fast system for serving agentic workloads that achieves 4-15x speedups over vLLM/SGLang! At Agentica, we are committed to building efficient infrastructure for serving and training LLM agents, and Autellix is our first step toward that goal!

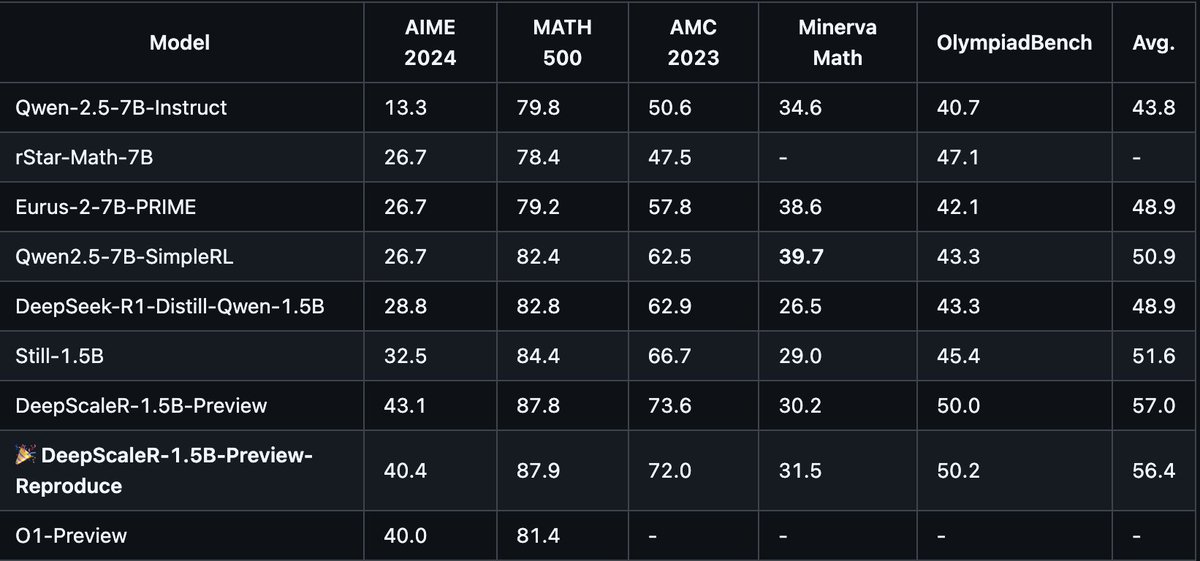

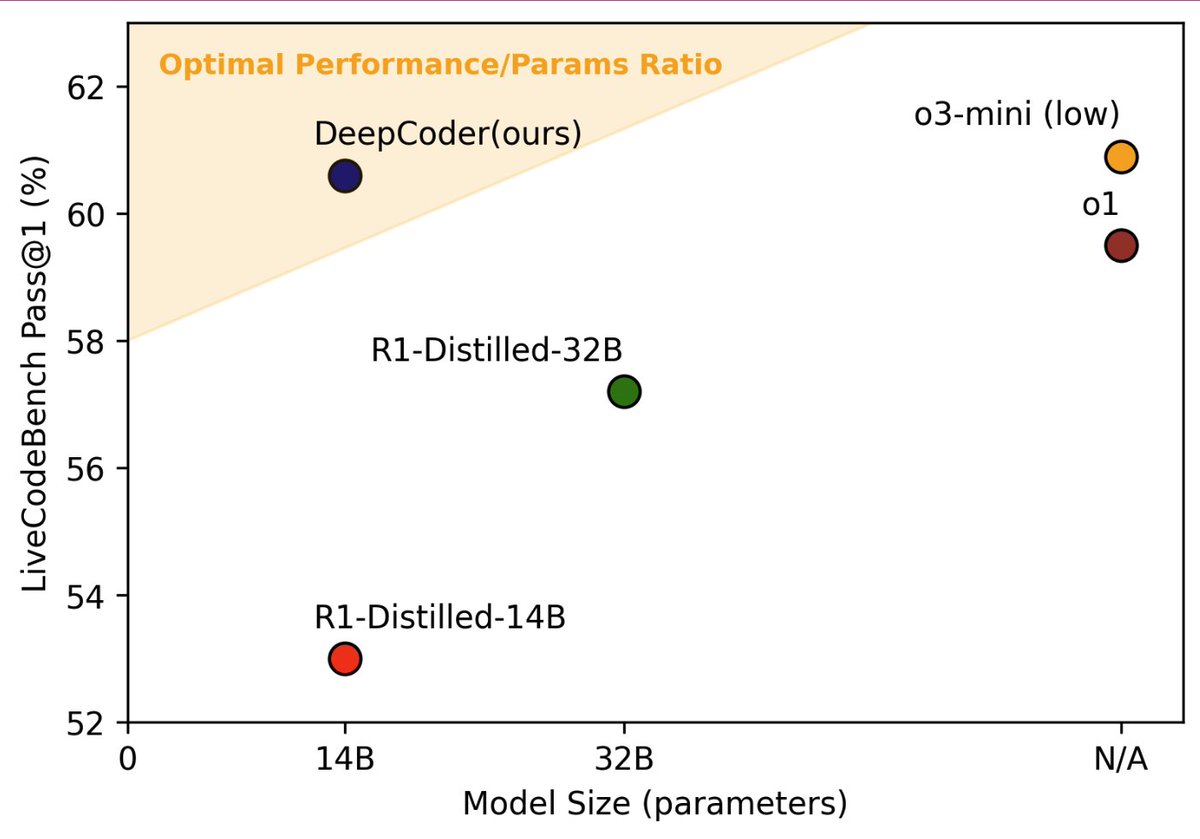

Announcing DeepCoder-14B, an o1- and o3-mini-level coding reasoning model, fully open-sourced! We're releasing everything: dataset, code, and training recipe.🔥 Built in collaboration with the Agentica Project team. See how we created it. 🧵

Hey Sam Altman, we know you're planning to open-source your reasoning model—but we couldn’t wait. Introducing DeepCoder-14B-Preview: a fully open-source reasoning model that matches o1 and o3-mini on both coding and math. And yes, we’re releasing everything: model, data, code, and

We're trending on Hugging Face models today! 🔥 Huge thanks to our amazing community for your support. 🙏