Adrien Bardes

@adrienbardes

Research Scientist @AIatMeta. Self-supervised learning, Video understanding, Visual world modelling. PhD @AIatMeta & @Inria.

ID: 787639668

http://adrien987k.github.io

28-08-2012 19:01:18

56 Tweets

804 Followers

234 Following

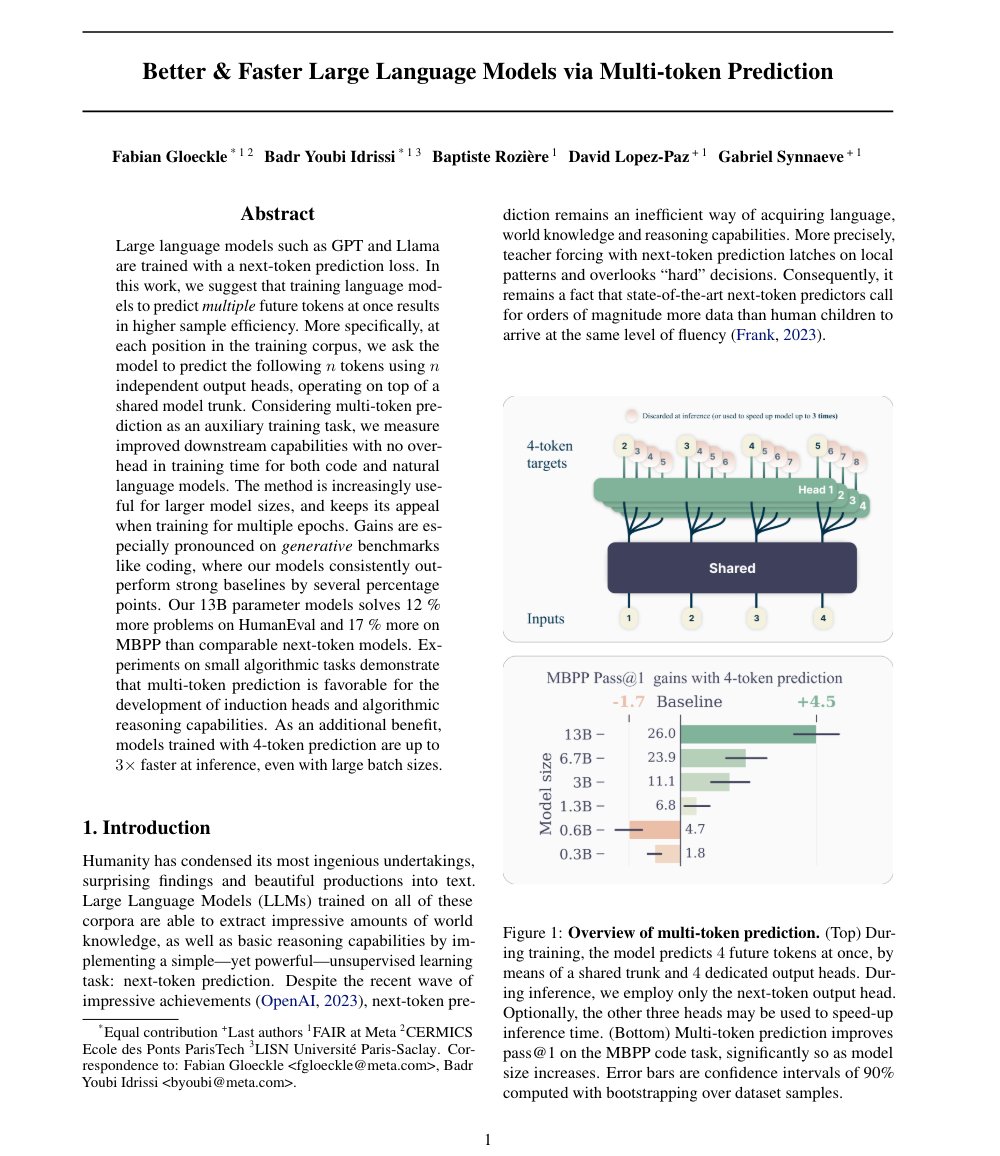

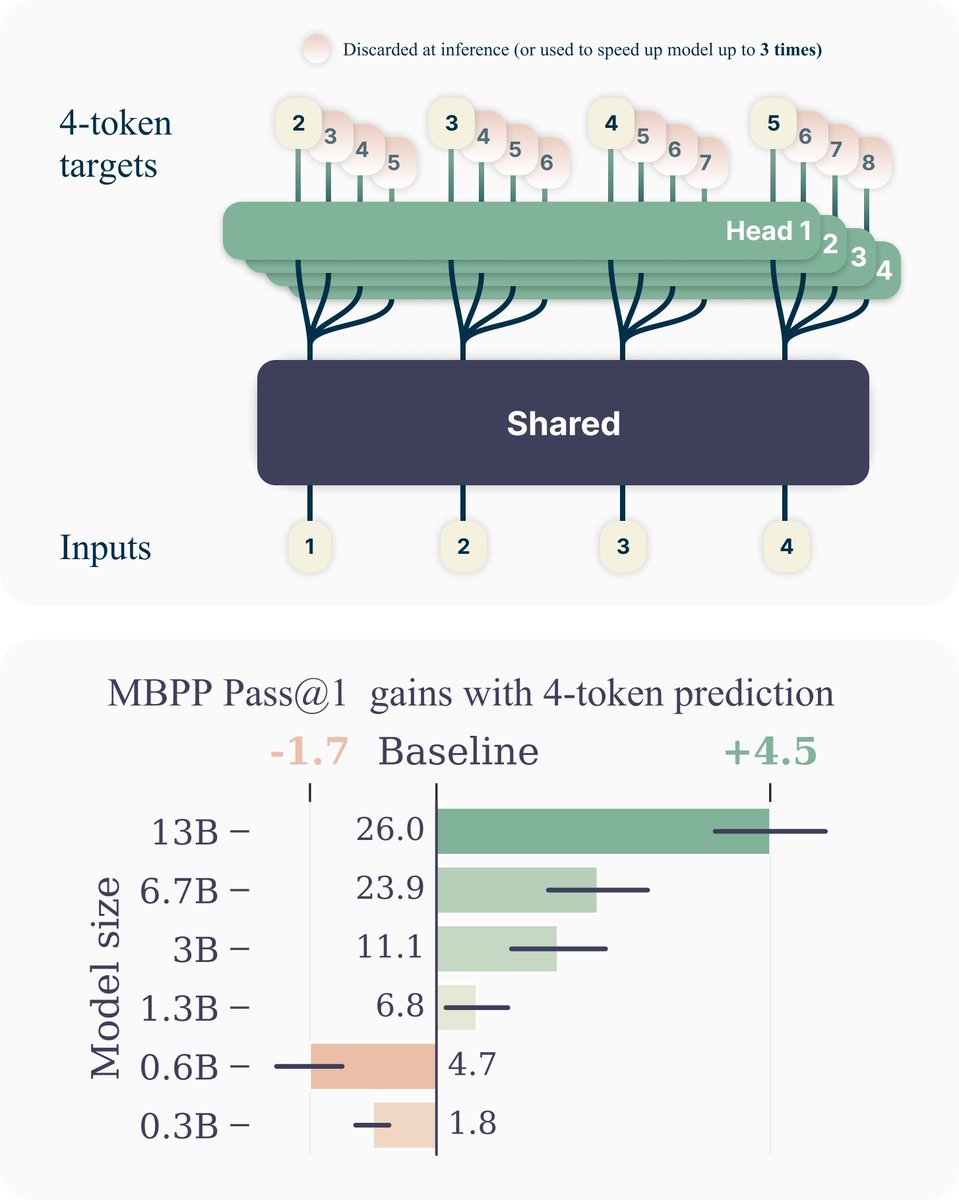

What happens if we make language models predict several tokens ahead instead of only the next one? In this paper, we show that multi-token prediction boosts language model training efficiency. 🧵 1/11 Paper: arxiv.org/abs/2404.19737 Joint work with Fabian Gloeckle

Yann LeCun from AI at Meta greets the public at the exclusive Open Source AI Day event at STATION F, at a panel with adriennejan from Scaleway, Thomas Wolf from Hugging Face and Patrick Pérez from kyutai. Join us at lnkd.in/gwphNpV2 We will continue by great...

Job alert 🚨 My team at AI at Meta is looking for a PhD intern to join us in 2025 in Paris. We are working on self-supervised learning from video, world modelling and JEPA! Apply here or reach out directly: metacareers.com/jobs/168411027…