Adithya Bhaskar

@adithyanlp

Second Year CS Ph.D. student at Princeton University (@princeton_nlp), previously CS undergrad at IIT Bombay

ID: 1669231860130660352

http://adithyabh.github.io

15-06-2023 06:34:30

39 Tweets

226 Followers

245 Following

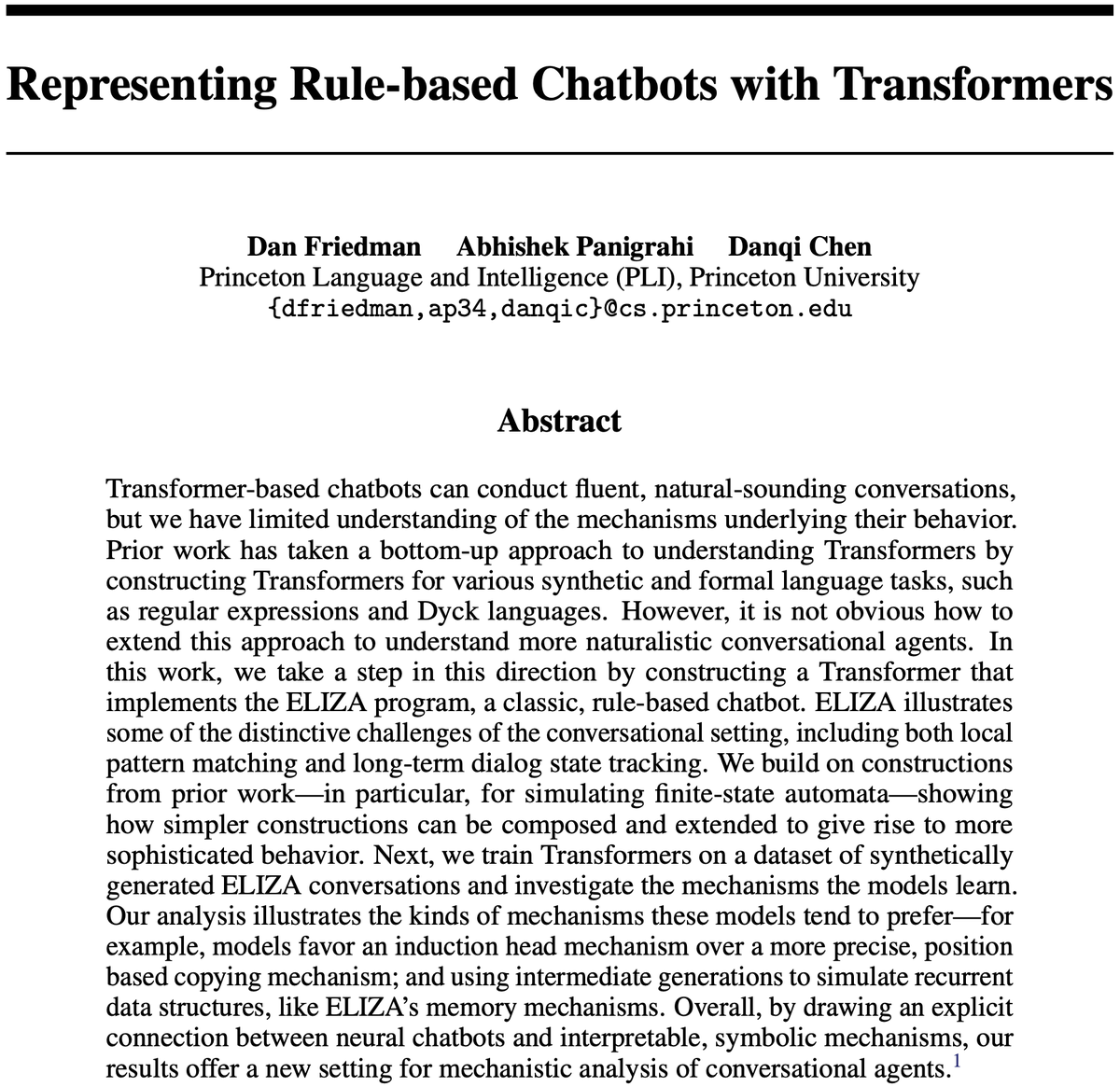

How can we understand neural chatbots in terms of interpretable, symbolic mechanisms? To explore this question, we constructed a Transformer that implements the classic ELIZA chatbot algorithm (with Abhishek Panigrahi and Danqi Chen). Paper: arxiv.org/abs/2407.10949 (1/6)

Yu Meng @ ICLR'25 (@yumeng0818) on Twitter:

Introducing SimPO: Simpler & more effective Preference Optimization!🎉

Significantly outperforms DPO w/o a reference model!📈

Llama-3-8B-SimPO ranked among top on leaderboards!💪

✅44.7% LC win rate on AlpacaEval 2

✅33.8% win rate on Arena-Hard

arxiv.org/abs/2405.14734

🧵[1/n]

![Yu Meng @ ICLR'25 (@yumeng0818) on Twitter photo](https://pbs.twimg.com/media/GOWj2X1XoAEPGbM.jpg)