Adam W. Harley

@adamwharley

Postdoc at Stanford. CMU Robotics PhD. I work on computer vision and machine learning.

ID: 1290112570146402306

https://adamharley.com 03-08-2020 02:30:01

246 Tweets

2.2K Followers

125 Following

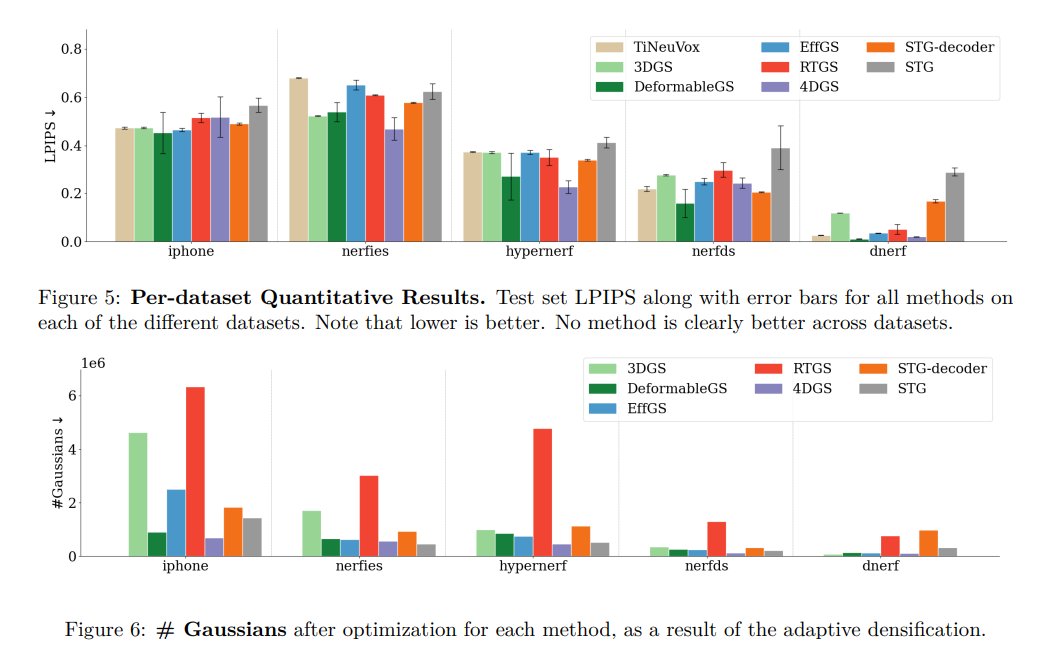

"Monocular Dynamic Gaussian Splatting is Fast and Brittle but Smooth Motion Helps"
Yiqing Liang, Mikhail Okunev, Mikaela Angelina Uy, Runfeng Li, Leonidas Guibas, James Tompkin, Adam W. Harley
tl;dr: benchmark for monocular dynamic GS
arxiv.org/abs/2412.04457

We are excited for our PixFoundation workshop at #CVPR2025. Note that our workshop starts at 8:30 am in room 101 E, Music City Center. Our first two speakers: F. Güney is an assistant professor at Koç University, and Adam W. Harley is a research scientist at Meta.