Adam Coates

@adampaulcoates

Has AI made the world better yet? Let's get on that. Director at Apple. Fmr Stanford PhD, Director Baidu SVAIL, @khoslaventures. #deeplearning #HPC #AI

ID: 2781430855

http://apcoates.com 31-08-2014 01:26:52

362 Tweets

31.31K Followers

287 Following

Learn concrete tips on how to successfully build useful #MachineLearning projects from @EmmanuelAmeisen, Head of AI at @InsightDataSci, and Adam Coates, operating partner at Khosla Ventures. blog.insightdatascience.com/how-to-deliver…

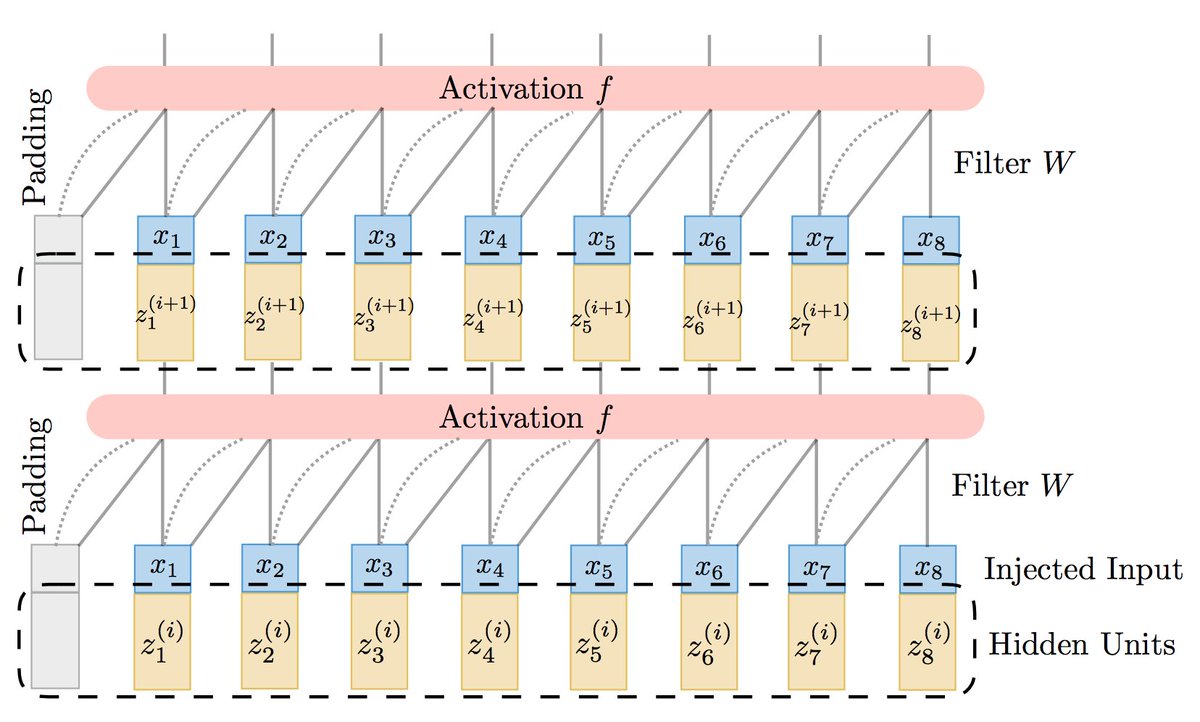

Got the "do I use an RNN or CNN for sequence modeling" blues? Use a TrellisNet, an architecture that connects these two worlds, and works better than either! New paper with Shaojie Bai and Vladlen Koltun. Paper: arxiv.org/abs/1810.06682 Code: github.com/locuslab/trell…

Good piece on managing competing priorities that are common to data projects blog.insightdatascience.com/how-to-deliver… via @EmmanuelAmeisen of @InsightDataSci and Adam Coates of Khosla Ventures #BigData #MachineLearning #DataScience #AI #ApacheSpark

WaveGlow: A non-autoregressive generative model for speech synthesis. Our unoptimized PyTorch implementation inverts mel-spectrograms at 500 kHz on a V100 GPU, and is easy to train. Paper: arxiv.org/abs/1811.00002 Samples: nv-adlr.github.io/WaveGlow Work with Ryan Prenger and Rafael Valle

Replying to Ankesh Anand, Edward Grefenstette, and Yann LeCun: I posted the slides for my model-based RL tutorial today here: people.eecs.berkeley.edu/~cbfinn/_files… Content is similar to the DRL bootcamp video from a year ago, but updated.

All materials for Berkeley AI Research's Deep Unsupervised Learning course are now up: sites.google.com/view/berkeley-… Great semester w/ Peter Chen, @Aravind7694, Jonathan Ho, and guest instructors Alec Radford, Ilya Sutskever, A Efros, and Aäron van den Oord. Covers: AR / PixelCNN, Flow models, VAE, GAN, self-supervised learning, etc.