Acyr Locatelli

@acyr_l

Lead pre-training @Cohere

ID: 294045100

06-05-2011 12:47:58

84 Tweets

563 Followers

870 Following

A moment for @Cohere and Cohere For AI team appreciation. 💙 #NeurIPS2024 - stop by the booth to catch up with our team, or find us throughout the conference.

Check out Laura Ruis on Machine Learning Street Talk discussing her work on understanding LLM reasoning at Cohere, along with fantastic collaborators 🔥

Excited to announce that @Cohere and Cohere Labs models are the first supported inference provider on Hugging Face Hub! 🔥 Looking forward to this new avenue for sharing and serving our models, including the Aya family and Command suite of models.

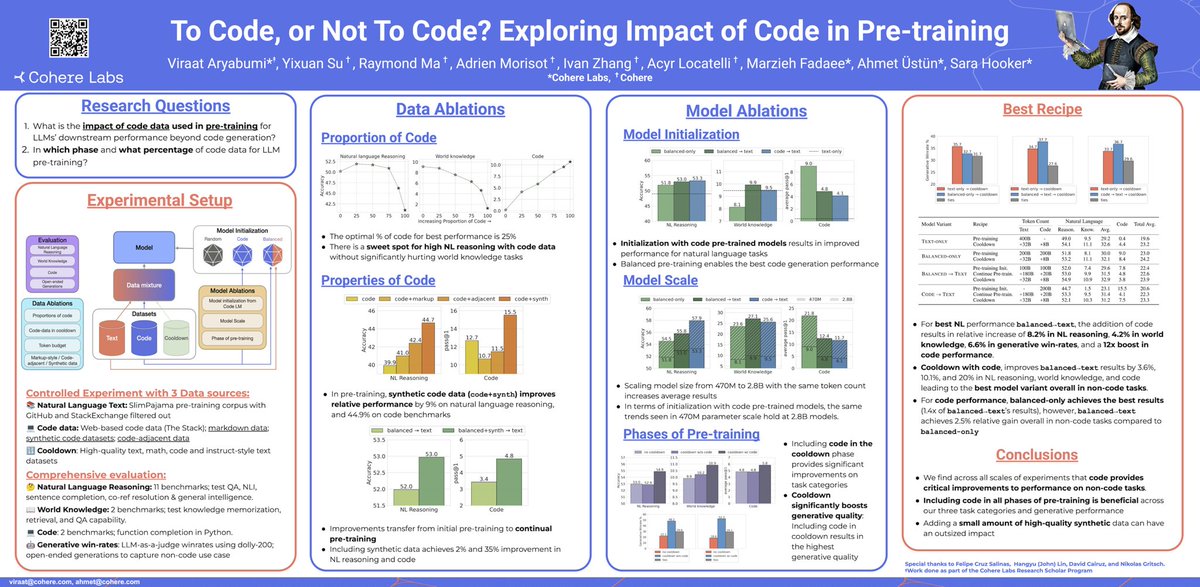

Very proud of this work, which is being presented at ICLR 2026 later today. I will not be there, but catch up with Viraat Aryabumi and Ahmet Üstün, who are both fantastic and can share more about our work at both Cohere Labs and Cohere. 🔥✨