Lequn Chen

@abcdabcd987

Faster and cheaper LLM inference.

ID: 470747166

https://abcdabcd987.com

22-01-2012 03:26:52

25 Tweets

893 Followers

559 Following

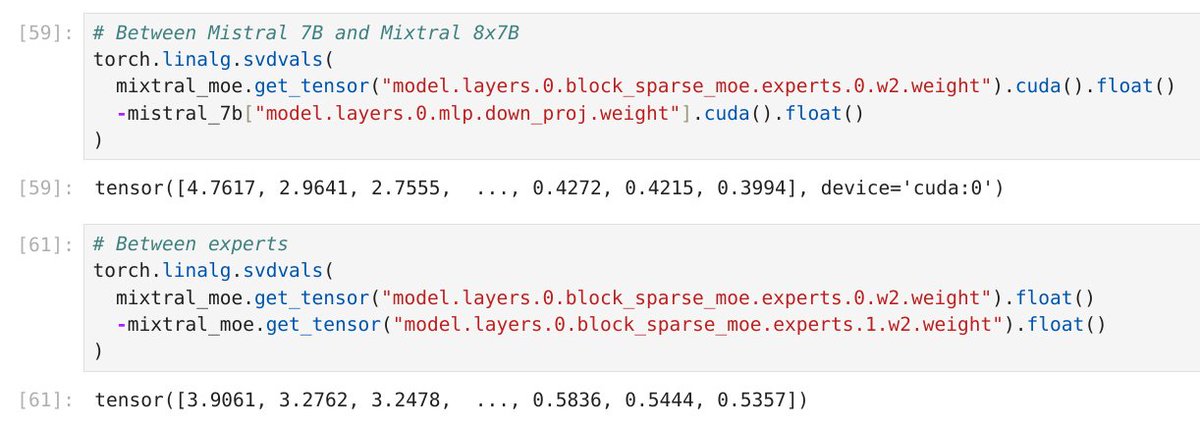

Really good observation from Tianle Cai and Junru Shao. I did a quick sanity check: the delta between Mixtral 8x7B MoE and Mistral 7B is NOT low-rank, so SGMV is not applicable here. We need new research :)
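The tweet does not say how the sanity check was done. One common way to test whether a weight delta is low-rank is to inspect its singular value spectrum: a LoRA-style delta of rank r puts essentially all of its energy in the top r singular values. The sketch below assumes NumPy, uses synthetic random matrices in place of real Mixtral/Mistral weights, and introduces a hypothetical helper `effective_rank` with an arbitrary 90% energy threshold. It illustrates the idea only and is not the author's check.

```python
import numpy as np


def effective_rank(delta: np.ndarray, energy: float = 0.9) -> int:
    """Smallest r such that the top-r singular values of `delta`
    capture `energy` of its squared Frobenius norm (hypothetical helper)."""
    s = np.linalg.svd(delta, compute_uv=False)  # singular values, descending
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    # First index where the cumulative energy reaches the threshold, +1 for a count.
    return int(np.searchsorted(cumulative, energy) + 1)


rng = np.random.default_rng(0)
d, k, r = 256, 256, 8

# Synthetic stand-ins (assumption): in a real check, delta would be something
# like an expert FFN weight minus the corresponding Mistral 7B weight.
low_rank_delta = rng.standard_normal((d, r)) @ rng.standard_normal((r, k))  # LoRA-like B @ A
full_delta = rng.standard_normal((d, k))  # dense, not low-rank

print("low-rank delta:", effective_rank(low_rank_delta))
print("dense delta:   ", effective_rank(full_delta))
```

On this synthetic data the rank-8 delta needs at most 8 singular values, while the dense delta needs a large fraction of its 256 dimensions, which is what "NOT low-rank" means in practice.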

Go Lequn Chen! Great work on making lots of LoRAs cheap to serve. Nice collaboration with Zihao Ye, Arvind Krishnamurthy and others! #mlsys24 arxiv.org/abs/2310.18547
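SGMV (Segmented Gather Matrix-Vector multiplication) is the operator from the linked Punica paper for batching requests that use different LoRA adapters. The NumPy function below is a hypothetical, loop-based reference for the math only, not Punica's CUDA kernel or its API: requests are grouped into contiguous segments, and each segment gathers its own adapter's low-rank factors A and B to compute the LoRA contribution x @ A @ B. The names `sgmv_reference`, `seg_starts`, and `adapter_ids` and the tensor layout are assumptions made for illustration.

```python
import numpy as np


def sgmv_reference(x, lora_a, lora_b, seg_starts, adapter_ids):
    """Hypothetical reference semantics of a segmented gather matmul for batched LoRA.

    x:           (batch, d_in) inputs, grouped so each segment uses one adapter
    lora_a:      (n_adapters, d_in, r) "A" factors
    lora_b:      (n_adapters, r, d_out) "B" factors
    seg_starts:  segment boundaries, length n_segments + 1
    adapter_ids: adapter index used by each segment
    Returns the LoRA contribution of shape (batch, d_out).
    """
    d_out = lora_b.shape[2]
    y = np.zeros((x.shape[0], d_out), dtype=x.dtype)
    for seg, adapter in enumerate(adapter_ids):
        lo, hi = seg_starts[seg], seg_starts[seg + 1]
        # Gather this segment's adapter and apply the low-rank product.
        y[lo:hi] = x[lo:hi] @ lora_a[adapter] @ lora_b[adapter]
    return y


rng = np.random.default_rng(0)
n_adapters, d_in, d_out, r = 3, 16, 16, 4
lora_a = rng.standard_normal((n_adapters, d_in, r))
lora_b = rng.standard_normal((n_adapters, r, d_out))
x = rng.standard_normal((5, d_in))

# Rows 0-1 use adapter 2, row 2 uses adapter 0, rows 3-4 use adapter 2.
seg_starts = [0, 2, 3, 5]
adapter_ids = [2, 0, 2]
y = sgmv_reference(x, lora_a, lora_b, seg_starts, adapter_ids)
print(y.shape)
```

Each segment multiplies only by d_in × r and r × d_out factors, so the savings depend on r being small; a delta that is not low-rank gives no such shortcut.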