Shruti Joshi

@_shruti_joshi_

phd student in identifiable repl @Mila_Quebec. prev. research programmer @MPI_IS Tübingen, undergrad @IITKanpur '19.

ID: 1026105870818529281

https://shrutij01.github.io/ 05-08-2018 14:01:18

176 Tweets

375 Followers

817 Following

Presenting tomorrow at #EMNLP2023: MAGNIFICo: Evaluating the In-Context Learning Ability of Large Language Models to Generalize to Novel Interpretations w/ amazing advisors and collaborators 🇺🇦 Dzmitry Bahdanau, Siva Reddy, and Satwik Bhattamishra

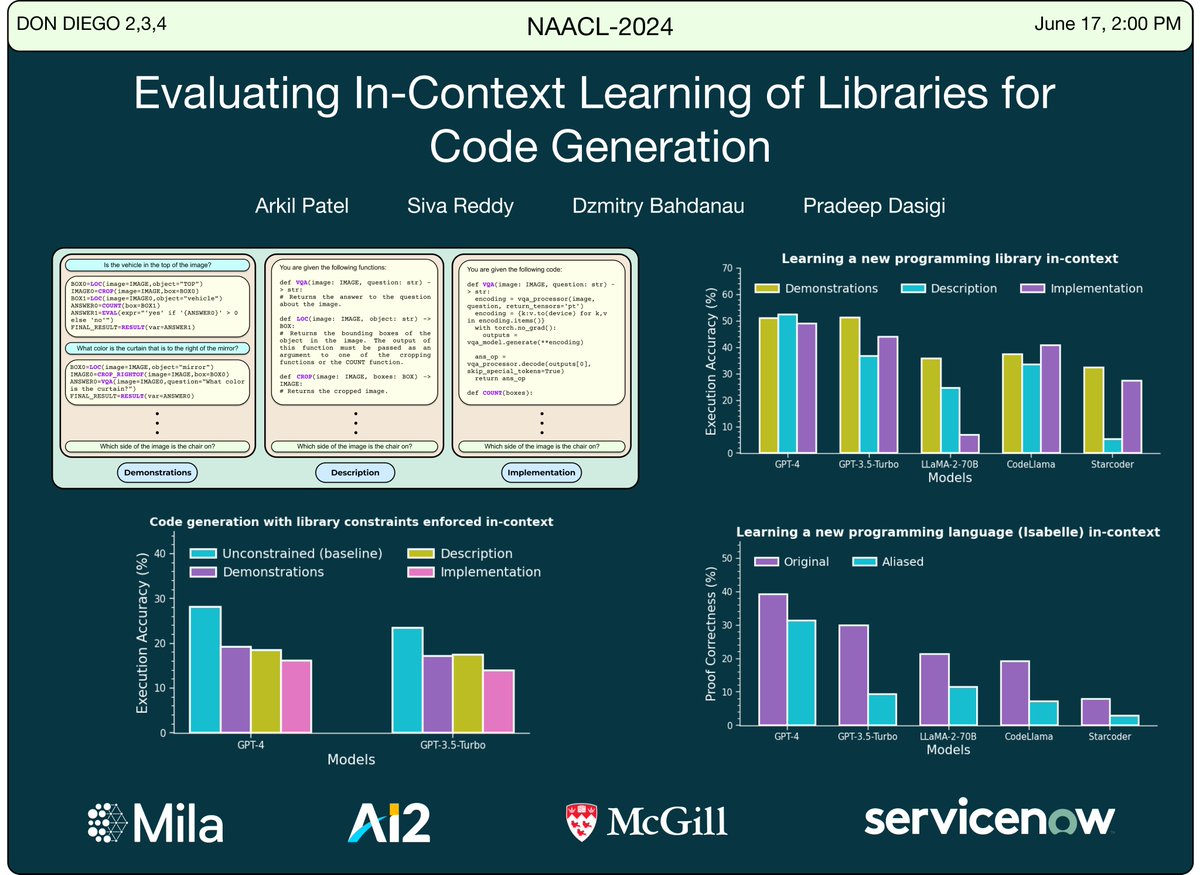

Presenting tomorrow at #NAACL2024: Can LLMs in-context learn to use new programming libraries and languages? Yes. Kind of. Internship Ai2 work with Pradeep Dasigi and my advisors 🇺🇦 Dzmitry Bahdanau and Siva Reddy.

I am thrilled to announce that I will be joining the Gatsby Computational Neuroscience Unit at UCL as a Lecturer (Assistant Professor) in Feb 2025! Looking forward to working with the exceptional talent at Gatsby Computational Neuroscience Unit on cutting-edge problems in deep learning and causality.

Presenting ✨ CHASE: Generating challenging synthetic data for evaluation ✨ Work w/ fantastic advisors 🇺🇦 Dzmitry Bahdanau and Siva Reddy Thread 🧵: