carlos

@_carlosejimenez

phd student @princeton_nlp

ID: 1124834159841562624

https://www.carlosejimenez.com/ 05-05-2019 00:32:16

240 Tweets

1.1K Followers

451 Following

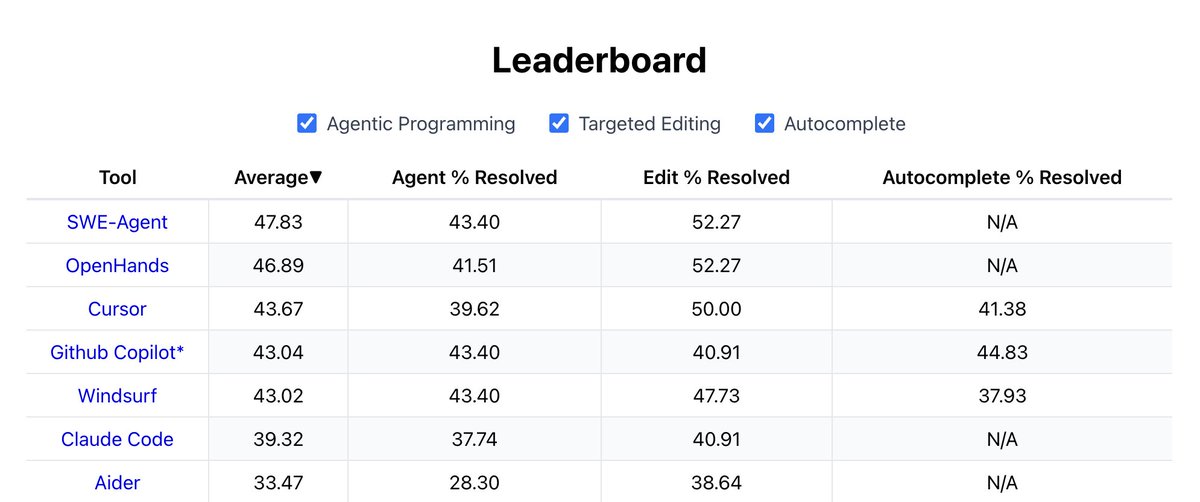

Can language model systems autonomously complete entire tasks end-to-end? In our next Expert Exchange webinar, Ofir Press explores autonomous LM systems for software engineering, featuring SWE-bench and SWE-agent—used by OpenAI, Meta, & more. 🔗 Register: