Amr Abdullatif

@__amrabdullatif

Assistant Professor in Computer Science @UniofBradford. Machine learning researcher @BHGECO. PhD @UniGenova. Researcher at @ScuolaSantAnna. Opinions are my own.

ID: 1102944776222052352

https://www.linkedin.com/in/amrabdullatif/

05-03-2019 14:51:41

76 Tweets

33 Followers

188 Following

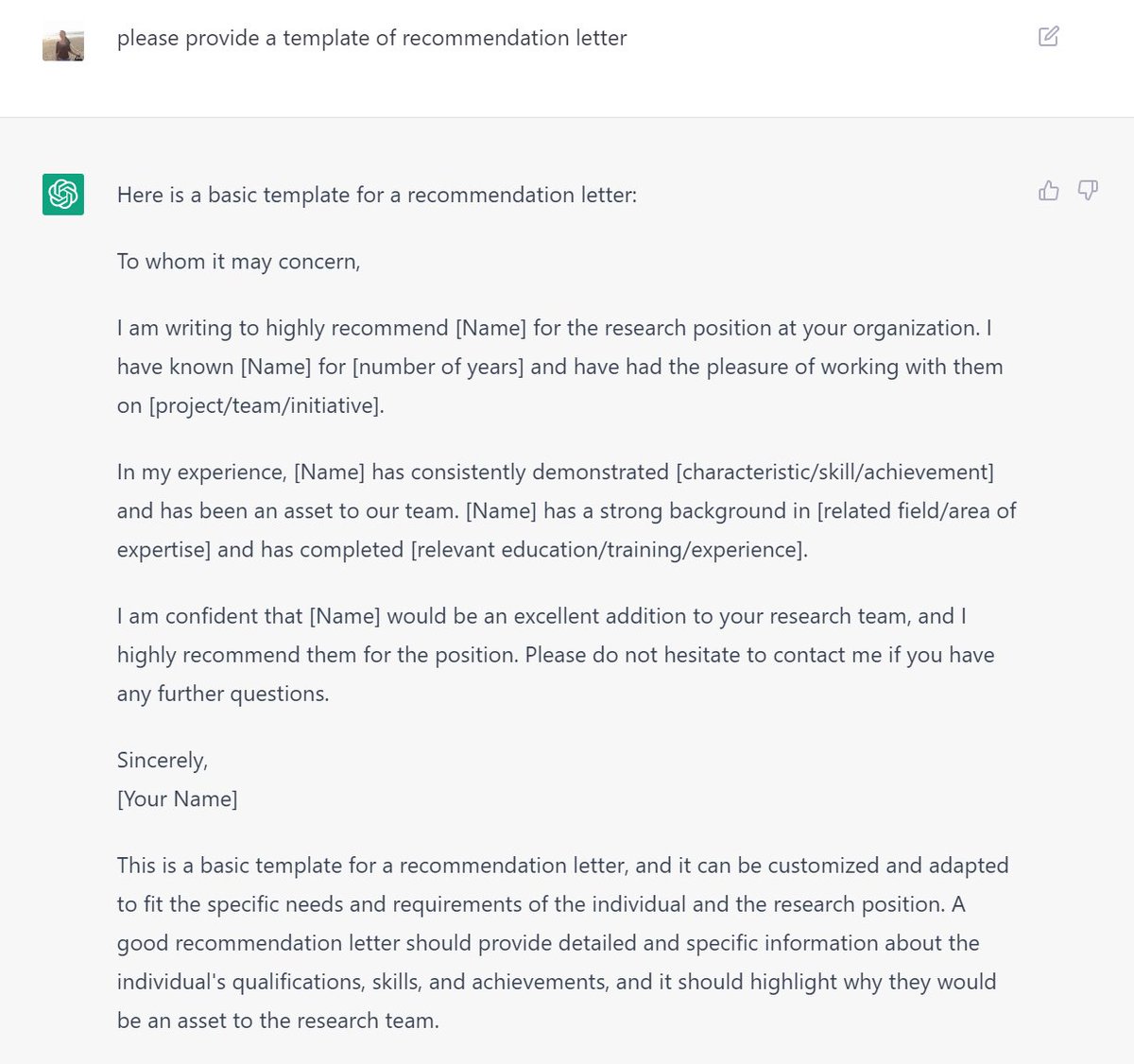

Very excited to share some personal news! Jonathan Whitaker, Pedro Cuenca, apolinario 🌐, and I are writing a book with @oreilly about generative ML 🤗 We'll cover many topics, from theory to practical aspects, discuss creative applications, and more! What topics would you like to see?

No more GIL! The Python team has officially accepted the proposal. Congrats to Sam Gross on his brilliant multi-year effort to remove the GIL, and a heartfelt thanks to the Python Steering Council and Core team for a thoughtful plan to make this a reality. discuss.python.org/t/a-steering-c…

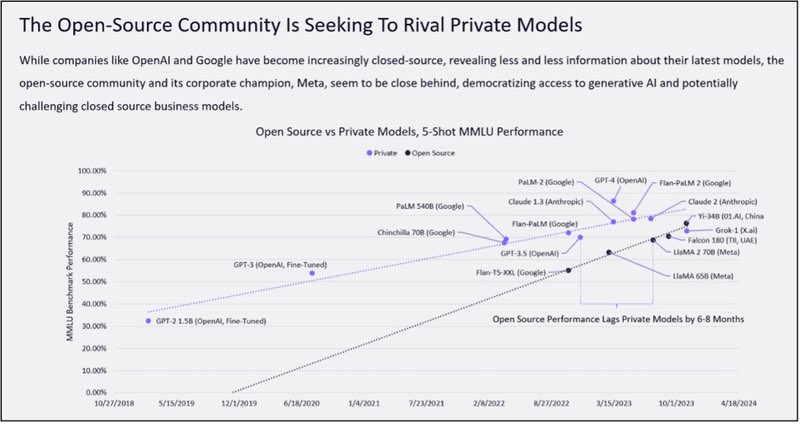

This is perhaps one of the most important charts on AI for 2024. It was built by the amazing research team at Cathie Wood's ARK Invest. It shows that open source local models are on a path to overtake massive (and expensive) cloud-based closed models.