Ziming Liu

@ZimingLiu11

PhD student@MIT, AI for Physics/Science, Science of Intelligence & Interpretability for Science

ID:1390673534033092608

https://kindxiaoming.github.io/

07-05-2021 14:23:11

402 Tweets

8.6K Followers

632 Following

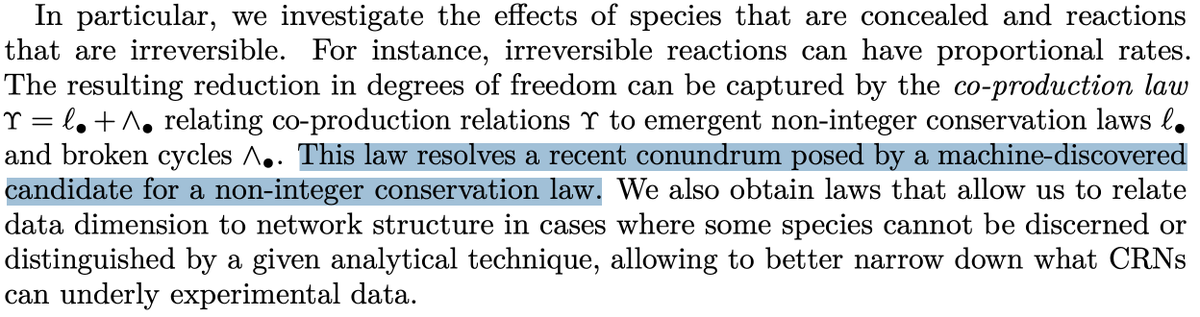

Deciphering a neural network’s insides has been near impossible, and researchers hunt for any clue they can find.

Recently, they discovered a new one.

Anil Ananthaswamy reports:

quantamagazine.org/how-do-machine…

I first heard Irina Rish mention grokking in neural networks on Paul Middlebrooks's Brain Inspired podcast! That was in early '22. Years later, here's a Quanta Magazine story on grokking and the follow-up detective work of Neel Nanda, Ziming Liu and others: quantamagazine.org/how-do-machine…

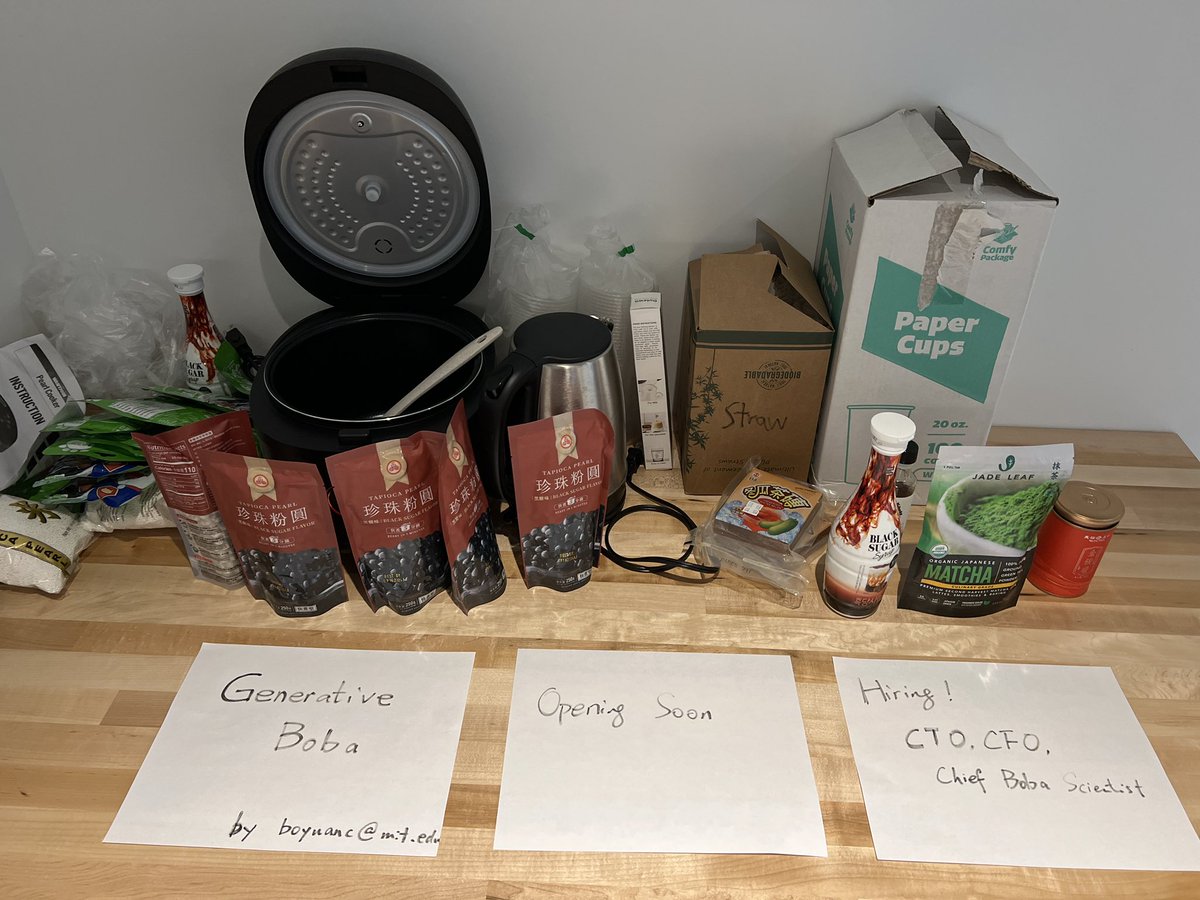

I quit my PhD (for a day) and opened a boba shop at Massachusetts Institute of Technology (MIT): Generative Boba! It's a huge success. It's right next to our office, so all the AI researchers are enjoying it. Check out our boba diffusion algorithm in the poster to understand why boba generation is so important to MIT CSAIL!

Giving the Presidential Lecture tomorrow at the Simons Foundation's Flatiron Institute:

'The Next Great Scientific Theory is Hiding Inside a Neural Network' simonsfoundation.org/event/the-next…

Will be in NYC until the 10th – please get in touch if you would like to chat!

🥳🥳🥳 We are excited to share that the AI for Science workshop will be held again at ICML 2024 in Vienna! This time, we focus on scaling in AI for Science (as a new dimension alongside theory, methodology and discovery)! A tentative schedule can be found at: ai4sciencecommunity.github.io/icml24.html

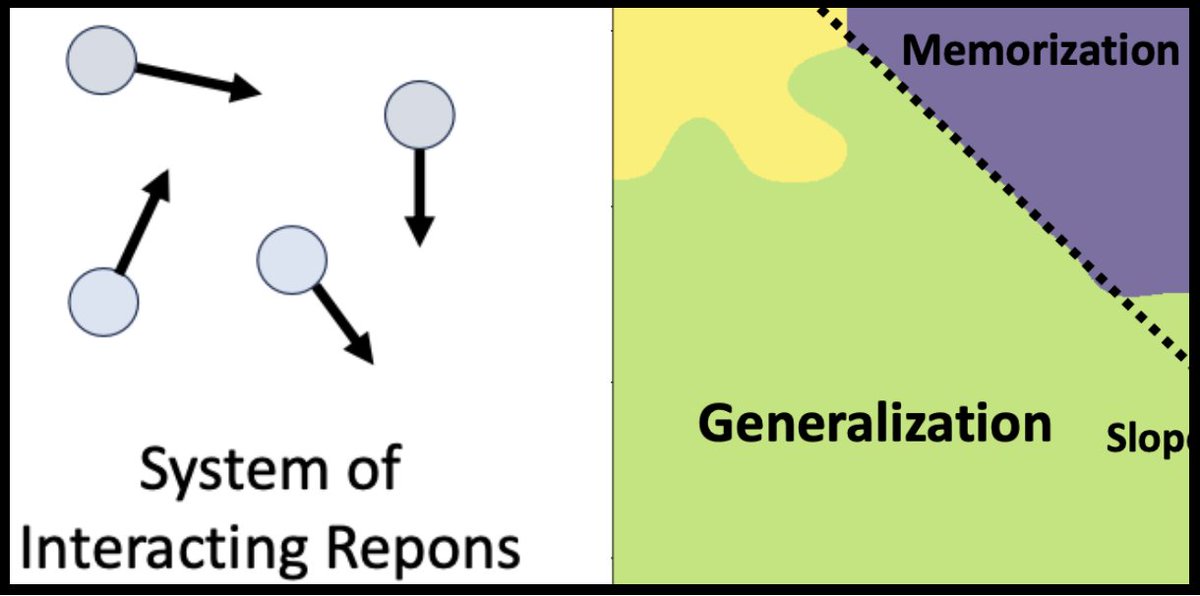

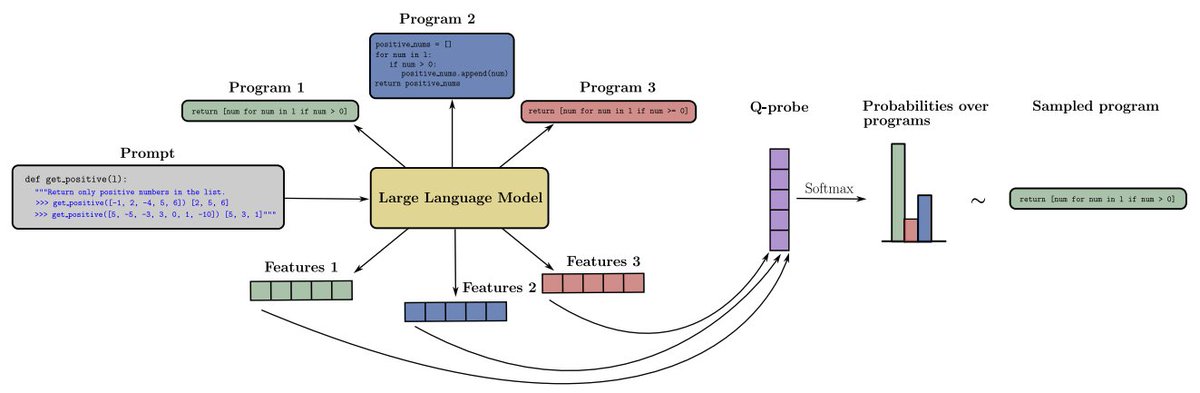

Our new paper shows how a machine learning to generalize can be modeled as representations interacting like particles ("repons"). We also predict how much data is needed, and find a Goldilocks zone where the decoder is neither too weak nor too powerful. Ziming Liu, David D. Baek